The Ethics of Artificial Intelligence (AI)

Programme Code: SOM-NON TEOAI

SFC-eligible

Course Outline & Highlights

Artificial Intelligence (AI) has boundless potential to reshape society, and it is important to have a robust understanding of the ethical, legal, and social implications of its incorporation into our lives.

This course delves into the intersection of ethics, AI technology and law, and is led by a panel of distinguished academics and leading thinkers in these fields from around the world.

Learners will acquire insights into:

- ethical issues such as transparency and fairness in society with the increasing use of AI;

- moral decision-making frameworks for AI, and issues with how AI processes data and makes decisions, like or unlike a human being;

- the legal implications of deploying AI technologies; and

- the longer-term social implications as new AI technologies are developed and deployed in even the most private aspects of our lives (including medicine and healthcare).

Who Should Attend

- AI researchers and technology specialists

- Healthcare professionals

- Academics and educators

- Legal practitioners

- Government officials & policy analysts

Why Attend

- Better recognise the potential ethical, legal, and social impact of new AI technologies.

- Be better equipped to understand, analyse, and articulate the ethical dilemmas and challenges that can arise from the introduction or implementation of new AI technologies.

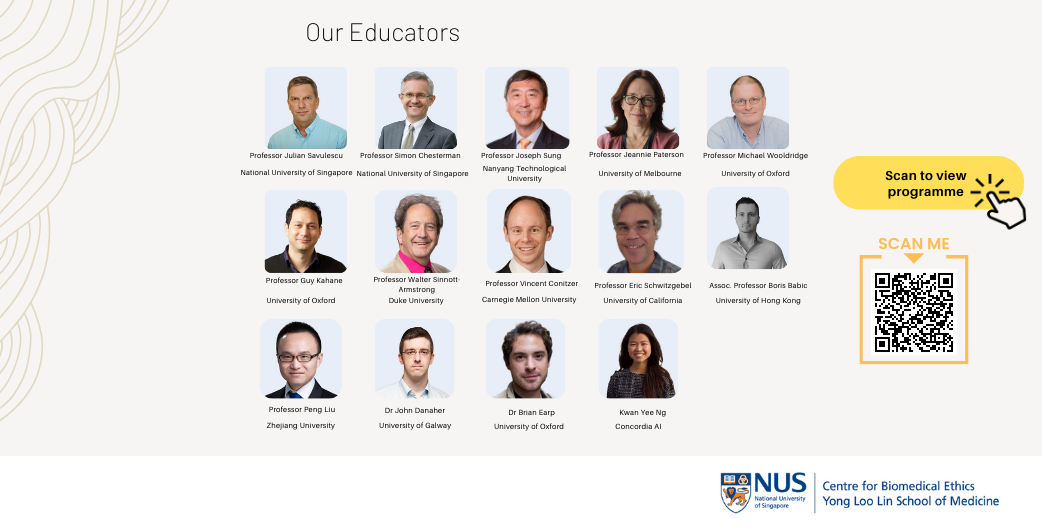

Educators

Professor Julian Savulescu

National University of Singapore

Professor Simon Chesterman

National University of Singapore

Professor Joseph Sung

Nanyang Technological University

Professor Jeannie Paterson

University of Melbourne

Professor Michael Wooldridge

University of Oxford

Professor Guy Kahane

University of Oxford

Professor Walter Sinnott-Armstrong

Duke University

Professor Vincent Conitzer

Carnegie Mellon University

Professor Eric Schwitzgebel

University of California, Riverside

Assoc. Prof Boris Babic

University of Hong Kong

Dr Peng Liu

Zhejiang University

Dr John Danaher

University of Galway

Dr Brian Earp

University of Oxford

Kwan Yee Ng

Concordia AI

Course Structure

The course comprises a series of topics that cover a diverse range of subjects in AI. Each topic consists of two parts:

Part 1

Delivery Mode:

- A pre-recorded video lecture by the speaker for that topic.

- View anytime online from 30 April 2024, with subtitles in English & Chinese.

Duration:

40 – 50 minutes

Part 2

Delivery Mode:

- Synchronous ‘live’ online Zoom dialogue session where you can engage in further discussion with the speaker for that topic.

- The date & time for each dialogue session are shown in the topic schedule below.

Duration:

60 – 70 minutes

14 May 2024 (Tue)

5.00pm – 6.10pm (SGT)

10.00am – 11.10am (BST)

Speaker : Professor Michael Wooldridge, University of Oxford

The idea of “Artificial Intelligence” is not as novel as one might think, having developed alongside the technological advancements in the era of modern computing. Learn about the different strands of AI that have been developed through the years, and continue to be developed today. Consider their different characteristics, and from that, the likelihood (or unlikelihood) that different forms of AI can impact on various aspects of our lives.

16 May 2024 (Thu)

5.00pm – 6.10pm (SGT/HKT)

Speaker : Associate Professor Boris Babic, University of Hong Kong

How does any particular piece of AI technology work? Does it operate in a well-defined or clearly understood manner, or is it a ‘black box’ that nonetheless produces reliable results? Is such a distinction important – whether from a technical or an ethical point of view? Explore these questions and more in this topic.

21 May 2024 (Tue)

8.30pm – 9.40pm (SGT)

8.30am – 9.40am (EDT)

Speaker : Professor Vincent Conitzer (Carnegie Mellon University)

Does an AI system process data and make decisions in a manner that is the same as, or at least functionally similar to, that of a human being? If not, is it still possible to ensure that AI systems reflect human values and moral reasoning? Conversely, is that even important? Consider the possible reasons and justifications for having automated moral reasoning by AI.

23 May 2024 (Thu)

5.00pm – 6.10pm (SGT)

Speaker : Professor Simon Chesterman (NUS)

Explore the complex landscape of AI governance, acknowledging its dual nature as both an opportunity and a threat. Delve into the intricacies of regulating AI, addressing challenges to traditional oversight, and evaluating essential regulatory frameworks in terms of timing and methods. Assess the global impact of AI from the perspective of international law and institutions, considering participation, coherence, and collaboration of different stakeholders.

28 May 2024 (Tue)

5.00pm – 6.10pm (SGT/HKT)

Speaker : Kwan Yee Ng, Concordia AI

The stage appears set for China and the rest of the world to increase cooperation on AI safety issues. With the prospect of further dizzying advances in the capabilities of frontier AI models, humanity appears woefully underprepared for the potential future challenges these will pose. It seems plausible that frontier systems will soon be able to assist in developing new biochemical weapons, exacerbate disinformation problems, and possibly even escape human control. This topic will review the landscape of Chinese domestic governance, international governance, technical research, expert views, lab self-governance, and public opinion with regard to AI safety, and conclude with preliminary suggestions on international AI governance cooperation.

31 May 2024 (Fri)

8.00am – 9.10am (SGT)

30 May 2024 (Thu)

5.00pm – 6.10pm (PDT)

Speaker : Professor Eric Schwitzgebel, University of California, Riverside

As AI systems become more advanced and ‘human-like’, the question inevitably arises whether they should be treated more like human beings. Explore the possible implications of granting rights to an AI entity, including the ethical, legal and possibly emotional challenges. Examine a future scenario where advanced AI may merit specific rights, which may differ from or even exceed what we understand to be human rights.

4 June 2024 (Tue)

5.00pm – 6.10pm (SGT)

10.00am – 11.10am (BST)

Speaker : Professor Guy Kahane, University of Oxford

Will it be possible in future to live a life entirely in virtual reality? Embark on a thought-provoking journey questioning the richness of virtual reality compared to tangible experiences. Immerse yourself in a discourse on “Virtual Realism” and explore the secrets to a meaningful life in the virtual realm, and then contemplate the link to life in our ‘real’ non-virtual existence.

6 June 2024 (Thu)

5.00pm – 6.10pm (SGT)

10.00am – 11.10am (IST)

Speaker : Dr John Danaher, University of Galway

Building on a popular science fiction idea of an almost human-like synthetic being, this topic examines the possible ethical arguments, both for and against, why an AI entity should or should not be considered a human person. When we interrogate the issue of whether such an entity can or should be considered the same, and be given the same rights, as a human being, the inevitable question arises: what does it mean to be “human”?

11 June 2024 (Tue)

5.00pm – 6.10pm (SGT)

Speaker : Professor Joseph Sung, Nanyang Technological University

Hear first-hand from one who is eminently qualified to speak on the impact of introducing AI in medicine and healthcare – a specialist in gastroenterology and hepatology, a researcher of new medical technologies and techniques, and a leader in medical education and academia. Explore the wide scope for AI to be introduced in the field of medicine, and consider the medico-legal, ethical and professional implications it will have on the medical profession.

13 June 2024 (Thu)

8.00pm – 9.10pm (SGT)

8.00am – 9.10am (EDT)

Speaker : Professor Walter Sinnott-Armstrong, Duke University

Explore the ethical implications of using AI to predict human moral judgments across diverse fields. Investigate a hybrid approach combining a ‘top-down’ approach based on bioethical principles with a ‘bottom-up’ approach using data-driven models, demonstrated through a pilot study on the allocation of donor kidneys for transplant.

18 June 2024 (Tue)

5.00pm – 6.10pm (SGT)

Speaker : Professor Julian Savulescu, National University of Singapore

Navigate the integration of personalised Large Language Models (LLMs), with emphasis on essential considerations for the future of AI in medicine and healthcare. Explore the ‘co-creation model’ in the utilisation of AI, highlighting its potential for genuine human progress. Investigate the capabilities of Moral AI, acting as personalised moral ‘gurus’ and assisting in ethical decision-making for individuals.

20 June 2024 (Thu)

5.00pm – 6.10pm (SGT)

7.00pm – 8.10pm (AEST)

Speaker : Professor Jeannie Paterson, University of Melbourne

The apparent lack of transparency in how AI operates poses ethical and legal issues. This is especially so for AI systems that interact with and directly impact consumers and the lay public, and is further compounded when it involves the health and well-being of the person. Is it possible to have transparency in how an AI system functions? Can we make transparency work in AI?

25 June 2024 (Tue)

5.00pm – 6.10pm (SGT/CST)

Speaker : Dr Peng Liu, Zhejiang University

This lecture delves into public attitudes towards AI technology and its acceptability to the public. The topics discussed will encompass the complexity and measures of public attitudes, the diverse factors influencing public attitudes and acceptability, and psychological theories for explaining public acceptability.

27 June 2024 (Thu)

5.00pm – 6.10pm (SGT)

10.00am – 11.10am (BST)

Speaker: Dr Brian Earp, University of Oxford

Humans are increasingly not only delegating tasks to artificial intelligence (AI), but also “collaborating” with AI to produce new outputs, which may themselves be either beneficial to society (e.g. creative artworks) or harmful to society (e.g. propaganda, disinformation). When humans jointly create beneficial or harmful material in collaboration with AI, how should credit and blame be assigned? This topic examines credit and blame attributions for human-AI joint creations and asks how personalising AI (e.g. making a “digital twin” of oneself by fine-tuning a large language model) affects these attributions. Different uses of personalised and non-personalised AI (e.g. for predicting patient preferences or taking consent) in medicine will be highlighted with a discussion of implications for patient autonomy.

Schedule : Synchronous Online Dialogue Sessions

| Topic | Speaker | Date & Time of Dialogue Session |
|---|---|---|
| Topic 1 : History of Artificial Intelligence | Professor Michael Wooldridge (Oxford) | 14 May 2024 (Tue) 5.00pm – 6.10pm (SGT) |
| Topic 2 : Explainability, Interpretability or Justifiability | A/Prof Boris Babic (HKU) | 16 May 2024 (Thu) 5.00pm – 6.10pm (SGT) |
| Topic 3 : Moral Decision-Making Frameworks for Artificial Intelligence | Professor Vincent Conitzer (Carnegie Mellon) | 21 May 2024 (Tue) 8.30pm – 9.40pm (SGT) |
| Topic 4 : From Ethics to Law – Whether, When and How to Regulate AI | Professor Simon Chesterman (NUS) | 23 May 2024 (Thu) 5.00pm – 6.10pm (SGT) |
| Topic 5 : The State of AI Safety in China | Kwan Yee Ng (Concordia AI) | 28 May 2024 (Tue) 5.00pm – 6.10pm (SGT) |
| Topic 6 : Puzzles About The Moral Status of AI Systems | Professor Eric Schwitzgebel (UC Riverside) | 31 May 2024 (Fri) 8.00am – 9.10am (SGT) |
| Topic 7 : Meaning and Value in Virtual Reality | Professor Guy Kahane (Oxford) | 4 June 2024 (Tue) 5.00pm – 6.10pm (SGT) |
| Topic 8 : The Moral Status of AI – Can AI Be People? | Dr John Danaher (NUI Galway) | 6 June 2024 (Thu) 5.00pm – 6.10pm (SGT) |
| Topic 9 : Co-piloting With AI in Healthcare | Professor Joseph Sung (NTU) | 11 June 2024 (Tue) 5.00pm – 6.10pm (SGT) |
| Topic 10 : Using AI to Predict Human Moral Judgments – Case Study of Kidney Allocation | Professor Walter Sinnott-Armstrong (Duke) | 13 June 2024 (Thu) 8.00pm – 9.10pm (SGT) |
| Topic 11 : Ethics of Personalised Large Language Models | Professor Julian Savulescu (NUS) | 18 June 2024 (Tue) 5.00pm – 6.10pm (SGT) |
| Topic 12 : Misleading AI – Frauds, Fakes and the Value of Transparency | Professor Jeannie Paterson (Melbourne) | 20 June 2024 (Thu) 5.00pm – 6.10pm (SGT) |
| Topic 13 : Public Attitudes and Acceptability of AI Technology | Dr Peng Liu (Zhejiang) | 25 June 2024 (Tue) 5.00pm – 6.10pm (SGT) |
| Topic 14 : Credit, Blame, Autonomy and Consent in Medical AI | Dr Brian Earp (Oxford) | 27 June 2024 (Thu) 5.00pm – 6.10pm (SGT) |

Pre-Requisites & Assessment

- No pre-requisites.

- Assessment : Learners who take the full course using their SkillsFuture Credits (SFCs) are required to take an online multiple-choice test at the end of the series.

Awards and Certification

Learners who complete the course will be awarded a digital Certificate of Completion.

Course Fee

We offer flexible pricing bundles so that you can tailor your learning experience to your individual interests and needs.

Learners can enrol for the full course of all topics, or select any combination of 3, 6, 9 or 12 topics. Each topic includes access to the pre-recorded video lecture by the topic speaker and the live online dialogue session with the speaker to discuss the topic.

| No. of Topics | Course Fee (excluding GST) S$ | Course Fee (including GST) S$ |
|---|---|---|
| 3 | $600.00 | $654.00 |
| 6 | $1,140.00 | $1,242.60 |
| 9 | $1,620.00 | $1,765.80 |
| 12 | $2,160.00 | $2,354.40 |
| Full Course (All Topics) | $2,720.00 | $2,964.80 |

Learners who take the full course may be eligible to use their SkillsFuture Credits (SFC), subject to fulfilling the prescribed criteria and conditions.