The University of the East-Ramon Magsaysay Memorial Medical Center, Inc. medical students’ perception of the objective structured examination in pharmacology as an assessment tool

Published online: 7 May, TAPS 2019, 4(2), 14-24

DOI: https://doi.org/10.29060/TAPS.2019-4-2/OA2062

Chiara Marie Miranda Dimla, Maria Paz S. Garcia, Maria Petrina S. Zotomayor, Alfaretta Luisa T. Reyes, Ma. Angeles G. Marbella & Carolynn Pia Jerez-Bagain

Department of Pharmacology, College of Medicine, University of the East-Ramon Magsaysay Memorial Medical Center, Inc., Philippines

Abstract

The teaching of pharmacology prepares the medical sophomore to prescribe drugs on a rational basis. In small group discussions (SGDs), the evaluation of individual competence poses a challenge. Hence, the Objective Structured Examination in Pharmacology (OSEP) was initiated, to provide an additional, objective means of assessing individual performance. The OSEP is an oral, time-bound, one-on-one examination given at the end of the course, designed with a rubric for scoring. The aim of this study was to determine the students’ perceptions of the OSEP as an assessment tool. A survey was conducted on all pharmacology students of School Year 2016-17 as a post-activity evaluation for curricular improvement. After the approval of the institutional ethics review board was obtained, the data was collected retrospectively. The responses of participants who gave their informed consent were included in the study. The students’ perceptions were based on the level of agreement to sixteen statements, using a Likert Scale. The median score for each statement and the proportion with positive perception were computed. The positive perception was operationally defined based on a pre-determined median score. A total of 414 students participated in the study. The mean response rate was 99% and the median score for all statements revealed that 88%, 93% and 94% have a positive perception of the effectiveness, content and conduct of the OSEP, respectively. In conclusion, the medical students perceived the OSEP as an effective assessment tool that can provide an additional, objective means of evaluating individual performance in the course.

Keywords: Assessment Tool, Rubric, Rational Use of Medicines, Objective Structured Examination, Pharmacology, Oral Examination, Outcome-based Education, Small Group Discussion, Biomedical Science, Medical Education

Practice Highlights

- The Objective Structured Examination in Pharmacology (OSEP) is an objective assessment tool to further evaluate student performance in pharmacology.

- Congruent with OBE (outcome-based education), OSEP aligns assessment with the desired outcomes.

- The OSEP is a one-station oral exam, patterned after the Objective Structured Clinical Examination (OSCE), that tests the skill of choosing a p-drug for a paper case.

- Rubrics are developed and used for scoring, which improves the reliability and validity of the assessment.

- Almost all pharmacology students expressed a positive perception of the OSEP as an assessment tool.

I. INTRODUCTION

At the University of the East-Ramon Magsaysay Memorial Medical Center, Inc. (UERMMMCI) College of Medicine, Pharmacology is a yearlong subject, encompassing basic and clinical pharmacology, taught in the second year of the basic medical education program. It is designed to prepare medical students to prescribe drugs to patients on a rational basis. The methods of teaching used in the course are lectures, laboratory experiments, video presentations, role-playing, mini-cases and small group discussions (SGDs) using hypothetical cases. Student assessment is through written examinations, short quizzes, laboratory grades, and preceptorial grades using a rubric for small group discussions.

The SGDs are geared to develop active learning so students will better comprehend and apply the concepts that are discussed in the lectures. These give the students a chance to articulate themselves and compare their understanding of the different concepts. In addition, they hone their listening and interpersonal skills by collaborating with their peers and learn to search for, critically appraise and apply current literature. Hypothetical cases of commonly encountered clinical conditions are discussed for two hours per preceptorial session. Each paper case is developed and given prior to each SGD during which students come up with a diagnosis, enumerate therapeutic goals, and discuss non-pharmacologic, as well as pharmacologic interventions, which include prescription writing and giving patients advice. To arrive at these, the students apply the process of rational use of medicines as recommended by the World Health Organization (WHO) Guide to Good Prescribing, a process that involves decision-making based on the efficacy, safety, suitability and cost of several drug options (de Vries, Henning, Hogerzeil, & Fresle, 1994).

In view of this, assessing students’ individual competence with objectivity and fairness becomes a challenge for the faculty, more so since student performance in the SGDs is 8% of the entire grade in pharmacology.

There are several ways of assessing student competence. These may be formative, summative or both. Competence is a concept that includes not only knowledge, skills and attitude but also problem-solving skills such as critical thinking and reasoning, the ability to work as a team player, and the ability to communicate effectively in all formats. Any of these may be the focus of assessment of competence (Ilic, 2009).

The OSEP is one tool that assesses the competence of the second year student on knowledge gained during the entire year in pharmacology, skills in applying this knowledge in choosing an appropriate drug, and in using communication skills during the one-on-one oral examination.

The Objective Structured Examination in Pharmacology (OSEP) was conceptualised as an additional assessment tool patterned after the Objective Structured Clinical Examination (OSCE) although its main focus is on the application of the knowledge and skill of students in rational drug prescribing. It is conducted near the end of each school year, in which the medical student of pharmacology randomly chooses a case similar to those previously discussed in the preceptorial case sessions and answers questions intended to find out whether he/she demonstrates knowledge of pertinent drugs and is able to apply these in rational drug prescribing. This form of assessment has been used by the UERMMMCI College of Medicine, Department of Pharmacology for the past 3 years. It was patterned after the widely-used and validated OSCE to assess the clinical performance of medical students (Harden, Stevenson, Downie, & Wilson, 1975; Zayyan, 2011).

In addition, the OSEP complies with the directive of the Philippines’ Commission on Higher Education via CMO No. 18 Series of 2016 for all Philippine medical schools to shift to an outcomes-oriented approach (Commission on Higher Education, 2016). The OSEP enables a more structured and objective method of assessing whether the student has achieved the outcome expected in pharmacology, which is to prescribe drugs rationally after consideration of the totality of the patient, the state of illness, and the current management. It conforms more closely with the outcome-based curriculum since it allows the instructor to observe the student’s response to a hypothetical patient and assign a corresponding grade based on a prepared rubric. Rubrics are scoring guidelines used in outcome-based education (Reddy, 2007) which are also utilised in the OSCE. These are intended to make the assessment of students more accurate, valid, reliable and fair, thus minimising bias in grading how students apply the knowledge gained from their small group discussions of cases.

Patterned also after the OSCE, an Objective Structured Practical Examination (OSPE) in pharmacology has been described and utilised in other countries, particularly in India. The OSPE consisted of several stations during which a student was appraised on cognitive and psychomotor skills acquired during the course. Through direct observation over a specified period of time, knowledge on prescription components, dose calculation, dosage forms, drug information sources, routes of drug administration and applied pharmacology, among others, were graded (Malhotra, Shah, & Patel, 2013; Vishwakarma, Sharma, Matreja, & Giri, 2016). A number of studies have shown the OSPE to be a valid and reliable assessment tool, not only in pharmacology, but in other basic medical science courses such as anatomy and physiology (Deshpande et al., 2013; Hasan, Malik, Hamad, Khan, & Bilal, 2009).

Student feedback, therefore, is vital in determining whether the OSEP is an effective tool to further assess their performance in pharmacology. This will also provide a way to improve the teaching and grading of the second year medical students in the course.

Furthermore, it is inherent that several factors will affect the taking of the OSEP like time allotment, student preparation and environment. Hence, this study not only intends to find out the perception of the second year medical students of the OSEP as an assessment tool but also their perception of the content and conduct of the OSEP.

The general objective of the study was to determine the students’ perception of the Objective Structured Examination in Pharmacology (OSEP) as a tool to assess what they have learned in pharmacology on the rational use of medicines and to determine the students’ perception of the content and conduct of the OSEP.

A survey tool containing sixteen statements was fielded to the students. It utilised a Likert Scale to measure the level of agreement to each statement (see Appendix).

The specific objectives were to determine: 1) the response rate; 2) the percentage distribution of students’ responses categorised into four levels of agreement to the survey statements: 1-“strongly disagree”, 2-“disagree”, 3-“agree”, and 4-“strongly agree”; 3) the median scores per survey statement; and 4) the proportion of students with a positive perception of the effectiveness, content and conduct of the OSEP, wherein positive perception was operationally defined by the median score for each survey statement.

For positive statements, median scores of 3 and 4 were analysed as positive perception while for negative statements, median scores of 1 and 2 were also analysed as positive perception. Conversely, the negative perception was a median score of 1 or 2 for positive statements and a median score of 3 or 4 for negative statements.
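The classification rule described above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' analysis code; the function name `classify_perception` and the `is_negative_statement` flag are hypothetical, and only the four whole-number scale points named in the text are accepted.

```python
def classify_perception(median_score: float, is_negative_statement: bool) -> str:
    """Map a statement's median Likert score to a perception label.

    Scale: 1 = strongly disagree, 2 = disagree, 3 = agree, 4 = strongly agree.
    """
    # The text only defines the rule for medians of 1, 2, 3 or 4.
    if median_score not in (1, 2, 3, 4):
        raise ValueError("median score must be 1, 2, 3 or 4")

    agrees = median_score >= 3
    # Agreeing with a negative statement counts as a negative perception.
    if is_negative_statement:
        return "negative" if agrees else "positive"
    return "positive" if agrees else "negative"


print(classify_perception(4, is_negative_statement=False))  # positive
print(classify_perception(2, is_negative_statement=True))   # positive
print(classify_perception(3, is_negative_statement=True))   # negative
```

Keeping the statement polarity as an explicit argument makes the reversal for negative statements visible in one place, rather than recoding the scores beforehand.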

II. METHODS

The study proposal was developed and the survey tool was refined after pilot testing. The research protocol was then submitted and the approval of the Institutional Ethics Review Board was obtained before retrospectively collecting the data.

All second-year pharmacology students were eligible to be included in the study. There were no exclusion criteria. They were enjoined to participate in the survey as part of the post-activity evaluation that is integral to the college’s continuous initiative of curriculum development. Adequate orientation to the OSEP and to the research study was given beforehand. Informed consent to utilise their survey responses in the study was requested. Only the responses of those students who gave informed consent were included in this paper.

Sample size estimation was done using the EpiTools epidemiological calculators available at the internet site epitools.ausvet.com.au, which followed the formula $n = \dfrac{Z^{2}\,P(1-P)}{e^{2}}$, wherein Z = value from the standard normal distribution corresponding to the desired confidence level (Z = 1.96 for a 95% confidence level); P = expected true proportion, which was set at 50%; and e = desired precision, which was set at 5%.

The required sample size of 385 was exceeded with the total number of 414 participants using convenience sampling technique. This implies that the conclusions and recommendations derived from this study are valid and applicable to future batches.
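The required sample size can be reproduced from the stated inputs. The sketch below applies the standard formula for estimating a single proportion and rounds up to the next whole participant; the function name `sample_size` is hypothetical, and the rounding behaviour of the EpiTools calculator itself is assumed rather than verified.

```python
import math


def sample_size(z: float, p: float, e: float) -> int:
    """Smallest whole n satisfying n >= Z^2 * P * (1 - P) / e^2."""
    n = (z ** 2) * p * (1 - p) / (e ** 2)
    return math.ceil(n)


# Inputs stated in the text: Z = 1.96, P = 50%, e = 5%.
required = sample_size(z=1.96, p=0.50, e=0.05)
print(required)         # 385
print(414 >= required)  # True
```

Setting P at 50% maximises the product P(1 - P), so it yields the most conservative (largest) sample size for a given precision.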

The survey was conducted at the end of the School Year 2016-17, wherein the medical sophomores of the pharmacology course were asked to fill up and submit the survey tool. In the context of the need to conduct a post-activity evaluation, a survey of the students’ perception of the OSEP was conducted. During the orientation about the OSEP, the objectives, rationale and conduct of the said activity were relayed to the students ahead of time.

A 4-point Likert scale was utilised to generate responses on either side of the scale, eliminating neutral options, which would be difficult to analyse. While not the traditional 5-point scale as originally expounded by Rensis Likert, the 4-point scale is also widely used and accepted (Lee & Paek, 2014; Lozano, García-Cueto, & Muñiz, 2008). It was considered appropriate as the questionnaire measured attitudes toward an assessment method, not complex, variegated issues. Moreover, in the context of a post-activity evaluation, the 4-point Likert scale was a strategy to encourage responses to either agree or disagree on the items raised in each survey statement, especially since an option to write textual comments and recommendations was available at the time, although these comments are not within the scope of this research paper.

Ethics approval of the study proposal was obtained July 12, 2017, prior to retrospective data collection. The approval was given by the Institutional Ethics Review Committee (ERC) of the Research Institute for Health Sciences (RIHS) under RIHS ERC Code: 0415/C/M/17/74. The students’ participation was voluntary and informed consent was obtained.

In anticipation of securing the ethics approval for the research undertaking, the following steps were observed: 1) the required data elements to meet the research objective were integrated into the survey form, which was pre-tested on a small group of third-year medical students who had taken the OSEP in the previous year; 2) a portion to obtain informed consent was included in the survey form; 3) the objectives and conduct of the anticipated research study were explained to the students; 4) the ethical rights of the students were explained; 5) measures to uphold ethical considerations were anticipated by the researchers. Once the ethics approval was obtained, the data was collected retrospectively to describe the students’ perception of the OSEP as an additional assessment tool and to generate hypotheses of factor relationships for future research undertakings. The responses of only those students who signed the Informed Consent form were included in this paper.

Descriptive statistics were generated from the collated data.

The decision to analyse the data into positive perception and negative perception by combining the affirmative and opposing responses to each survey statement stems from the context of a post-activity evaluation, wherein the direction of developing the OSEP in the pharmacology curriculum will be guided by the proportion of students to either side of the scale, irrespective of the intensity of their affirmation or opposition.

III. RESULTS

A. Profile of Participants

The second-year medical student participants were predominantly in the 20 to 25-year-old age group (90%) and single Filipinos. The male to female ratio was 1:2.

B. Effectiveness of the OSEP

For the six survey statements intended to elicit perceptions on the effectiveness of the OSEP as an assessment tool (Table 1), the median score was 3 or 4 for each of the positive statements (Survey Statement No. 1 to 6, except 4), which indicated a positive perception.

| Survey Statement No. | Strongly Agree^a (%) | Agree^b (%) | Disagree^c (%) | Strongly Disagree^d (%) | Median Score, Interquartile Range | Positive Perception^X (%) |
|---|---|---|---|---|---|---|
| 1 | 71.5 | 25.6 | 0.5 | 1.9 | 4.00, 1 | 97.1 |
| 2 | 65.9 | 30 | 1.9 | 1.9 | 4.00, 1 | 95.9 |
| 3 | 71 | 26.3 | 0.5 | 2.2 | 4.00, 1 | 97.3 |
| 4* | 24.2 | 34.5 | 34.5 | 6.8 | 3.00, 1 | 41.3* |
| 5 | 27.1 | 60.9 | 9.7 | 2.4 | 3.00, 1 | 88 |
| 6 | 46.9 | 43.7 | 6 | 2.9 | 3.00, 1 | 90.6 |
| Column Totals | | | | | | 87.93 |

Note: Percentage positive perception, X = a+b, except in 4* where X* = c+d

Table 1. Students’ perception of the OSEP as an effective assessment tool

Survey Statement (SS) no. 4 is a negative statement and initially, a positive perception was defined as a median score of 1 and 2. However, Table 1 shows that the median score for SS no. 4 is 3 and that there is a small difference between those with positive and negative perception. The distribution of responses reveals that about 60% of the students do “agree” and “strongly agree” to SS no. 4: “The OSEP made me realise that I lack the ability to prescribe drugs on my own.” This predominant perception should be taken positively because it made the students realise that they have to identify and improve on the other factors that will increase their ability to rationally prescribe independently, the right drug for the right patient, at the right dose and at the right time. To the summary statement SS no. 6: “I can say that the OSEP is an accurate assessment tool of my ability to choose the p-drug through the process of Rational Drug Therapy.”, ninety percent gave an affirmative response.
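The "Column Totals" figure of 87.93 in Table 1 appears to be an average of the positive-perception column. As a hedged arithmetic check, the sketch below averages that column twice: once with SS no. 4 as tabulated (c+d) and once with SS no. 4 counted by agreement (a+b). The variable names are hypothetical. That the second reading matches the reported figure is an inference from the arithmetic, not something the paper states.

```python
# Positive perception (%) per statement, as reported in Table 1.
reported = {1: 97.1, 2: 95.9, 3: 97.3, 4: 41.3, 5: 88.0, 6: 90.6}

# Reading 1: SS no. 4 counted as c + d (disagreement), as in the table.
mean_as_tabulated = sum(reported.values()) / len(reported)

# Reading 2: SS no. 4 counted as a + b (agreement): 24.2 + 34.5.
reinterpreted = dict(reported)
reinterpreted[4] = round(24.2 + 34.5, 1)
mean_reinterpreted = sum(reinterpreted.values()) / len(reinterpreted)

print(round(mean_as_tabulated, 2))   # 85.03
print(round(mean_reinterpreted, 2))  # 87.93
```

Under this reading, the 88% summary figure for effectiveness is consistent with treating agreement with SS no. 4 as a positive perception, in line with the interpretation given in the paragraph above.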

C. Content of the OSEP

For the four survey statements intended to elicit perceptions on the content of the OSEP as an assessment tool (Table 2), the results show a positive perception with a median score of 4 for the three positive statements and 2 for SS no. 8 which is a negative statement.

| Survey Statement No. | Strongly Agree^a (%) | Agree^b (%) | Disagree^c (%) | Strongly Disagree^d (%) | Median Score, Interquartile Range | Positive Perception^X (%) |
|---|---|---|---|---|---|---|
| 7 | 60.9 | 35 | 1.2 | 2.2 | 4.00, 1 | 95.9 |
| 8* | 6 | 8.5 | 48.1 | 37.4 | 2.00, 1 | 85.5* |
| 9 | 52.9 | 42.3 | 1.9 | 2.9 | 4.00, 1 | 95.2 |
| 10 | 58.9 | 38.4 | 0.5 | 2.2 | 4.00, 1 | 97.3 |
| Column Totals | | | | | | 93.475 |

Note: Percentage positive perception, X = a+b, except in 8* where X* = c+d

Table 2. Students’ perception of the content of the OSEP
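The positive-perception column of Table 2 can be recomputed from the response distribution using the rule in the table note (X = a+b, or c+d for the negative statement). The following is an illustrative sketch only; `positive_perception` and the data layout are hypothetical names chosen for the example.

```python
# Response distribution (%) from Table 2, in the order:
# (strongly agree, agree, disagree, strongly disagree).
table2 = {
    7: (60.9, 35.0, 1.2, 2.2),
    8: (6.0, 8.5, 48.1, 37.4),  # negative statement
    9: (52.9, 42.3, 1.9, 2.9),
    10: (58.9, 38.4, 0.5, 2.2),
}
negative_statements = {8}


def positive_perception(row, is_negative):
    """Return a + b for positive statements, c + d for negative ones."""
    a, b, c, d = row
    return round(c + d, 1) if is_negative else round(a + b, 1)


positive = {
    ss: positive_perception(row, ss in negative_statements)
    for ss, row in table2.items()
}
mean_positive = sum(positive.values()) / len(positive)

print(positive)                 # {7: 95.9, 8: 85.5, 9: 95.2, 10: 97.3}
print(round(mean_positive, 3))  # 93.475
```

The mean of the four values reproduces the 93.475 shown in the last row of the table, which rounds to the 93% cited for the content of the OSEP.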

D. Conduct of the OSEP

For the six survey statements intended to elicit perceptions on the conduct of the OSEP as an assessment tool (Table 3), the results show a positive perception with a median score of 4 for the five positive statements and 2 for SS no. 13 which is a negative statement. The general perception was that there was ample preparation for the OSEP and that it was conducted in an objective and orderly manner with realistic expectations within the given time.

| Survey Statement No. | Strongly Agree^a (%) | Agree^b (%) | Disagree^c (%) | Strongly Disagree^d (%) | Median Score, Interquartile Range | Positive Perception^X (%) |
|---|---|---|---|---|---|---|
| 11 | 60.6 | 35.5 | 1.4 | 2.2 | 4.00, 1 | 96.1 |
| 12 | 63.8 | 30.4 | 2.9 | 2.4 | 4.00, 1 | 94.2 |
| 13* | 5.3 | 6 | 47.6 | 39.6 | 2.00, 1 | 87.2* |
| 14 | 53.1 | 42 | 1.7 | 2.7 | 4.00, 1 | 95.1 |
| 15 | 71.3 | 25.6 | 1 | 2.2 | 4.00, 1 | 96.9 |
| 16 | 71.3 | 25.6 | 0.5 | 2.4 | 4.00, 1 | 96.9 |
| Column Totals | | | | | | 94.4 |

Note: Percentage positive perception, X = a+b, except in 13* where X* = c+d

Table 3. Students’ perception of the conduct of the OSEP

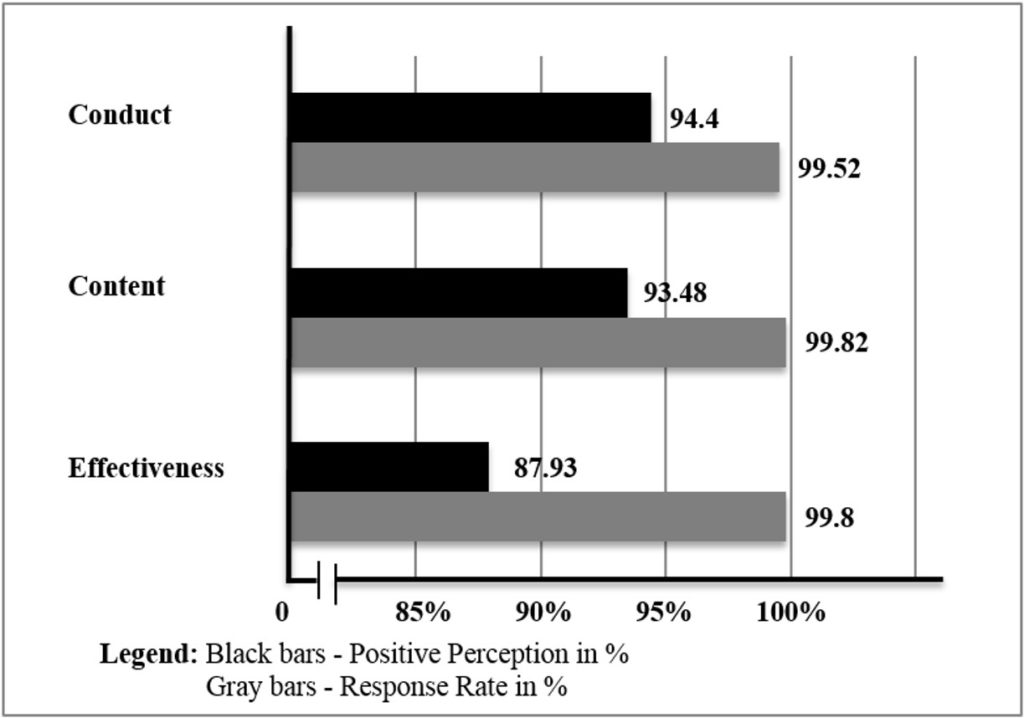

The survey had an almost 100% response rate for each category (Figure 1). The interpretation of the median scores revealed that 88% have a positive perception of the OSEP as an effective assessment tool (Table 1). As to the content and conduct of the exam activity, 93% (Table 2) and 94% (Table 3), respectively, also have a positive perception of the OSEP.

Figure 1. UERMMMCI’s pharmacology students’ perception of the OSEP


IV. DISCUSSION

In both teaching and learning, evaluation is important especially in health professions education wherein the emphasis on performance-based assessments is on testing complex learning which involves higher-order “knowledge and skills” (Peeters, Sahloff, & Stone, 2010). It behoves medical educators, therefore, to evaluate the effectiveness of any teaching or learning strategy.

Assessment, too, is an important part of the curriculum. It encompasses domains that need assessment, the tools to be used, the purpose of the assessment process, the timing of assessment, and the people who will do the assessment. It is a vital component of the learning process. It shows the progress of students, their strengths and weaknesses and guides them on which topics are important. Furthermore, it serves as a method for promotion and a measure of teaching effectiveness (Etheridge & Boursicot, 2017) and is an important factor that drives learning.

Assessment tools are characterised by validity, reliability, feasibility, cost, as well as educational impact. Not all domains of competency can be assessed, however, by a single assessment method. A variety of assessment tools must be used so that the advantage of one method can overcome the disadvantage of the other (Al-Wardy, 2010). Indeed, student assessment is a major concern among those teaching in the UERMMMCI College of Medicine; thus, many ways of improving assessment methods to make them more valid and reliable are being pursued.

The OSEP is one assessment method that is used to further evaluate the performance of the second year medical student in pharmacology towards the end of the course. This is an added tool that may allow for students to be graded more objectively and fairly. This is also more congruent with outcome-based education (OBE), an educational design that advocates for assessment methods aligned with the desired outcomes.

One good feature of the OSEP is its use of rubrics in scoring a student’s performance because this ensures reliability and validity of the assessment. Also utilised in OSCE, rubrics are criterion-based assessment tools that make the evaluation process easier. They are a “set of scoring guidelines” which are in accordance with outcome-based education (Reddy, 2007). The use of rubrics in the assessment of performance has several benefits like consistency in scores, formation of learning and improved instruction. Through rubrics, feedback, as well as self-assessment, is made easy because they make criteria and expectations explicit. Making use of rubrics ensures a more reliable scoring of performance (Jonsson & Svingby, 2007). In the conduct of the OSEP, a great majority of the students perceived that the case scenario was clearly stated, that the questions were not confusing or ambiguous and that they were arranged logically (SS no.’s 7, 8, and 9). The systematic and organised delivery of the case and guide questions was vital in the development of the rubric for scoring. For example, for the student requirement: “give your P-drug (personal drug), dose and frequency”, the faculty scoring guide (rubric) contained a list of possible answers with the most rational drug for the case bearing full points if all three components for the item are enumerated by the student. The active engagement of the department in the preparation of the OSEP case materials and the accompanying rubric adequately contributed to the positive perception of the great majority of students that the faculty preceptor facilitated the oral examination objectively (SS no. 15).

The OSCE is a valid model for the OSEP because of its objective approach in assessing components of clinical competence in a well-planned or structured way (Harden, 1988). In one study conducted, the examinees believed that in medicine, the OSCE is the most comprehensive method of clinical assessment. They consider it as easier, fair, valid and reliable. It is practical and less biased, and its outcome is not influenced by factors like personality, gender and ethnicity. These are some of the considerations that guided the development of the OSEP. Furthermore, the objective and structured approach through the use of defined rubrics will enable the learner to identify specific areas of strengths and weaknesses in his/her competence and in the pharmacology curriculum. In line with this, another study found that if students are able to identify the “What” and “How” to better prepare themselves for the course or exam, this will enable them to get higher scores in the future (Khan, Ayub, & Shah, 2016). The ability of the OSEP to bring the students to a realisation of the gaps in knowledge and the level of confidence in prescribing drugs on their own was also revealed in this study (SS no.’s 3 and 5). These perceptions were shared by a great majority of the participants.

In a study assessing the validity of OSCE questionnaires, it was found that OSCE reduced bias. The overall perception, acceptance and satisfaction of both examiners and examinees on the validity of the OSCE were encouraging (Idris, Hamza, Hafiz, & Eltayeb, 2014) and these study findings may be useful in providing direction to both instruction and learning.

The results of a study conducted among nursing students (Mahmoud & Mostafa, 2011) showed that they accepted the OSCE as a tool for assessing their clinical performance. For most, they considered it as a useful practical experience that reduced the possibility of failure.

But like other assessment tools, the OSCE also has its disadvantages. Some students look at OSCE as very stressful (Kim, 2016). Nevertheless, the importance of such feedback to develop and improve further the OSCE is being recognised (El-Nemer & Kandeel, 2009). In its implementation, there is a need for students to be trained extensively on time management and emotional stress relief (Mahmoud & Mostafa, 2011). The pharmacology students at the time of the OSEP were also observed by the faculty examiners to be anxious and stressed. Despite this, the general perception of the students was that the examination was conducted smoothly, in a conducive environment, and that the task expected of them was realistic (SS no.’s 16, 14 and 13).

Several studies on the OSPE, as applied in pharmacology, have likewise supported its utility as a teaching and examination tool (Deshpande et al., 2013). Medical students in universities in India, Nepal and Bangladesh have positively accepted OSPE as a form of examination. It is being recommended as an additional form of assessment to complement more traditional methods.

In contrast to the OSPE, the OSEP performed in this institution is a one-station exam that specifically aims to assess an individual student on the knowledge of core drugs used in common illnesses and the skill in applying the WHO-prescribed process of choosing a p-drug for a hypothetical case. The importance of performing this is that it allows the examiner to determine how much the student “practices” the desired outcome of choosing and prescribing drugs rationally.

The finding that students have a positive perception of the OSEP as an assessment tool, including its content and conduct, is a valuable indicator of its utility, acceptability and validity as an additional means of measuring individual performance. This is supported by the findings in one study that mentioned the importance of student participation in developing newer assessment tools in the curriculum of medicine because it can influence its direction and development, as well as faculty teaching. Moreover, assessment formats that are considered valid, authentic and transparent become more acceptable to the students (Pierre, Wierenga, Barton, Branday, & Christie, 2004).

In another study, the perceptions and experiences of students show that the OSCE is an important and accepted tool for assessment. However, in a process of curriculum review, an assessment method like that of the OSCE should be assessed regularly. It is, therefore, necessary that the OSCE should be planned and organised carefully (Small, Pretorius, Walters, Ackerman, & Tshifugula, 2013). These concepts are also applicable to the implementation and development of the OSEP.

The general positive perception that the OSEP bears the basic qualities of an effective assessment tool was revealed in this study. The OSEP was regarded by 90% of the students as an accurate evaluation tool that can properly assess their ability to choose the p-drug through the process of Rational Drug Therapy (SS no. 6). Among the factors that probably contributed to the positive perception and acceptance of the OSEP are the adequate preparations made by both the faculty preceptors and the students in their roles as examiners and examinees respectively, coupled with the timely and practical orientation module that was held to communicate the rationale, objectives, content and conduct of the OSEP.

V. CONCLUSION

In conclusion, the medical students perceived the OSEP as an effective assessment tool in pharmacology with a positive perception of its content and conduct. This can pave the way for the development of additional, objective examination tools within the course and in other biomedical science subjects. Continuous efforts to improve the validity and positive perception of assessment tools must be institutionalised. Providing feedback to each student after their OSEP may be considered to further enhance learning, while balancing this against limitations in time and the probability of exam leakage. Furthermore, related studies, such as exploring the relationship between the final grade and the OSEP, are recommended because both are indicative of individual performance in the course.

Notes on Contributors

Chiara Marie Miranda Dimla, M.D., M.S.P.H., DPPS is an Associate Professor in the Department of Pharmacology, College of Medicine, UERMMMCI, Philippines with a Master of Science in Public Health, UERMMMCI Graduate School, Philippines.

Maria Paz S. Garcia, M.D. is an Associate Professor and Present Head of the Department of Pharmacology, College of Medicine, UERMMMCI, Philippines.

Maria Petrina S. Zotomayor, M.D. is a Professor and Past Chair of the Department of Pharmacology, College of Medicine, UERMMMCI, Philippines.

Alfaretta Luisa T. Reyes, M.D. is a Professor Emeritus and Past Dean of the College of Medicine, UERMMMCI, Philippines.

Ma. Angeles G. Marbella, M.D. is an Associate Professor and Past Head of Department of Pharmacology, College of Medicine, UERMMMCI, Philippines.

Carolynn Pia Jerez-Bagain, M.D. is an Assistant Professor of the Department of Pharmacology, College of Medicine, UERMMMCI, Philippines and has finished coursework of Master in Health Science Education in the UERMMMCI Graduate School, Philippines.

The primary authors are C. M. M. Dimla, M. P. S. Garcia and M. P. S. Zotomayor. The co-authors are A. L. T. Reyes, M. A. G. Marbella and C. P. Jerez-Bagain.

All were involved in conceptualising and writing the research proposal. All participated in its implementation, including data collection and analysis of results. While the primary authors did the final analysis and discussion of the results, all participated in writing and approving the final manuscript.

Ethical Approval

This study was approved by the Institutional Ethics Review Committee (ERC) of the Research Institute for Health Sciences (RIHS) under RIHS ERC Code: 0415/C/M/17/74.

Acknowledgements

The authors are grateful for the encouragement and support of the UERMMMCI community especially to the following:

- Administration;

- Research Institute for Health Sciences;

- Office of the Dean, College of Medicine;

- Fellow faculty members and support staff of the Department of Pharmacology, for their contributions in the conceptualisation and implementation of the OSEP, as well as in the data collection phase of this study; and

- Medical sophomore students of Pharmacology who are our inspiration.

Likewise, heartfelt thanks to our families, for their unwavering faith and unconditional love.

Funding

No funding is involved in this paper.

Declaration of Interest

All authors declared no conflict of interest in this paper.

References

Al-Wardy, N. (2010). Assessment methods in undergraduate medical education. Sultan Qaboos University Medical Journal, 10(2), 203-209.

Commission on Higher Education. (2016). Policies, standards and guidelines for Doctor of Medicine (M.D.) Program. Retrieved from Office of the President of the Philippines https://ched.gov.ph/cmo-18-s-2016-2

Deshpande, R. P., Motghare, V. M., Padwal, S. L., Bhamare, C. G., Rathod, S. S., & Pore, R. R. (2013). A review of objective structured practical examination (OSPE) in pharmacology at a rural medical college. International Journal of Basic & Clinical Pharmacology, 2(5), 629-633. https://doi.org/10.5455/2319-2003.ijbcp20131021

de Vries, T. P. G. M., Henning, R. H., Hogerzeil, H. V., & Fresle, D. A. (1994). Guide to good prescribing: A practical manual. Retrieved from World Health Organization Website: https://apps.who.int/iris/handle/10665/59001

El-Nemer, A., & Kandeel, N. (2009). Using OSCE as an assessment tool for clinical skills: Nursing students’ feedback. Australian Journal of Basic and Applied Sciences, 3(3), 2465-2472. Retrieved from http://ajbasweb.com/old/ajbas/2009/2465-2472.pdf

Etheridge, L., & Boursicot, K. (2017). Performance and workplace assessment. In J. A. Dent, R. M. Harden, & D. Hunt (Eds.), A practical guide for medical teachers (5th ed., pp. 267-273). Edinburgh, Scotland: Elsevier.

Harden, R. M. (1988). What is an OSCE? Medical Teacher, 10(1), 19-22. https://doi.org/10.3109/01421598809019321

Harden, R. M., Stevenson, M., Downie, W. W., & Wilson, G. M. (1975). Assessment of clinical competence using objective structured examination. British Medical Journal, 1(5955), 447-451. Retrieved from https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1672423

Hasan, S., Malik, S., Hamad, A., Khan, H., & Bilal, M. (2009). Conventional/traditional practical examination (CPE/TDPE) versus objective structured practical evaluation (OSPE)/semi objective structured practical evaluation (SOSPE). Pakistan Journal of Physiology, 5(1), 58-64.

Idris, S. A., Hamza, A. A., Hafiz, M. M., & Eltayeb A., M. (2014). Teachers’ and students’ perceptions in surgical OSCE exam: A pilot study. Open Science Journal of Education, 2(1), 15-19. Retrieved from http://www.openscienceonline.com/journal/archive2?journalId=733&paperId=273

Ilic, D. (2009). Assessing competency in evidence based practice: Strengths and limitations of current tools in practice. BioMed Central Medical Education, 9(53). https://doi.org/10.1186/1472-6920-9-53

Jonsson, A., & Svingby, G. (2007). The use of scoring rubrics: Reliability, validity and educational consequences. Educational Research Review, 2(2), 130-144. https://doi.org/10.1016/j.edurev.2007.05.002

Khan, A., Ayub, M., & Shah, Z. (2016). An audit of the medical students’ perceptions regarding objective structured clinical examination. Education Research International, 2016. https://doi.org/10.1155/2016/4806398

Kim, K. (2016). Factors associated with medical student test anxiety in objective structured clinical examinations: A preliminary study. International Journal of Medical Education, 7, 424-427. https://doi.org/10.5116/ijme.5845.caec

Lee, J., & Paek, I. (2014). In search of the optimal number of response categories in a rating scale. Journal of Psychoeducational Assessment, 32(7), 663-673. https://doi.org/10.1177/0734282914522200

Lozano, L. M., García-Cueto, E., & Muñiz, J. (2008). Effect of the number of response categories on the reliability and validity of rating scales. Methodology, 4(2), 73-79. https://doi.org/10.1027/1614-2241.4.2.73

Mahmoud, G. A., & Mostafa, M. F. (2011). The Egyptian nursing student’s perceptive view about an objective structured clinical examination (OSCE). Journal of American Science, 7(4), 730-738. Retrieved from http://www.jofamericanscience.org/journals/am-sci/am0704/102_5258am0704_730_738.pdf

Malhotra, S. D., Shah, K. N., & Patel, V. J. (2013). Objective structured practical examination as a tool for the formative assessment of practical skills of undergraduate students in pharmacology. Journal of Education and Health Promotion, 2, 53. https://doi.org/10.4103/2277-9531.119040

Peeters, M. J., Sahloff, E. G., & Stone, G. E. (2010). A standardized rubric to evaluate student presentations. American Journal of Pharmaceutical Education, 74(9), 171. Retrieved from http://www.ncbi.nlm.nih.gov/pmc/articles/PMC2996761

Pierre, R. B., Wierenga, A. R., Barton, M. A., Branday, J. M., & Christie, C. D. C. (2004). Student evaluation of an OSCE in paediatrics at the University of the West Indies, Jamaica. BioMed Central Medical Education, 4, 22. https://doi.org/10.1186/1472-6920-4-22

Reddy, M. Y. (2007). Effect of rubrics on enhancement of student learning. The Journal of Doctoral Research in Education (Educate~), 7(1), 3-17. Retrieved from http://educatejournal.org/index.php/educate/article/viewFile/117/148

Small, L. F., Pretorius, L., Walters, A., Ackerman, M., & Tshifugula, P. (2013). Students’ perceptions regarding the objective, structured, clinical evaluation as an assessment approach. Health SA Gesondheid – Journal of Interdisciplinary Health Sciences, 18(1), 127-134. https://doi.org/10.4102/hsag.v18i1.629

Vishwakarma, K., Sharma, M., Matreja, P. S., & Giri, V. P. (2016). Introducing objective structured practical examination as a method of learning and evaluation for undergraduate pharmacology. Indian Journal of Pharmacology, 48(Suppl 1), S47-S51. https://doi.org/10.4103/0253-7613.193317

Zayyan, M. (2011). Objective structured clinical examination: The assessment of choice. Oman Medical Journal, 26(4), 219-222. https://dx.doi.org/10.5001/omj.2011.55

*Chiara Marie Miranda Dimla

64 Aurora Blvd, Doña Imelda,

Quezon City, Philippines 1113,

University of the East-Ramon Magsaysay Memorial Medical Center

Telephone: +63 2715 8163 / +63 2713 3302

Email: cmdimla@uerm.edu.ph / chiaramdimla@gmail.com
