Micro CEX vs Mini CEX: Less can be more

Submitted: 28 January 2023

Accepted: 17 August 2023

Published online: 2 January, TAPS 2024, 9(1), 3-19

https://doi.org/10.29060/TAPS.2024-9-1/OA2947

Thun How Ong1, Hwee Kuan Ong2, Adrian Chan1, Dujeepa D. Samarasekera3 & Cees Van der Vleuten4

1Department of Respiratory and Critical Care Medicine, Singapore General Hospital, Duke-NUS Medical School, Singapore; 2Department of Physiotherapy, Singapore General Hospital; 3Centre for Medical Education, Yong Loo Lin School of Medicine, National University of Singapore, Singapore; 4Department of Educational Development and Research, Maastricht University, Maastricht, The Netherlands

Abstract

Introduction: The mini-Clinical Evaluation Exercise (CEX) is meant to provide on-the-spot feedback to trainees. We hypothesised that an ultra-short assessment tool with just one global entrustment scale (micro-CEX) would encourage faculty to provide better feedback than the traditional multiple-domain mini-CEX.

Methods: Fifty-nine pairs of faculty and trainees from internal medicine completed both the 7-item mini-CEX and a micro-CEX and were surveyed regarding their perceptions of the two forms. The word count and specificity of the feedback were assessed. Participants were subsequently interviewed to elicit their views on factors affecting the utility of the CEX.

Results: The quantity and quality of feedback increased with the micro-CEX compared with the mini-CEX. Word count increased from 9.5 to 17.5 words, and specificity increased from 1.6 to 2.3 on a 4-point scale (p < 0.05 in both cases). Faculty and residents both felt the micro-CEX provided better assessment and feedback. The micro-CEX, but not the mini-CEX, was able to discriminate between residents in different years of training. The mini-CEX showed a strong halo effect between different domains of scoring. In interviews, ease of administration, immediacy of assessment, clarity of purpose, structuring of desired feedback, assessor-trainee pairing and alignment with trainee learning goals were identified as important features for optimising the utility of the CEX, whether mini or micro.

Conclusions: Simplifying the assessment component of the CEX frees faculty to concentrate on feedback and this improves both quantity and quality of feedback. How the form is administered on the ground impacts its practical utility.

Keywords: Workplace Based Assessment, Mini-CEX, Micro-CEX, Feedback, Assessment

Practice Highlights

- Simplifying the assessment component of the CEX frees faculty to concentrate on feedback.

- A simpler form can result in better and more feedback.

- Making it easy for faculty to use the form is important and increases its utility in providing feedback and assessment.

I. INTRODUCTION

The mini-CEX is one of the most widely used workplace-based assessment (WBA) tools and is supported by a large body of theoretical and empirical evidence showing that, when used with repeated sampling, it is both a valid assessment tool and an effective educational tool for giving feedback to the trainee (Hawkins et al., 2010; Norcini et al., 2003). In practice, however, the educational value of the mini-CEX, as measured chiefly by trainee and faculty perceptions and satisfaction, has varied considerably (Lorwald et al., 2018). Lorwald et al. described the factors affecting this educational value and categorised them into context, user, implementation and outcome factors (Lorwald et al., 2018).

Context refers to the situation in which the mini-CEX is carried out and to factors that affect its actual use, such as the time needed to conduct the mini-CEX or the usability of the tool. Time constraints on the part of both residents and assessors are an especially frequent issue across multiple studies (Bindal et al., 2011; Brazil et al., 2012; Castanelli et al., 2016; Lörwald et al., 2018; Morris et al., 2006; Nair et al., 2008; Yanting et al., 2016). The mini-CEX was conceived as a 30-minute exercise of directly observed assessment, with 6 or 7 domains that faculty are expected to assess (Norcini et al., 2003). In a busy clinical environment, however, what actually occurs is often a brief clinical encounter of 10-15 minutes or even less, in which only a few of the mini-CEX domains are assessed (Berendonk et al., 2018).

User factors refer to trainee and faculty knowledge of the mini-CEX and their perceptions of its use. Studies have found that the mini-CEX is frequently regarded as a tick-box exercise (Bindal et al., 2011; Sabey & Harris, 2011). Assessors’ and trainees’ training and attitudes, or unfamiliarity with WBA tools, also negatively affect the educational value of the mini-CEX (Lörwald et al., 2018). Reports have shown that educating faculty on the formative intent of the mini-CEX can improve the feedback provided (Liao et al., 2013).

Implementation factors refer to how the mini-CEX is actually executed on the ground. Some studies have reported that the mini-CEX often occurs without actual direct observation (Lörwald et al., 2018) or without feedback being provided (Weston & Smith, 2014). Implementation in turn affects outcome, which refers to the trainees’ appraisal of the feedback received (Lörwald et al., 2018).

One way of improving the educational value of the mini-CEX, then, might be to improve the context of its use by redesigning it to better fit the realities of the clinical workplace. In different clinical encounters, some domains of performance are more easily and obviously observed and assessed than others (Crossley & Jolly, 2012). Reducing the number of dimensions assessors are asked to rate has been shown to decrease measured cognitive load and improve interobserver reliability (Tavares et al., 2016). Rating scales that align with clinicians’ cognitive schemas have also been shown to perform better; for instance, scales that ask clinician assessors about a trainee’s ability to practise safely with decreasing levels of supervision (i.e. entrustability) showed better discrimination and higher reliability (Weller et al., 2014). Compared with multidimensional rating scales, global rating scales have shown greater reliability and validity in assessing candidates in OSCE examinations (Regehr et al., 1998), in assessing technical competence in procedures (Walzak et al., 2015) and in simulation-based training (Ilgen et al., 2015).

We therefore proposed replacing the multiple domains with a single rating asking faculty what level of supervision the resident would require in performing a similar task, i.e. a global entrustment scale. The shorter assessment task should refocus faculty on the feedback component while still retaining the ability to identify trainee progression. One such form has been proposed by Kogan and Holmboe (2018); we designated it the micro-CEX.

We hypothesised that these changes would improve the usability (“context” as described by Lorwald et al.) and hence improve the educational value of the assessment, measured in this study by the specificity and quality of the feedback given by faculty.

Our study therefore aimed to determine whether the shorter micro-CEX can provide better feedback than the usual mini-CEX. We also sought to find out, from the perspective of the end-users, what other adjustments to the implementation and design of the mini- or micro-CEX could improve its acceptability, educational value and validity.

The study focussed on the following questions:

Does the micro-CEX stimulate faculty to provide more specific and actionable feedback compared to the mini-CEX?

Can the micro-CEX provide discriminatory assessment for residents across different years of practice?

What are the perceptions of the faculty and residents regarding the factors affecting utility of the assessment instrument in providing feedback and assessment?

II. METHODS

A. Setting and Subjects

The study was conducted in the Division of Internal Medicine of a 1,700-bed hospital in Singapore between September and December 2018. All faculty and residents rotating through internal medicine were invited by e-mail to participate, and faculty and residents who agreed were paired up. In usual practice, residents must complete at least 2 mini-CEX covering standard inpatient or outpatient encounters during each three-month internal medicine posting, so both residents and faculty were familiar with the usual mini-CEX.

B. Design

To evaluate any participant reactivity affecting the CEX data (i.e. a Hawthorne effect) (Paradis & Sutkin, 2017), a baseline sample of 30 usual mini-CEX forms completed in the 3 months before the study (June to August 2018) was randomly selected and de-identified. The quantity and specificity of feedback in these forms were evaluated as detailed below.

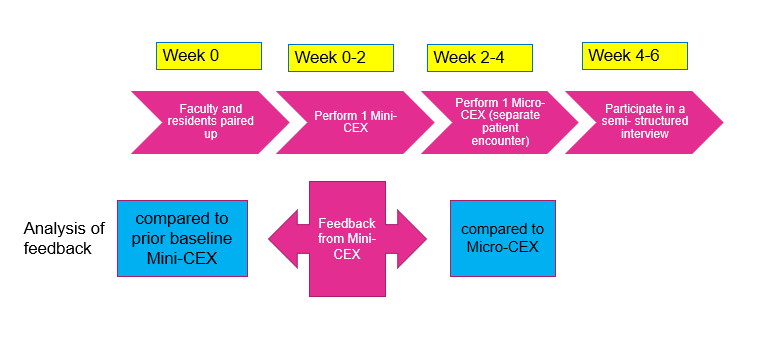

For the study itself, faculty and residents used the usual mini-CEX for the first assessment in the first 2 weeks of the month, followed by a second assessment using the micro-CEX in the next 2 weeks. This sequence was chosen because performing the micro-CEX first might affect how the subsequent mini-CEX was performed. Cases chosen for the mini-CEX and micro-CEX were inpatient or outpatient internal medicine encounters; faculty were instructed only to choose typical cases of average difficulty, with no restrictions on the specific cases chosen.

Faculty and residents completed an anonymised survey on their experiences at the end of the study and were invited to take part in semi-structured group interviews to elicit their views on which aspects of the mini-CEX exercise influenced feedback and assessment (Appendix 2). Both faculty and residents were informed that the survey and interviews were part of this study, and participation in either was taken as implied consent. The workflow of the study is shown in Figure 1.

Figure 1. Study workflow

C. Instruments

The mini-CEX used in the program is based on the one described by Norcini et al. (2003). This form was hosted online (New Innovations, Ohio, USA) and could be accessed by faculty from their mobile devices or their email. The micro-CEX was hosted on an open-source online survey tool (LimeSurvey GmbH, Hamburg, Germany) and could also be accessed from mobile devices. Copies of both forms are available in Appendix 1.

D. Analysis of Feedback

Feedback was assessed first by word count, then by grading its specificity on a three-point scale (Pelgrim et al., 2012) (Appendix 3), and finally by the presence or absence of an actionable plan for improvement. To avoid rater bias, the assessor grading specificity was blinded to the source of the feedback (mini- or micro-CEX). The first 20 forms were graded independently by two assessors (OTH and AC) using these criteria, achieving a kappa coefficient of 0.852; all subsequent forms were graded by OTH, with any uncertainty resolved by discussion between AC and OTH. Word count and specificity, as well as faculty and resident preferences between the forms, were analysed using paired-samples t-tests. The proportion of feedback containing an actionable plan was compared using a chi-square test.
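The kappa coefficient reported above measures agreement between the two graders beyond what chance alone would produce. A minimal sketch of unweighted Cohen's kappa follows. The function name and the six example grades are hypothetical illustrations, not study data, and the paper does not state whether a weighted or unweighted kappa was used, so the unweighted form is an assumption.

```python
from collections import Counter


def cohens_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Unweighted Cohen's kappa for two raters grading the same items.

    Hypothetical helper; assumes unweighted agreement (exact grade matches only).
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must grade the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the identical grade.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement expected from each rater's marginal grade frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[g] * counts_b[g] for g in counts_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both raters used one identical grade throughout (kappa undefined)
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical specificity grades for six feedback comments (not study data).
grader_1 = [0, 1, 2, 3, 1, 2]
grader_2 = [0, 1, 2, 3, 1, 1]
print(round(cohens_kappa(grader_1, grader_2), 3))  # 0.769
```

Here the graders agree on 5 of 6 comments, but because some agreement is expected by chance, kappa (about 0.77) is lower than the raw 83% agreement.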

E. Semi-structured Interviews

Faculty and residents were interviewed separately. Twenty-one residents and six faculty members were interviewed over eight sessions lasting 20 to 30 minutes each. Interviews were conducted by the investigator (OTH). The interviews were audiotaped and transcribed verbatim. Data collection ended when saturation was reached. Member checking of the transcripts was carried out.

Inductive template analysis, as described by King (2012), was used to analyse the interview transcripts. Two transcripts were studied and coded separately by the investigator (OTH) and a collaborator (OHK). A priori themes of assessment, feedback and administration were used to structure the data so that the research question could be answered. Codes were discussed between OTH and OHK until consensus was reached, and a codebook was created. The subsequent transcripts were coded by OTH. OHK, AC and OTH then met to discuss the categories and emerging themes. NVivo 12 was used to store and manage the codes and transcripts. Results were triangulated with data from the quantitative surveys.

For all quantitative data, an alpha of 0.05 was used as the cut-off for significance. IBM SPSS 25 (IBM SPSS Statistics for Windows, Version 25.0. Armonk, NY) was used for calculations.

III. RESULTS

The data that support the findings of this study are openly available in the Figshare repository at https://doi.org/10.6084/m9.figshare.21862068.v1 (Ong, 2023).

There were 33 internal medicine residents during the study period, and 32 (97%) participated in the study; one resident declined to participate. They were paired with 39 different faculty members over the three months of the study. Fifty-nine unique faculty-resident pairs completed both sets of CEX. Of the residents, 30.5% were in their first year of residency (R1), 47.9% in their second year (R2) and 22.0% in their third year (R3). The residents completed an average of 1.96 pairs of mini- and micro-CEX each. Faculty estimated the time taken to complete the assessments as 11.33 +/- 6.56 min for the mini-CEX vs 9.42 +/- 5.51 min for the micro-CEX (p = 0.02).

A. Evaluation of Feedback in the Mini-CEX: Baseline and During Study

Thirty de-identified mini-CEX forms were randomly extracted from the 3 months preceding the start of the study. These served as a baseline control and were compared with the feedback from the first, traditional mini-CEX done during the study (Table 1). During the study, faculty using the same mini-CEX form provided feedback that was more specific. The proportion of actionable feedback was much higher in the mini-CEX done as part of the study than in the baseline controls (Table 1: 3.3% controls vs 28.8% study mini-CEX, p = 0.005).

| | Prior baseline control mini-CEX (mean +/- SD) | Study mini-CEX (mean +/- SD) | p value (baseline vs study mini-CEX) | Mini-CEX (mean +/- SD) | Micro-CEX (mean +/- SD) | p value (mini vs micro-CEX) |
|---|---|---|---|---|---|---|
| Q1: In which areas did the resident do well? | | | | | | |
| Word count | 12.1 +/- 14.1 | 9.5 +/- 7.0 | 0.93 | 9.5 +/- 7.0 | 17.5 +/- 10.3 | <0.001 |
| Specificity* | 1.2 +/- 1.0 | 1.6 +/- 0.90 | 0.08 | 1.6 +/- 0.9 | 2.3 +/- 0.7 | <0.001 |
| Q2/3: Areas needing improvement / recommendations for future improvement | | | | | | |
| Word count | 3.8 +/- 6.8 | 5.7 +/- 7.3 | 0.06 | 5.7 +/- 7.3 | 19.3 +/- 15.1 | <0.001 |
| Specificity* | 0.5 +/- 0.7 | 1.1 +/- 1.1 | 0.01 | 1.1 +/- 1.0 | 1.8 +/- 0.9 | <0.001 |
| Actionable | 1/30 (3.3%) | 17/59 (28.8%) | 0.005 | 17/59 (28.8%) | 18/59 (30.5%) | 0.84 |

Table 1. Quality and quantity of feedback in prior baseline control vs study mini-CEX, and in mini vs Micro-CEX

B. Evaluation of Feedback in the Micro and Mini-CEX During Study

Table 1 compares the feedback given in the mini- and micro-CEX during the study. Feedback word count was higher, and feedback was more specific, with the micro-CEX than with the contemporaneous mini-CEX done by the same pair. However, there was no difference between the two forms in the proportion of actionable feedback.
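The chi-square comparisons of actionable feedback in Table 1 can be reproduced from the reported counts. The sketch below assumes a Pearson chi-square test without continuity correction, since the Methods do not say which variant was used; the helper name is hypothetical. Like the reported analysis, it treats the two groups as independent, even though the mini- and micro-CEX were completed by the same pairs; a paired alternative such as McNemar's test would need per-pair data that are not given here.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square test (no continuity correction) for the 2x2 table
    [[a, b], [c, d]]; returns (statistic, p value) with 1 degree of freedom.

    Hypothetical helper; the absence of Yates' correction is an assumption.
    """
    n = a + b + c + d
    row1, row2, col1, col2 = a + b, c + d, a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be non-zero")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, chi2 is a squared standard normal,
    # so P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Actionable vs non-actionable counts taken from Table 1.
baseline = chi_square_2x2(1, 29, 17, 42)     # baseline 1/30 vs study mini-CEX 17/59
mini_micro = chi_square_2x2(17, 42, 18, 41)  # study mini-CEX 17/59 vs micro-CEX 18/59
print(f"baseline vs study mini-CEX: chi2={baseline[0]:.2f}, p={baseline[1]:.3f}")
print(f"mini-CEX vs micro-CEX: chi2={mini_micro[0]:.2f}, p={mini_micro[1]:.2f}")
```

Run as written, it prints a statistic of about 8.00 with p = 0.005 for the baseline comparison and about 0.04 with p = 0.84 for the mini- vs micro-CEX comparison, in line with the p values in Table 1.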

C. Discrimination Between Residents in Different Years of Training

The micro-CEX was able to show progression across the years of training, with a significant rise in residents’ mean score. On a 4-point scale, the mean entrustment score increased from 2.45 in the first year of training to 3.30 by the third year (p < 0.05; Figure 2).

Figure 2. Level of entrustment vs Year of training

The correlation between residents’ year of training and their grading in the mini-CEX domains was moderate (kappa 0.39 to 0.60). There was a high correlation between all seven questions in the mini-CEX (kappa 0.7 to 0.8; see Appendix 4), implying that a resident’s score in one domain heavily influenced the scores in other domains, i.e. a halo effect.

D. Faculty and Resident Preferences

Twenty-one of the 32 participating residents (65% response rate) and 25 of the 39 participating faculty (64.9% response rate) responded to the survey. Faculty and residents felt that the micro-CEX had better value for both assessment and feedback than the mini-CEX (Table 2).

| | Mini-CEX (mean +/- SD) | Micro-CEX (mean +/- SD) | p value | t statistic | Cohen’s d |
|---|---|---|---|---|---|
| Usefulness for assessment | | | | | |
| Faculty | 6.04 +/- 1.34 | 6.57 +/- 0.95 | 0.04 | 2.23 | 0.46 |
| Residents | 6.00 +/- 1.62 | 6.9 +/- 0.91 | 0.03 | -2.31 | 0.52 |
| Usefulness for feedback | | | | | |
| Faculty | 6.00 +/- 1.35 | 6.87 +/- 1.10 | 0.01 | -3.07 | 0.64 |
| Residents | 5.43 +/- 1.40 | 6.81 +/- 1.57 | 0.09 | -3.82 | 0.83 |

Table 2. Perceptions of faculty and residents regarding usefulness of mini and micro-CEX for assessment and feedback

*Scoring is on a 9-point Likert scale, from 1 = not useful at all to 9 = very useful.
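The comparisons in Table 2 rest on the paired-samples t-test described in the Methods. A minimal sketch of the t statistic and an effect size for paired ratings follows. It assumes Cohen's d is computed as d_z (the mean paired difference divided by the SD of the differences), which the paper does not specify; the function name and the five example ratings are hypothetical, not survey data.

```python
import math
import statistics


def paired_t_and_dz(before: list[float], after: list[float]) -> tuple[float, float]:
    """Return (t statistic, Cohen's d_z) for paired ratings.

    Hypothetical helper; d_z = mean(diff) / SD(diff) is an assumed effect-size
    variant, since the paper does not state which Cohen's d it reports.
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need at least two complete pairs")
    diffs = [b - a for a, b in zip(before, after)]  # after minus before, per pair
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("all paired differences are identical; t is undefined")
    d_z = mean_diff / sd_diff
    t_stat = d_z * math.sqrt(len(diffs))  # t = mean / (SD / sqrt(n)) = d_z * sqrt(n)
    return t_stat, d_z


# Hypothetical 9-point usefulness ratings from five respondents (not survey data).
mini_ratings = [5, 6, 7, 5, 6]
micro_ratings = [6, 7, 9, 6, 8]
t, d = paired_t_and_dz(mini_ratings, micro_ratings)
print(f"t = {t:.2f}, d_z = {d:.2f}")  # t = 5.72, d_z = 2.56
```

If SciPy is available, `scipy.stats.ttest_rel` returns the same t statistic (with its sign depending on argument order) together with a two-sided p value from the t distribution with n - 1 degrees of freedom.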

E. Qualitative Data

Qualitative data from the semi-structured interviews were analysed to better understand which features of the micro-CEX drove this preference and to identify other helpful features of the CEX. The interview data were distilled into six themes (Table 3):

1) Make it easy: A recurrent theme was that the micro-CEX was easier to use and that the short form could be completed at the bedside on resident or faculty mobile devices.

2) Immediacy is important: Faculty and residents both prized the ability to integrate the assessment into their daily routines, and this immediacy was very important in enhancing the value of the feedback.

3) Tell us what it’s for: Faculty and residents both expressed that the intended purpose of the forms needed to be explicit. Uncertainty in purpose of the form resulted in a perception of redundancy with the other assessments, and confusion about summative vs formative intent of the assessment inhibited honest feedback and assessment.

4) Structure the form so we know what you want: Structuring the form with specific areas to remind them to provide narrative feedback, and what specific areas to provide feedback in, was useful. Faculty and residents both felt that the micro-CEX had better learning value than the mini-CEX.

5) Choice of assessor matters depending on objective of the tool: Faculty and residents agreed that assessments were frequently affected by the prior experiences between the two, impacting the objectivity of assessments via both the micro- and mini-CEX. Prior engagement with the resident facilitated provision of feedback. However, for assessment purposes, residents felt that a faculty member with no prior knowledge of the trainee might be more objective.

6) Align assessment with learning goals: Many of the residents were preparing for their postgraduate medical examinations, and they found the mini-CEX exercise especially useful if it was conducted in a way similar to their examinations (the Royal College of Physicians PACES exam) – in other words, the utility of the exercise increased greatly when the assessment was aligned with the residents’ own learning goals.

1. Make it easy to do

- The micro-CEX was “more succinct. So, it’s, it’s much easier to administer” -F
- “If it’s a shorter form, even though the quantity may be less maybe the fact that the quality of whatever feedback we’re given is better because they’re really giving the one or two points that really stood out to them that we need to improve on or the one or two things that we really did well” -R
- (Regarding the mini-CEX) “The fact that it’s more detailed actually maybe reduces the quality of the feedback because … if you ask me for additional remarks for every single domain, then they just put nil, nil, nil because there’s no time” -F

2. Immediacy is important

- “Memory is also fresh because you’ve just done the case and so I think the learning value’s a lot better” -R
- “I think looking at it in terms of like a learning experience also, um, when we have that micro-CEX on the spot, ah, not only can we address, like all the points immediately, like what the resident should, um, but at the same time, ah, you can kinda go through certain topics at the same setting as well” -F

3. What is this for

- “I think we need clear goals as to why we do these, rather than to simply check boxes.” -R
- “The form should come with what is the expectation of this, uh, assessment, whether it’s for assessing, or it’s for a feedback, or it’s …. whether this person can work as a HO. I mean, the intention will drive how I assess” -R
- “We have a lot of forms, the 360 and the mini-cex and all. Sometimes maybe I personally don’t really see what the difference is sometimes or how it can help to change assessment. I think it’s just extra admin for everyone and everyone just gets fed up doing it” -R
- “I think the assessor, sometimes they’re very nice, they know it affects your, your grading or your, your overall performance in the residency, so they try not to be too strict” -R

4. Be specific about what you want to know

- The micro-CEX had “I think several features currently that are really quite useful. Number one is that there is the mandatory open-ended field, um, for areas that need improvement and areas that need to be reinforced” -F
- “I find the comments, uh, quite useful. Maybe not the grades itself, because usually people would just give, like, mod- middle-grade. But, the written comments are actually quite useful” -R

5. Choice of assessor matters depending on objective for the tool

- “It’s quite easy for me to, to, to, remember each of them and give them dedicated feedback” -F
- “It should be someone that you don’t really know, but maybe in the same department. So, that it can be like, really, like a proper case scenario, yeah. Instead of grading you based on what their other impressions are” -R

6. Align assessment with learning goals

- “So I had this one particular case, that was a very good PACES case, that I clerked in the morning, and, we impromptu made it into a mini-CEX kind of session and, and we went in quite in depth into the discussion, and PACES that sort of stuff, and I thought that was very useful.” -R

Table 3. Themes with supporting quotations

*1 PACES = Membership of the Royal College of Physicians clinical examination, a required exit certification for the residents.

IV. DISCUSSION

The most striking result from this study is that, even without specific faculty training or other intervention, simplifying the assessment task alone led faculty to write longer and more specific feedback. Faculty and residents also perceived the feedback to be better. By simplifying the assessment, the faculty’s attention was shifted from grading the resident in multiple domains toward qualitatively identifying good and bad points in the encounter and providing feedback to the residents.

The proportion of actionable feedback in the two forms, however, did not differ. This is perhaps because there was no specific faculty training for the study, as we felt that additional training would itself affect the results. Specific faculty training may be needed to improve this aspect.

A Hawthorne effect was observed in the study (Adair, 1984). The proportion of actionable feedback was much higher in the mini-CEX done as part of the study than in the baseline controls (Table 1: 3.3% controls vs 28.8% study mini-CEX, p = 0.005). Specificity also tended to increase, significantly so for areas needing improvement (Table 1). Despite this, we were still able to show that the micro-CEX induced faculty to provide more and better feedback.

From the global entrustment scale used in the micro-CEX, it was possible to demonstrate progression from the first to the third year of residency (Figure 2). One potential concern is loss of granularity in the assessment of different domains, i.e. that we might lose the ability to identify the specific domain in which the resident is weak if we do not ask faculty to score physical examination, history taking, management etc. separately. However, we found a high correlation between the scores in all domains in the mini-CEX (kappa ranged from 0.7 to 0.8; see Appendix 4), indicating a strong halo effect. This suggests that in practice, faculty are making a global assessment anyway rather than separate assessments of separate domains. Faculty and residents perceived that the single global assessment of the micro-CEX provided better assessment.

The messages from faculty and residents about what makes the CEX work for them speak for themselves. The importance of making the form easy to administer is intuitive. The bureaucratic impracticality of paper portfolios was pointed out long ago, and e-portfolios were promoted as the preferred solution (Van Tartwijk & Driessen, 2009). The message here, however, is that administrative details have a significant impact on the utility of the CEX. Many of the issues cited, such as the number of assessments an individual assessor has to make, whether the assessor is equipped to do the assessment on the spot, and whether the assessor has prior exposure to the resident, are administrative and educational design details that faculty training alone cannot solve.

Our study had several limitations. Variations in the clinical environment, such as ward vs ambulatory clinic, variable workload or competing responsibilities of faculty and residents, might have affected how the CEX was administered. However, distractions in the ward also affect the performance of the CEX in real life.

We also note that in this study design, the mini-CEX was performed before the micro-CEX. This was deliberate as the residents and faculty were used to doing the mini-CEX on an ongoing basis so the first mini-CEX would be a “usual” assessment followed by the new assessment. Performing the micro-CEX first might affect how the subsequent mini-CEX was performed.

In this study, we did not attempt to make judgements about the reliability and validity of the micro-CEX, as only one data point was obtained for each trainee. The mini-CEX has been shown to be reliable mainly in the context of repeated testing, preferably within a coherent program of assessment (van der Vleuten & Schuwirth, 2005). Whether the micro-CEX can provide assessment as robust and valid as the mini-CEX depends on how it is used and is an area ripe for future study.

V. CONCLUSION

Our study demonstrated that the micro-CEX has a high rate of acceptability amongst faculty and residents, as well as a measurable improvement in feedback characteristics compared to the usual mini-CEX. The context in which the form is administered in actual practice has significant impact on its utility for feedback and assessment.

Ethical Approval

The study protocol was reviewed by the hospital Institutional Review Board, who deemed this as an educational quality improvement project which did not require IRB approval (Singhealth CIRB Ref: 2018/2696).

Notes on Contributors

Thun How Ong conceptualised and designed the study, administered the interviews, analysed the data and wrote the manuscript.

Hwee Kuan Ong participated in data analysis and coding of the qualitative data.

Adrian Chan participated in data analysis and in grading of the feedback specificity.

Dujeepa D. Samarasekera provided input on initial study design and reviewed the manuscript.

C. P. M. van der Vleuten provided guidance and input at all stages of the study, from initial study design to data analysis and manuscript writing.

Data Availability

The data that support the findings of this study are openly available in Figshare repository, at

https://doi.org/10.6084/m9.figshare.21862068.v1

Acknowledgement

The authors would like to acknowledge the contributions of the following:

Tan Shi Hwee and Nur Suhaila who provided the administrative support that made the whole project feasible.

The Faculty and Residents who were willing to do the extra CEX and the interviews, and who labour daily in pursuit of the ultimate goal of providing better care for our patients.

Funding

No funding was obtained for this study.

Declaration of Interest

All authors have no declaration of interest.

References

Adair, J. G. (1984). The Hawthorne effect: A reconsideration of the methodological artifact. Journal of Applied Psychology, 69(2), 334-345. https://doi.org/10.1037/0021-9010.69.2.334

Berendonk, C., Rogausch, A., Gemperli, A., & Himmel, W. (2018). Variability and dimensionality of students’ and supervisors’ mini-CEX scores in undergraduate medical clerkships – A multilevel factor analysis. BMC Medical Education, 18(1), 100. https://doi.org/10.1186/s12909-018-1207-1

Bindal, T., Wall, D., & Goodyear, H. M. (2011). Trainee doctors’ views on workplace-based assessments: Are they just a tick box exercise? Medical Teacher, 33(11), 919-927. https://doi.org/10.3109/0142159X.2011.558140

Brazil, V., Ratcliffe, L., Zhang, J., & Davin, L. (2012). Mini-CEX as a workplace-based assessment tool for interns in an emergency department – Does cost outweigh value? Medical Teacher, 34(12), 1017-1023. https://doi.org/10.3109/0142159X.2012.719653

Castanelli, D. J., Jowsey, T., Chen, Y., & Weller, J. M. (2016). Perceptions of purpose, value, and process of the mini-Clinical Evaluation Exercise in anesthesia training. Canadian Journal of Anaesthesia, 63(12), 1345-1356. https://doi.org/10.1007/s12630-016-0740-9

Crossley, J., & Jolly, B. (2012). Making sense of work-based assessment: Ask the right questions, in the right way, about the right things, of the right people. Medical Education, 46(1), 28-37. https://doi.org/10.1111/j.1365-2923.2011.04166.x

Hawkins, R. E., Margolis, M. J., Durning, S. J., & Norcini, J. J. (2010). Constructing a validity argument for the mini-Clinical Evaluation Exercise: A review of the research. Academic Medicine, 85(9), 1453-1461. https://doi.org/10.1097/ACM.0b013e3181eac3e6

Ilgen, J. S., Ma, I. W., Hatala, R., & Cook, D. A. (2015). A systematic review of validity evidence for checklists versus global rating scales in simulation-based assessment. Medical Education, 49(2), 161-173. https://doi.org/10.1111/medu.12621

King, N. (2012). Doing template analysis. Sage Knowledge, 426-450. https://doi.org/10.4135/9781526435620.n24

Kogan, J. R., & Holmboe, E. (2018). Practical guide to the evaluation of clinical competence appendix 4.6 (2nd ed.). Elsevier.

Liao, K. C., Pu, S. J., Liu, M. S., Yang, C. W., & Kuo, H. P. (2013). Development and implementation of a mini-Clinical Evaluation Exercise (mini-CEX) program to assess the clinical competencies of internal medicine residents: From faculty development to curriculum evaluation. BMC Medical Education, 13, 31-31. https://doi.org/10.1186/1472-6920-13-31

Lörwald, A. C., Lahner, F. M., Greif, R., Berendonk, C., Norcini, J., & Huwendiek, S. (2018). Factors influencing the educational impact of Mini-CEX and DOPS: A qualitative synthesis. Medical Teacher, 40(4), 414-420. https://doi.org/10.1080/0142159X.2017.1408901

Lorwald, A. C., Lahner, F. M., Nouns, Z. M., Berendonk, C., Norcini, J., Greif, R., & Huwendiek, S. (2018). The educational impact of mini-Clinical Evaluation Exercise (mini-CEX) and Direct Observation of Procedural Skills (DOPS) and its association with implementation: A systematic review and meta-analysis. PLoS One, 13(6), Article e0198009. https://doi.org/10.1371/journal.pone.0198009

Morris, A., Hewitt, J., & Roberts, C. M. (2006). Practical experience of using directly observed procedures, mini Clinical Evaluation Examinations, and peer observation in pre-registration house officer (FY1) trainees. Postgraduate Medical Journal, 82(966), 285-288. https://doi.org/10.1136/pgmj.2005.040477

Nair, B. R., Alexander, H. G., McGrath, B. P., Parvathy, M. S., Kilsby, E. C., Wenzel, J., Frank, I. B., Pachev, G. S., & Page, G. G. (2008). The mini clinical evaluation exercise (mini-CEX) for assessing clinical performance of international medical graduates. Medical Journal of Australia, 189(3), 159-161.

Norcini, J. J., Blank, L. L., Duffy, F. D., & Fortna, G. S. (2003). The mini-CEX: A method for assessing clinical skills. Annals of Internal Medicine, 138(6), 476-481.

Ong, T. H., Ong, H. K., Chan, A., Samarasekera, D. D., & van der Vleuten, C. (2023). Micro CEX vs Mini CEX: Less can be more [Dataset]. Figshare. https://doi.org/10.6084/m9.figshare.21862068.v3

Paradis, E., & Sutkin, G. (2017). Beyond a good story: From Hawthorne Effect to reactivity in health professions education research. Medical Education, 51(1), 31-39. https://doi.org/10.1111/medu.13122

Pelgrim, E. A. M., Kramer, A. W. M., & Van der Vleuten, C. P. M. (2012). Quality of written narrative feedback and reflection in a modified mini-Clinical Evaluation Exercise: An observational study. BMC Medical Education, 12(1), 97. https://doi.org/10.1186/1472-6920-12-97

Regehr, G., MacRae, H., Reznick, R. K., & Szalay, D. (1998). Comparing the psychometric properties of checklists and global rating scales for assessing performance on an OSCE-format examination. Academic Medicine, 73(9), 993-997.

Sabey, A., & Harris, M. (2011). Training in hospitals: What do GP specialist trainees think of workplace-based assessments? Education for Primary Care, 22(2), 90-99.

Tavares, W., Ginsburg, S., & Eva, K. W. (2016). Selecting and simplifying: Rater performance and behavior when considering multiple competencies. Teaching and Learning in Medicine, 28(1), 41-51. https://doi.org/10.1080/10401334.2015.1107489

van der Vleuten, C. P., & Schuwirth, L. W. (2005). Assessing professional competence: From methods to programmes. Medical Education, 39(3), 309-317. https://doi.org/10.1111/j.1365-2929.2005.02094.x

Van Tartwijk, J., & Driessen, E. W. (2009). Portfolios for assessment and learning: AMEE Guide no. 45. Medical Teacher, 31(9), 790-801. https://doi.org/10.1080/01421590903139201

Walzak, A., Bacchus, M., Schaefer, J. P., Zarnke, K., Glow, J., Brass, C., McLaughlin, K., & Ma, I. W. (2015). Diagnosing technical competence in six bedside procedures: Comparing checklists and a global rating scale in the assessment of resident performance. Academic Medicine, 90(8), 1100-1108. https://doi.org/10.1097/acm.0000000000000704

Weller, J. M., Misur, M., Nicolson, S., Morris, J., Ure, S., Crossley, J., & Jolly, B. (2014). Can I leave the theatre? A key to more reliable workplace-based assessment. British Journal of Anaesthesia, 112(6), 1083-1091. https://doi.org/10.1093/bja/aeu052

Weston, P. S. J., & Smith, C. A. (2014). The use of mini-CEX in UK foundation training six years following its introduction: Lessons still to be learned and the benefit of formal teaching regarding its utility. Medical Teacher, 36(2), 155-163. https://doi.org/10.3109/0142159X.2013.836267

Yanting, S. L., Sinnathamby, A., Wang, D., Heng, M. T. M., Hao, J. L. W., Lee, S. S., Yeo, S. P., & Samarasekera, D. D. (2016). Conceptualizing workplace-based assessment in Singapore: Undergraduate mini-Clinical Evaluation Exercise experiences of students and teachers. Tzu-Chi Medical Journal, 28(3), 113-120. https://doi.org/10.1016/j.tcmj.2016.06.001

*Ong Thun How

Academia, 20 College Road,

Singapore 168609

97100638

Email: ong.thun.how@singhealth.com.sg
