Blueprinting and auditing a postgraduate medical education programme – Lessons from COVID-19

Submitted: 30 May 2022

Accepted: 7 December 2022

Published online: 4 July, TAPS 2023, 8(3), 35-44

https://doi.org/10.29060/TAPS.2023-8-3/OA2876

Rachel Jiayu Lee1*, Jeannie Jing Yi Yap1*, Abhiram Kanneganti1, Carly Yanlin Wu1, Grace Ming Fen Chan1, Citra Nurfarah Zaini Mattar1,2, Pearl Shuang Ye Tong1,2, Susan Jane Sinclair Logan1,2

1Department of Obstetrics and Gynaecology, National University Hospital, Singapore; 2Department of Obstetrics and Gynaecology, Yong Loo Lin School of Medicine, National University of Singapore, Singapore

*Co-first authors

Abstract

Introduction: Disruptions of the postgraduate (PG) teaching programmes by COVID-19 have encouraged a transition to virtual methods of content delivery. This provided an impetus to evaluate the coverage of key learning goals by a pre-existing PG didactic programme in an Obstetrics and Gynaecology Specialty Training Programme. We describe a three-phase audit methodology that was developed for this purpose.

Methods: We performed a retrospective audit of the PG programme conducted by the Department of Obstetrics and Gynaecology at National University Hospital, Singapore, between January and December 2019, utilising a ten-step Training Needs Analysis (TNA). The content of each session was reviewed and mapped against the components of the 15 core Knowledge Areas (KAs) of the Membership of the Royal College of Obstetricians and Gynaecologists (MRCOG) examination syllabus.

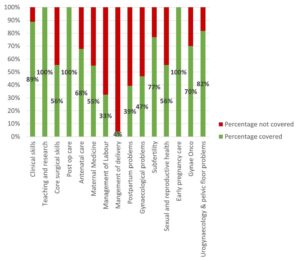

Results: Across 71 PG sessions, coverage of the MRCOG syllabus was 64.9%. Four of the 15 KAs were inadequately covered, meeting fewer than 50% of their knowledge requirements. More procedural KAs, such as “Gynaecological Problems” and those related to labour, were poorly covered (less than 30%). Following the audit, these gaps were addressed with targeted strategies.

Conclusion: Our audit demonstrated that our pre-pandemic PG programme poorly covered core educational objectives i.e. the MRCOG syllabus, and required a systematic realignment. The COVID-19 pandemic, while disruptive to our PG programme, created an opportunity to analyse our training needs and revamp our virtual PG programme.

Keywords: Medical Education; Residency; Postgraduate Education; Obstetrics and Gynaecology; Training Needs Analysis; COVID-19; Auditing Medical Education

Practice Highlights

- Regular audits of PG programmes ensure relevance to key educational objectives.

- Training Needs Analysis facilitates identification of learning goals, deficits & corrective change.

- Mapping against a milestone examination syllabus & using the Delphi technique helps identify learning gaps.

- Procedurally heavy learning goals are poorly served by didactic PG sessions and need individualised assessment.

- A central committee is needed to balance the learning needs of all departmental CME participants.

I. INTRODUCTION

Postgraduate medical education (PG) programmes are an important means of meeting core Specialty Trainees’ (ST) learning goals, alongside other modalities of instruction such as practical training (e.g. supervised patient care or simulator-based training) (Bryant‐Smith et al., 2019) and workplace-based assessments (e.g. case-based discussions and Objective Structured Clinical Examinations [OSCEs]) (Chan et al., 2020; Parry-Smith et al., 2014). In academic medical centres, PG education is often nestled within a wider departmental or hospital Continuing Medical Education (CME) programme. Both PG and CME programmes indirectly improve patient outcomes by keeping clinicians abreast of the latest updates, reinforcing important concepts, and changing practice (Burr & Johanson, 1998; Forsetlund et al., 2021; Marinopoulos et al., 2007; Norman et al., 2004; Raza et al., 2009; Sibley et al., 1982). However, the learning needs of STs must be balanced with those of other learners (e.g. senior clinicians, scientists and allied healthcare professionals), which can be challenging as multiple objectives need to be fulfilled across different groups. Nevertheless, just as with any other component of good-quality patient care, these programmes are amenable to audit and quality improvement initiatives (Davies, 1981; Norman et al., 2004; Palmer & Brackwell, 2014).

The protracted COVID-19 pandemic has disrupted the way we deliver healthcare and conduct non-clinical services (Lim et al., 2009; Wong & Bandello, 2020). In response, the academic medical community has globally embraced teleconferencing platforms such as ZoomⓇ, Microsoft TeamsⓇ and WebexⓇ (Kanneganti, Sia, et al., 2020; Renaud et al., 2021), as well as other custom-built solutions, for the synchronous delivery of didactics and group discourse (Khamees et al., 2022). While surgical disciplines have suffered a decline in the quality of “hands-on” training due to reduced elective surgical load and safe distancing (English et al., 2020), simulators (Bienstock & Heuer, 2022; Chan et al., 2020; Hoopes et al., 2020; Xu et al., 2022), remote surgical preceptorship, and teaching through surgical videos (Chick et al., 2020; Juprasert et al., 2020; Mishra et al., 2020) have helped mitigate some of these losses. Virtual options that have been reproducibly utilised during the pandemic, and that will remain part of the regular armamentarium of postgraduate medical educators, include online didactic lectures, livestreams or video repositories of surgical procedures (Grafton-Clarke et al., 2022), and virtual case discussions and grand ward rounds (Sparkes et al., 2021). Notably, these options allow a wider audience of trainers and trainees to participate from different geographical locations.

At the Department of Obstetrics and Gynaecology, National University Hospital, Singapore, the forced, rapid transition to a virtual CME format (vCME) (Chan et al., 2020; Kanneganti, Lim, et al., 2020) provided an impetus to critically review and revamp the didactic component of our PG programme. Much of this component had traditionally been embedded in our departmental CME programme, which comprises daily morning meetings covering recent specialty and scientific updates, journal clubs, guideline reviews, grand round presentations, surgical videos, exam preparation, topic modular series, and research and quality improvement presentations. The schedule and topics had previously been decided ad hoc by a lead consultant one month in advance, and sessions were presented by a supervised ST or an invited speaker. Although ST attendance at these sessions was mandatory and they made up the bulk of protected ST teaching time, no prior attempt had been made to assess their coverage of core ST learning objectives, in particular the syllabus for milestone ST examinations.

Our main aim was to audit how well our previous PG didactic sessions covered the most important learning goals, with a view to subsequently restructuring them to better meet these goals.

II. METHODS

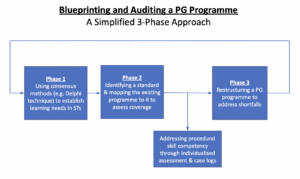

We audited and assessed our departmental CME programme’s relevance to the core learning goals of our STs using a Training Needs Analysis (TNA) methodology. While various types of TNA are used in healthcare and management (Donovan & Townsend, 2019; Gould et al., 2004; Hicks & Hennessy, 1996, 1997; Johnston et al., 2018; Markaki et al., 2021), in general they represent systematic approaches to developing and implementing a training plan. Their common attributes can be distilled into three phases (Figure 1). Importantly for surgical and procedurally heavy disciplines, one dimension not well covered by didactic sessions alone is the assessment of procedural skill competency. This requires separate attention and is beyond the scope of this audit.

Figure 1. A simplified three phase approach to blueprinting, mapping, and auditing a Postgraduate (PG) Education Programme

A. Phase 1: Identifying Organisational Goals and Specific Objectives

The overarching goal of a specialty PG education programme is to produce well-balanced clinicians with a strong knowledge base. Singapore’s Obstetrics and Gynaecology specialty training programmes have adopted the Membership examination of the Royal College of Obstetricians and Gynaecologists (MRCOG) of the United Kingdom (Royal College of Obstetricians and Gynaecologists, 2021) as the milestone examination for progression from junior to senior ST.

First, we adapted the ten-step TNA proposed by Donovan & Townsend (Table 1) to crystallise our core learning goals, identify deficiencies, and subsequently propose steps to address these gaps systematically, in a way suited to our specific context. While most steps were followed without change, we adapted the last step, i.e. Cost Benefit Analysis. As a general organisational and management tool, the original TNA primarily considers the financial cost of implementing a training programme. At an academic medical institution, the “cost” is mainly non-financial and refers chiefly to time taken away from important clinical service roles.

As part of formulating the core learning goals of an ideal PG programme (i.e. Steps 1 to 4), we held a focus group discussion comprising key stakeholders in postgraduate education, including core faculty (CF), physician faculty (PF), and representative STs. The discussion identified 18 goals specific to our department. We then used a modified Delphi method (Hasson et al., 2000; Humphrey-Murto et al., 2017) to distil what CF, PF, and STs felt were important priorities for grooming future specialists. Three rounds of priority ranking were undertaken via an anonymised online voting form, with the 18 goals progressively ranked and shortlisted in each round until five remained. In order from highest to lowest priority, these were 1) exam preparedness, 2) clinical competency, 3) in-depth understanding of professional clinical guidelines, 4) interpretation of medical research literature, and 5) ability to conduct basic clinical research and audits.
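The text does not state how goals were shortlisted between rounds, so the following Python sketch only illustrates one plausible modified Delphi loop under stated assumptions: each panellist ranks the goals still in play, goals are ordered by mean rank, and the list is cut to a fixed size after each round. The cut-offs (12, then 8, then 5), the goal labels G1 to G18 and the three panellists' preferences are hypothetical placeholders, not the department's data.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence

Ranking = Dict[str, int]  # goal -> rank given by one panellist (1 = highest priority)


def aggregate(rankings: Sequence[Ranking]) -> List[str]:
    """Order goals by mean rank across panellists; ties are broken by goal name."""
    goals = list(rankings[0])
    avg = {g: mean(r[g] for r in rankings) for g in goals}
    return sorted(goals, key=lambda g: (avg[g], g))


def modified_delphi(goals: List[str],
                    collect: Callable[[List[str]], Sequence[Ranking]],
                    keep_per_round: Sequence[int]) -> List[str]:
    """Run successive anonymised ranking rounds, keeping only the top goals each time.

    `collect` stands in for the online voting form: given the goals still in play,
    it returns one ranking per panellist. The result is in priority order.
    """
    surviving = list(goals)
    for keep in keep_per_round:
        surviving = aggregate(collect(surviving))[:keep]
    return surviving


# Hypothetical demo: 18 placeholder goals and three panellists with fixed preferences.
goals = [f"G{i}" for i in range(1, 19)]
preferences = [goals, goals[::-1], goals[1:] + goals[:1]]


def collect(surviving: List[str]) -> List[Ranking]:
    """Each hypothetical panellist re-ranks the surviving goals in their own preference order."""
    rankings = []
    for pref in preferences:
        ordered = sorted(surviving, key=pref.index)
        rankings.append({g: i + 1 for i, g in enumerate(ordered)})
    return rankings


result = modified_delphi(goals, collect, keep_per_round=[12, 8, 5])
print(result)  # ['G2', 'G3', 'G4', 'G5', 'G6']
```

Mean rank is only one aggregation choice; a median rank or a consensus threshold (for example, a minimum share of panellists rating a goal as a high priority) are common alternatives in Delphi studies.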

| Step | Training Needs Analysis |
|---|---|
| 1 | Strategic objectives |
| 2 | Operational outcome |
| 3 | Employee Behaviours |
| 4 | Learnable Capabilities |
| 5 | Gap Assessment |
| 6 | Prioritise Learning and Training Needs |
| 7 | Learning Approaches |
| 8 | Roll-out Plan |
| 9 | Evaluation Criteria |
| 10 | Cost Benefit Analysis |

Table 1. 10-step Training Needs Analysis

Table adapted from Donovan, Paul and Townsend, John, Learning Needs Analysis (United Kingdom, Management Pocketbooks, 2019)

MRCOG: Member of the Royal College of Obstetricians and Gynaecologists; O&G: Obstetrics and Gynaecology; CREOG: Council on Resident Education in Obstetrics and Gynecology; ACGME: Accreditation Council for Graduate Medical Education; PG: Postgraduate Education

B. Phase 2: Identifying a Standard and Assessing for Coverage against This Standard

As with any audit, a “gold standard” had to be identified. As the focus group discussion and Delphi method identified exam preparedness as the highest priority, we created a “blueprint” based on the syllabus of the MRCOG examination (Royal College of Obstetricians and Gynaecologists, 2019), which organises more than 200 knowledge requirements into 15 Knowledge Areas (KAs) (Table 2). We mapped the old CME programme against this blueprint to understand the extent of coverage of these KAs, analysing session content from January to December 2019. We felt the best way to ensure systematic coverage of these KAs would be through sessions with pre-identified areas of topical focus conducted during protected teaching time, as opposed to opportunistic and voluntary learning opportunities that may not be available to all STs. In our department, this applied to the morning CME sessions, which formed the bulk of protected teaching time for STs, required mandatory attendance, and covered pre-defined topics. Thus, we excluded didactic sessions where 1) the content of the presentation was unavailable for audit, 2) the session covered administrative aspects and had no pre-identified topical focus, so that learning was opportunistic (e.g. risk management meetings, labour ward audits), or 3) attendance was optional.
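The three exclusion criteria act as a simple filter over the session log. The Python sketch below is illustrative only: the `Session` record, its field names and the example sessions are hypothetical, and it assumes each criterion can be recorded as a yes/no flag per session.

```python
from dataclasses import dataclass


@dataclass
class Session:
    title: str
    content_available: bool  # criterion 1: slides or recording retrievable for audit
    predefined_topic: bool   # criterion 2: False for administrative/opportunistic meetings
    mandatory: bool          # criterion 3: False if ST attendance was optional


def eligible(s: Session) -> bool:
    """A session enters the audit only if none of the three exclusion criteria applies."""
    return s.content_available and s.predefined_topic and s.mandatory


# Hypothetical examples, not sessions from the audited programme.
sessions = [
    Session("Clinical update: pre-eclampsia", True, True, True),
    Session("Risk management meeting", True, False, True),
    Session("Journal club (slides lost)", False, True, True),
    Session("Optional evening lecture", True, True, False),
]
audited = [s.title for s in sessions if eligible(s)]
print(audited)  # ['Clinical update: pre-eclampsia']
```

Keeping each criterion as a separate flag also makes it easy to report how many sessions were excluded for each reason.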

Mapping was conducted independently by two members of the study team (JJYY and CYW), with disagreements resolved by a third member (RJL). For each KA, the number of knowledge requirements fulfilled was expressed as a percentage of all knowledge requirements in that KA.
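A minimal Python sketch of this mapping step, assuming each reviewer produces a set of requirement IDs judged to be covered and the third reviewer is consulted only where the two disagree. The requirement IDs, the two-KA toy syllabus and the adjudication rule are hypothetical. How the overall 64.9% figure was aggregated is not stated, so `pooled_coverage` (covered requirements over all requirements) is only one possible definition.

```python
from typing import Callable, Dict, Set


def reconcile(rev_a: Set[str], rev_b: Set[str],
              adjudicate: Callable[[str], bool]) -> Set[str]:
    """Merge two independent mappings; the third reviewer decides only the disagreements."""
    agreed = rev_a & rev_b
    disputed = rev_a ^ rev_b  # marked as covered by exactly one reviewer
    return agreed | {req for req in disputed if adjudicate(req)}


def ka_coverage(syllabus: Dict[str, Set[str]], covered: Set[str]) -> Dict[str, float]:
    """Percentage of each KA's knowledge requirements covered by at least one session."""
    return {ka: 100 * len(reqs & covered) / len(reqs) for ka, reqs in syllabus.items()}


def pooled_coverage(syllabus: Dict[str, Set[str]], covered: Set[str]) -> float:
    """One possible overall figure (an assumption): covered requirements / all requirements."""
    total = sum(len(reqs) for reqs in syllabus.values())
    hit = sum(len(reqs & covered) for reqs in syllabus.values())
    return 100 * hit / total


# Hypothetical toy syllabus with two KAs and made-up requirement IDs.
syllabus = {"KA-A": {"A1", "A2", "A3", "A4"}, "KA-B": {"B1", "B2"}}
reviewer_1 = {"A1", "A2", "B1", "B2"}
reviewer_2 = {"A1", "A3", "B1", "B2"}
covered = reconcile(reviewer_1, reviewer_2, adjudicate=lambda req: req == "A2")

print(ka_coverage(syllabus, covered))                # {'KA-A': 50.0, 'KA-B': 100.0}
print(round(pooled_coverage(syllabus, covered), 1))  # 66.7
```

An alternative overall figure is the unweighted mean of the per-KA percentages; the two differ whenever KAs contain different numbers of requirements.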

| Core knowledge areas |
|---|
| Clinical skills |
| Teaching and research |
| Core surgical skills |
| Postoperative care |
| Antenatal care |
| Maternal medicine |
| Management of labour |
| Management of delivery |
| Postpartum problems |
| Gynaecological problems |
| Subfertility |
| Sexual and reproductive health |
| Early pregnancy care |
| Gynaecological oncology |
| Urogynaecology & pelvic floor problems |

Table 2. RCOG Core Knowledge Areas (Royal College of Obstetricians and Gynaecologists, 2019)

C. Phase 3: Restructuring a PG Programme

The final phase, i.e. restructuring the PG programme, is guided by the responses to Steps 7-10 of the 10-step TNA (Table 1). As the focus of this article is the methodology for auditing how well our departmental didactic sessions covered our core ST learning goals, i.e. the MRCOG KAs, these subsequent efforts are detailed in the Discussion section.

III. RESULTS

Altogether, 71 presentations were identified (Table 3), of which 12 CME sessions (16.9%) were unavailable and were thus excluded from the mapping exercise. The most common types of CME sessions were clinical updates (31.0%), original research (29.6%), journal clubs (16.9%), and exam-preparation sessions (e.g. Case Based Discussion and OSCE simulations) (12.7%). Overall coverage of the syllabus was 64.9% (Figure 2). The KAs with complete coverage (i.e. 100% of all requirements) were “Teaching and Research”, “Postoperative Care” and “Early Pregnancy Care”. Three KAs had 75-100% coverage, i.e. “Clinical Skills” (89%), “Urogynaecology and Pelvic Floor” (82%), and “Subfertility” (77%), while four were covered below 50%, i.e. “Management of Labour”, “Management of Delivery”, “Postpartum Problems”, and “Gynaecological Problems”. These were more practical KAs that were usually covered during ward cover, in the operating theatre, clinics and labour ward, and during practical skills training workshops and grand ward rounds, where clinical vignettes were discussed opportunistically depending on the in-patient case mix. Nevertheless, this “on-the-ground” training is often unplanned, unstructured and ‘bite-sized’, which makes it difficult to build the broad and deep proficiency in guidelines and knowledge that STs may need to adapt to complex situations.
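The coverage bands used above can be expressed as a small classification function. This Python sketch uses only the KA percentages stated in the text; the other KAs are not reported numerically and are left out. The intermediate 50% to <75% band is an assumption, since the text names only the complete, 75-100% and below-50% groups.

```python
def coverage_band(pct: float) -> str:
    """Classify a KA's coverage percentage into the bands used in the Results."""
    if not 0 <= pct <= 100:
        raise ValueError("coverage must be between 0 and 100")
    if pct == 100:
        return "complete"
    if pct >= 75:
        return "75% to <100%"
    if pct >= 50:
        return "50% to <75%"  # intermediate band: an assumption, not named in the text
    return "inadequate (<50%)"


# Only the KA percentages stated in the text.
reported = {
    "Teaching and Research": 100,
    "Postoperative Care": 100,
    "Early Pregnancy Care": 100,
    "Clinical Skills": 89,
    "Urogynaecology and Pelvic Floor": 82,
    "Subfertility": 77,
}
for ka, pct in reported.items():
    print(f"{ka}: {pct}% -> {coverage_band(pct)}")
```

Fixing the thresholds in one function keeps repeat audits comparable, as the same cut-offs are applied every time.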

| Type of presentation | Number of sessions | Percentage breakdown |
|---|---|---|
| Clinical Updates | 22 | 31.0% |
| Presentation of Original Research | 21 | 29.6% |
| Journal Club | 12 | 16.9% |
| Case Based Discussion | 5 | 7.0% |
| OSCE practice | 4 | 5.6% |
| Others* | 2 | 2.8% |
| Audit | 2 | 2.8% |
| Workshops | 3 | 4.2% |
| Total | 71 | 100% |

*Others: ST Sharing of Overseas Experiences and Trainee Wellbeing

Table 3. Type of CME presentations
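Each percentage in Table 3 is the session count divided by the 71 sessions, so the breakdown can be recomputed directly. A minimal Python check, assuming one-decimal rounding; the grouping of Case Based Discussion and OSCE practice as exam preparation follows the Results text.

```python
# Session counts by type, as listed in Table 3.
counts = {
    "Clinical Updates": 22,
    "Presentation of Original Research": 21,
    "Journal Club": 12,
    "Case Based Discussion": 5,
    "OSCE practice": 4,
    "Others": 2,
    "Audit": 2,
    "Workshops": 3,
}
total = sum(counts.values())
shares = {kind: round(100 * n / total, 1) for kind, n in counts.items()}

# Exam-preparation sessions, as grouped in the Results text.
exam_prep = round(100 * (counts["Case Based Discussion"] + counts["OSCE practice"]) / total, 1)
# The 12 sessions excluded because their content was unavailable.
excluded = round(100 * 12 / total, 1)

print(total)                # 71
print(shares["Workshops"])  # 4.2
print(exam_prep)            # 12.7
print(excluded)             # 16.9
```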

Figure 2. Graph showing the percentage coverage of knowledge areas

IV. DISCUSSION

Our audit revealed relatively low coverage of the MRCOG KAs, with only 64.9% of the syllabus covered. While the morning CME programme caters to all members of the department, its sessions are an important didactic component of ST education and exam preparation as they are deemed “protected” teaching time. There had been no prior formal review of whether the programme catered to this very important section of the department’s workforce. We also found that the KAs with exceptionally low coverage were those involving a large amount of practical, “hands-on” skill (i.e. “Gynaecological Problems”, “Management of Labour”, “Management of Delivery”, and “Postpartum Problems”). As ours is a surgical discipline, this highlighted that these areas needed directed solutions through other forms of practical instruction and evaluation. In the pandemic environment, this may involve virtual or home-based means (Hoopes et al., 2020). These “hands-on” KAs likely require at least semi-annual individualised assessment by the CF through verified case logs, Objective Structured Assessment of Technical Skills, Direct Observation of Procedural Skills, and Non-Technical Skills for Surgeons (NOTSS) (Bisson et al., 2006; Parry-Smith et al., 2014). This targeted assessment was even more crucial during the recovery “catch-up” phase, given the de-skilling caused by reduced elective surgical caseloads (Amparore et al., 2020; Chan et al., 2020), and it facilitated the redistribution of surgical training material to cover training deficits.

While there is significant literature on how to organise a robust PG didactic programme (Colman et al., 2020; Harden, 2001; Willett, 2008), little has been published on how to evaluate an established didactic programme’s coverage of its learners’ educational requirements (Davies, 1981). Most studies evaluating the efficacy of such programmes assess the effects of individual CME sessions on physician knowledge or performance and on patient outcomes after a suitable interval (Davis et al., 1992; Mansouri & Lockyer, 2007), with most reporting a small to medium effect. We believe, however, that our audit process offers a more holistic, reproducible, and structured means of evaluating an existing didactic programme and identifying deficits that can be improved upon, which can bring value to any specialty training programme.

At our institution, safe distancing requirements brought on by the COVID-19 pandemic required a rapid transition to a video-conferencing-based approach, i.e. vCME. As milestone examinations were still being held, the first six months focused primarily on STs, since examination preparation remained a high and undisputed priority and learning opportunities had been significantly disrupted by the pandemic. During this phase, our vCME programme was reorganised into three to four sessions per week, which were peer-led and supervised by a faculty member. Video-conferencing platforms encouraged audience participation through live feedback, questions posed via the chat box, instantaneous online polling, and directed case-based discussions with ST participants. These facilitated real-time feedback to the presenter in a way that had not been possible in face-to-face sessions, owing to factors such as shyness and the difficulty of conducting polls. Another useful feature was the ability to record presentations and store them on a hospital-based server, where STs could access them on demand for revision.

A previously published anonymised questionnaire within our department (Chan et al., 2020) found very favourable opinions of vCME as an effective mode of learning among 85.7% of 28 junior doctors and all nine presenters (100%), with 75% hoping for it to continue even after social distancing policies were relaxed. Nevertheless, commonly reported issues included a lack of personal interaction, difficulty engaging with speakers, technical difficulties, and inaccurate attendance records, as the shared devices used to join vCME sessions sometimes failed to identify who was present. While physical separation across a digital medium alters teacher and learner engagement, studies have also found that virtual platforms provide a useful means of communication and feedback and create a psychologically safe learning environment (Dong et al., 2021; Wasfy et al., 2021).

While our audit focused primarily on STs, departmental CME programmes need to balance the educational needs of the various groups of participants within a clinical department (e.g. senior clinicians, nursing staff, allied healthcare professionals, clinical scientists). Indeed, as these groups began returning to the CME programme about six months after the vCME transition, we created a core postgraduate committee comprising members representing the learning interests of each party, i.e. the Department Research Director, the ST Programme Director and Assistant Director, and a representative senior ST in the fifth or sixth year of training, so that we could continue to meet the recommendations of our TNA while rebalancing the programme to meet the needs of all participants. Out of an average of 20 CME sessions per month, four each were dedicated to departmental and hospital grand rounds. Of the remaining 12 sessions, two were dedicated to covering KAs, four to scientific presentations, three to clinical governance, and one to a journal club. The remaining two were “faculty wildcard” sessions, used at the committee’s discretion to cover poorly covered or more contemporary “breaking news” topics, or to serve as a buffer in the event of cancellations. Indeed, the same TNA-based audit methodology can be employed for any other group of CME participants.
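The monthly allocation above can be written as a simple plan and checked to confirm that the slots add up to the average of 20 sessions. In this Python sketch, the category labels and the `check_month` helper are illustrative and hypothetical, not the committee's actual planning tool.

```python
MONTHLY_SESSIONS = 20  # average number of CME sessions per month, as stated

allocation = {
    "Departmental grand round": 4,
    "Hospital grand round": 4,
    "MRCOG knowledge areas": 2,
    "Scientific presentations": 4,
    "Clinical governance": 3,
    "Journal club": 1,
    "Faculty wildcard": 2,
}


def check_month(plan: dict, expected: int = MONTHLY_SESSIONS) -> None:
    """Raise if a proposed monthly plan does not account for every session slot."""
    planned = sum(plan.values())
    if planned != expected:
        raise ValueError(f"plan has {planned} sessions, expected {expected}")


check_month(allocation)
non_grand_round = (MONTHLY_SESSIONS
                   - allocation["Departmental grand round"]
                   - allocation["Hospital grand round"])
print(non_grand_round)  # 12
```

A check of this kind is useful when a wildcard slot is reassigned, because any change must still leave the month fully accounted for.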

A key limitation of our audit method is that it focused on the breadth of coverage of learning objectives, not on the quality or depth of the teaching. Teaching efficacy is also important in the delivery of learning objectives (Bakar et al., 2012) and needs more specific assessment tools (Metheny et al., 2005). The quality of PG training could be evaluated directly, e.g. through evaluation by the learner (Gillan et al., 2011), or indirectly, e.g. by charting the learner’s progress through OSCEs and CEXs, scheduled competency reviews, and ST examination pass rates (Pinnell et al., 2021). Importantly, despite the rise of virtual learning platforms, there is little consensus on the best way to evaluate e-learning methods (De Leeuw et al., 2019). Nevertheless, our main audit goal was to assess the extent of coverage of the MRCOG syllabus, which is a key training outcome. Future audits should, however, incorporate qualitative feedback to assess teaching quality as well. Further research should evaluate the effects of optimising a PG didactic programme on key outcomes such as ST behaviour and perceptions, and on objective outcomes such as examination results.

Finally, while these results come from an audit conducted in a single hospital department that used a morning CME programme as the basis for evaluation, we believe that this audit methodology, based on a ten-step TNA and utilising the Delphi method and syllabus mapping techniques (Harden, 2001), can be reproduced in any academic department that has a regular didactic programme, as long as a suitable standard is selected. The Delphi method can easily be conducted via online survey platforms (e.g. Google Forms) to crystallise the PG programme goals. Our audit shows that, without a systematic evaluation of past didactic sessions, even a mature CME programme can fall significantly short of meeting the needs of its learners, and that PG didactic sessions need deliberate planning.

V. CONCLUSION

Just as with any other aspect of healthcare delivery, CME and PG programmes are amenable to audit and must adjust to an ever-changing delivery landscape. Rather than curse the darkness during the COVID-19 pandemic, we explored the potential of reformatting the PG programme and adjusting course to better suit the needs of our STs. We demonstrate a method of auditing an existing programme: distilling important learning goals, comparing the programme against an appropriate standard (i.e. coverage of the MRCOG KAs), and implementing changes using reproducible techniques such as the Delphi method (Humphrey-Murto et al., 2017). This process should be a regular mainstay of any mature ST programme to ensure continued relevance. As continual outbreaks, even amongst vaccinated populations (Amit et al., 2021; Bar-On et al., 2021; Bergwerk et al., 2021), augur a future of COVID-19 endemicity, we must accept a “new normal” comprising intermittent workplace infection control policies such as segregation, shift work, and restrictions on in-person meetings (Kwon et al., 2020; Liang et al., 2020). Through our experience, we have shown that this auditing methodology can also be applied to vCME programmes.

Notes on Contributors

Rachel Jiayu Lee participated in the data collection and review, the writing of the paper, and the formatting for publication.

Jeannie Jing Yi Yap participated in the data collection and review, the writing of the paper, and the formatting for publication.

Carly Yanlin Wu participated in data collection and review.

Grace Ming Fen Chan participated in data collection and review.

Abhiram Kanneganti was involved in the writing of the paper, editing, and formatting for publication.

Citra Nurfarah Zaini Mattar participated in the editing and direction of the paper.

Pearl Shuang Ye Tong participated in the editing and direction of the paper.

Susan Jane Sinclair Logan participated in the editing and direction of the paper.

Ethical Approval

IRB approval for waiver of consent (National Healthcare Group DSRB 2020/00360) was obtained for the questionnaire assessing attitudes towards vCME.

Data Availability

There is no relevant data available for sharing in this paper.

Acknowledgement

We would like to acknowledge the roles of Mr Xiu Cai Wong Edwin, Mr Lee Boon Kai and Ms Teo Xin Yue in the administrative roles behind auditing and reformatting the PG medical education programme.

Funding

There was no funding for this article.

Declaration of Interest

The authors have no conflicts of interest in connection with this article.

References

Amit, S., Beni, S. A., Biber, A., Grinberg, A., Leshem, E., & Regev-Yochay, G. (2021). Postvaccination COVID-19 among healthcare workers, Israel. Emerging Infectious Diseases, 27(4), 1220. https://doi.org/10.3201/eid2704.210016

Amparore, D., Claps, F., Cacciamani, G. E., Esperto, F., Fiori, C., Liguori, G., Serni, S., Trombetta, C., Carini, M., Porpiglia, F., Checcucci, E., & Campi, R. (2020). Impact of the COVID-19 pandemic on urology residency training in Italy. Minerva Urologica e Nefrologica, 72(4), 505-509. https://doi.org/10.23736/s0393-2249.20.03868-0

Bakar, A. R., Mohamed, S., & Zakaria, N. S. (2012). They are trained to teach, but how confident are they? A study of student teachers’ sense of efficacy. Journal of Social Sciences, 8(4), 497-504. https://doi.org/10.3844/jssp.2012.497.504

Bar-On, Y. M., Goldberg, Y., Mandel, M., Bodenheimer, O., Freedman, L., Kalkstein, N., Mizrahi, B., Alroy-Preis, S., Ash, N., Milo, R., & Huppert, A. (2021). Protection of BNT162b2 vaccine booster against Covid-19 in Israel. New England Journal of Medicine, 385(15), 1393-1400. https://doi.org/10.1056/NEJMoa2114255

Bergwerk, M., Gonen, T., Lustig, Y., Amit, S., Lipsitch, M., Cohen, C., Mandelboim, M., Levin, E. G., Rubin, C., Indenbaum, V., Tal, I., Zavitan, M., Zuckerman, N., Bar-Chaim, A., Kreiss, Y., & Regev-Yochay, G. (2021). Covid-19 breakthrough infections in vaccinated health care workers. New England Journal of Medicine, 385(16), 1474-1484. https://doi.org/10.1056/NEJMoa2109072

Bienstock, J., & Heuer, A. (2022). A review on the evolution of simulation-based training to help build a safer future. Medicine, 101(25), Article e29503. https://doi.org/10.1097/MD.0000000000029503

Bisson, D. L., Hyde, J. P., & Mears, J. E. (2006). Assessing practical skills in obstetrics and gynaecology: Educational issues and practical implications. The Obstetrician & Gynaecologist, 8(2), 107-112. https://doi.org/10.1576/toag.8.2.107.27230

Bryant‐Smith, A., Rymer, J., Holland, T., & Brincat, M. (2019). ‘Perfect practice makes perfect’: The role of laparoscopic simulation training in modern gynaecological training. The Obstetrician & Gynaecologist, 22(1), 69-74. https://doi.org/10.1111/tog.12619

Burr, R., & Johanson, R. (1998). Continuing medical education: An opportunity for bringing about change in clinical practice. British Journal of Obstetrics and Gynaecology, 105(9), 940-945. https://doi.org/10.1111/j.1471-0528.1998.tb10255.x

Chan, G. M. F., Kanneganti, A., Yasin, N., Ismail-Pratt, I., & Logan, S. J. S. (2020). Well-being, obstetrics and gynaecology and COVID-19: Leaving no trainee behind. Australian and New Zealand Journal of Obstetrics and Gynaecology, 60(6), 983-986. https://doi.org/10.1111/ajo.13249

Chick, R. C., Clifton, G. T., Peace, K. M., Propper, B. W., Hale, D. F., Alseidi, A. A., & Vreeland, T. J. (2020). Using technology to maintain the education of residents during the COVID-19 pandemic. Journal of Surgical Education, 77(4), 729-732. https://doi.org/10.1016/j.jsurg.2020.03.018

Colman, S., Wong, L., Wong, A. H. C., Agrawal, S., Darani, S. A., Beder, M., Sharpe, K., & Soklaridis, S. (2020). Curriculum mapping: An innovative approach to mapping the didactic lecture series at the University of Toronto postgraduate psychiatry. Academic Psychiatry, 44(3), 335-339. https://doi.org/10.1007/s40596-020-01186-0

Davies, I. J. T. (1981). The assessment of continuing medical education. Scottish Medical Journal, 26(2), 125-134. https://doi.org/10.1177/003693308102600208

Davis, D. A., Thomson, M. A., Oxman, A. D., & Haynes, R. B. (1992). Evidence for the effectiveness of CME: A review of 50 randomized controlled trials. Journal of the American Medical Association, 268(9), 1111-1117. https://doi.org/10.1001/jama.1992.03490090053014

De Leeuw, R., De Soet, A., Van Der Horst, S., Walsh, K., Westerman, M., & Scheele, F. (2019). How we evaluate postgraduate medical e-learning: Systematic review. JMIR Medical Education, 5(1), Article e13128.

Dong, C., Lee, D. W.-C., & Aw, D. C.-W. (2021). Tips for medical educators on how to conduct effective online teaching in times of social distancing. Proceedings of Singapore Healthcare, 30(1), 59-63. https://doi.org/10.1177/2010105820943907

Donovan, P., & Townsend, J. (2019). Learning Needs Analysis. Management Pocketbooks.

English, W., Vulliamy, P., Banerjee, S., & Arya, S. (2020). Surgical training during the COVID-19 pandemic – The cloud with a silver lining? British Journal of Surgery, 107(9), e343-e344. https://doi.org/10.1002/bjs.11801

Forsetlund, L., O’Brien, M. A., Forsén, L., Mwai, L., Reinar, L. M., Okwen, M. P., Horsley, T., & Rose, C. J. (2021). Continuing education meetings and workshops: Effects on professional practice and healthcare outcomes. Cochrane Database of Systematic Reviews, 9(9), CD003030. https://doi.org/10.1002/14651858.CD003030.pub3

Gillan, C., Lovrics, E., Halpern, E., Wiljer, D., & Harnett, N. (2011). The evaluation of learner outcomes in interprofessional continuing education: A literature review and an analysis of survey instruments. Medical Teacher, 33(9), e461-e470. https://doi.org/10.3109/0142159X.2011.587915

Gould, D., Kelly, D., White, I., & Chidgey, J. (2004). Training needs analysis. A literature review and reappraisal. International Journal of Nursing Studies, 41(5), 471-486. https://doi.org/10.1016/j.ijnurstu.2003.12.003

Grafton-Clarke, C., Uraiby, H., Gordon, M., Clarke, N., Rees, E., Park, S., Pammi, M., Alston, S., Khamees, D., Peterson, W., Stojan, J., Pawlik, C., Hider, A., & Daniel, M. (2022). Pivot to online learning for adapting or continuing workplace-based clinical learning in medical education following the COVID-19 pandemic: A BEME systematic review: BEME Guide No. 70. Medical Teacher, 44(3), 227-243. https://doi.org/10.1080/0142159X.2021.1992372

Harden, R. M. (2001). AMEE Guide No. 21: Curriculum mapping: A tool for transparent and authentic teaching and learning. Medical Teacher, 23(2), 123-137. https://doi.org/10.1080/01421590120036547

Hasson, F., Keeney, S., & McKenna, H. (2000). Research guidelines for the Delphi survey technique. Journal of Advanced Nursing, 32(4), 1008-1015. https://doi.org/10.1046/j.1365-2648.2000.t01-1-01567.x

Hicks, C., & Hennessy, D. (1996). Applying psychometric principles to the development of a training needs analysis questionnaire for use with health visitors, district and practice nurses. NT Research, 1(6), 442-454. https://doi.org/10.1177/174498719600100608

Hicks, C., & Hennessy, D. (1997). The use of a customized training needs analysis tool for nurse practitioner development. Journal of Advanced Nursing, 26(2), 389-398. https://doi.org/10.1046/j.1365-2648.1997.1997026389.x

Hoopes, S., Pham, T., Lindo, F. M., & Antosh, D. D. (2020). Home surgical skill training resources for obstetrics and gynecology trainees during a pandemic. Obstetrics and Gynecology, 136(1), 56-64. https://doi.org/10.1097/aog.0000000000003931

Humphrey-Murto, S., Varpio, L., Wood, T. J., Gonsalves, C., Ufholz, L.-A., Mascioli, K., Wang, C., & Foth, T. (2017). The use of the Delphi and other consensus group methods in medical education research: A review. Academic Medicine, 92(10), 1491-1498. https://doi.org/10.1097/ACM.0000000000001812

Johnston, S., Coyer, F. M., & Nash, R. (2018). Kirkpatrick’s evaluation of simulation and debriefing in health care education: a systematic review. Journal of Nursing Education, 57(7), 393-398. https://doi.org/10.3928/01484834-20180618-03

Juprasert, J. M., Gray, K. D., Moore, M. D., Obeid, L., Peters, A. W., Fehling, D., Fahey, T. J., III, & Yeo, H. L. (2020). Restructuring of a general surgery residency program in an epicenter of the Coronavirus Disease 2019 pandemic: Lessons from New York City. JAMA Surgery, 155(9), 870-875. https://doi.org/10.1001/jamasurg.2020.3107

Kanneganti, A., Lim, K. M. X., Chan, G. M. F., Choo, S.-N., Choolani, M., Ismail-Pratt, I., & Logan, S. J. S. (2020). Pedagogy in a pandemic – COVID-19 and virtual continuing medical education (vCME) in obstetrics and gynecology. Acta Obstetricia et Gynecologica Scandinavica, 99(6), 692-695. https://doi.org/10.1111/aogs.13885

Kanneganti, A., Sia, C.-H., Ashokka, B., & Ooi, S. B. S. (2020). Continuing medical education during a pandemic: An academic institution’s experience. Postgraduate Medical Journal, 96, 384-386. https://doi.org/10.1136/postgradmedj-2020-137840

Khamees, D., Peterson, W., Patricio, M., Pawlikowska, T., Commissaris, C., Austin, A., Davis, M., Spadafore, M., Griffith, M., Hider, A., Pawlik, C., Stojan, J., Grafton-Clarke, C., Uraiby, H., Thammasitboon, S., Gordon, M., & Daniel, M. (2022). Remote learning developments in postgraduate medical education in response to the COVID-19 pandemic – A BEME systematic review: BEME Guide No. 71. Medical Teacher, 44(5), 466-485. https://doi.org/10.1080/0142159X.2022.2040732

Kwon, Y. S., Tabakin, A. L., Patel, H. V., Backstrand, J. R., Jang, T. L., Kim, I. Y., & Singer, E. A. (2020). Adapting urology residency training in the COVID-19 era. Urology, 141, 15-19. https://doi.org/10.1016/j.urology.2020.04.065

Liang, Z. C., Ooi, S. B. S., & Wang, W. (2020). Pandemics and their impact on medical training: Lessons from Singapore. Academic Medicine, 95(9), 1359-1361. https://doi.org/10.1097/ACM.0000000000003441

Lim, E. C., Oh, V. M., Koh, D. R., & Seet, R. C. (2009). The challenges of “continuing medical education” in a pandemic era. Annals Academy of Medicine Singapore, 38(8), 724-726. https://www.ncbi.nlm.nih.gov/pubmed/19736579

Mansouri, M., & Lockyer, J. (2007). A meta-analysis of continuing medical education effectiveness. Journal of Continuing Education in the Health Professions, 27(1), 6-15. https://doi.org/10.1002/chp.88

Marinopoulos, S. S., Dorman, T., Ratanawongsa, N., Wilson, L. M., Ashar, B. H., Magaziner, J. L., Miller, R. G., Thomas, P. A., Prokopowicz, G. P., Qayyum, R., & Bass, E. B. (2007). Effectiveness of continuing medical education. Evidence Report/Technology Assessment (Full Report), 149, 1-69. https://www.ncbi.nlm.nih.gov/pubmed/17764217

Markaki, A., Malhotra, S., Billings, R., & Theus, L. (2021). Training needs assessment: Tool utilization and global impact. BMC Medical Education, 21(1), Article 310. https://doi.org/10.1186/s12909-021-02748-y

Metheny, W. P., Espey, E. L., Bienstock, J., Cox, S. M., Erickson, S. S., Goepfert, A. R., Hammoud, M. M., Hartmann, D. M., Krueger, P. M., Neutens, J. J., & Puscheck, E. (2005). To the point: Medical education reviews evaluation in context: Assessing learners, teachers, and training programs. American Journal of Obstetrics and Gynecology, 192(1), 34-37. https://doi.org/10.1016/j.ajog.2004.07.036

Mishra, K., Boland, M. V., & Woreta, F. A. (2020). Incorporating a virtual curriculum into ophthalmology education in the coronavirus disease-2019 era. Current Opinion in Ophthalmology, 31(5), 380-385. https://doi.org/10.1097/icu.0000000000000681

Norman, G. R., Shannon, S. I., & Marrin, M. L. (2004). The need for needs assessment in continuing medical education. BMJ (Clinical research ed.), 328(7446), 999-1001. https://doi.org/10.1136/bmj.328.7446.999

Palmer, W., & Brackwell, L. (2014). A national audit of maternity services in England. BJOG, 121(12), 1458-1461. https://doi.org/10.1111/1471-0528.12973

Parry-Smith, W., Mahmud, A., Landau, A., & Hayes, K. (2014). Workplace-based assessment: A new approach to existing tools. The Obstetrician & Gynaecologist, 16(4), 281-285. https://doi.org/10.1111/tog.12133

Pinnell, J., Tranter, A., Cooper, S., & Whallett, A. (2021). Postgraduate medical education quality metrics panels can be enhanced by including learner outcomes. Postgraduate Medical Journal, 97(1153), 690-694. https://doi.org/10.1136/postgradmedj-2020-138669

Raza, A., Coomarasamy, A., & Khan, K. S. (2009). Best evidence continuous medical education. Archives of Gynecology and Obstetrics, 280(4), 683-687. https://doi.org/10.1007/s00404-009-1128-7

Renaud, C. J., Chen, Z. X., Yuen, H.-W., Tan, L. L., Pan, T. L. T., & Samarasekera, D. D. (2021). Impact of COVID-19 on health profession education in Singapore: Adoption of innovative strategies and contingencies across the educational continuum. The Asia Pacific Scholar, 6(3), 14-23. https://doi.org/10.29060/TAPS.2021-6-3/RA2346

Royal College of Obstetricians and Gynaecologists. (2019). Core Curriculum for Obstetrics & Gynaecology: Definitive Document 2019. https://www.rcog.org.uk/media/j3do0i1i/core-curriculum-2019-definitive-document-may-2021.pdf

Sibley, J. C., Sackett, D. L., Neufeld, V., Gerrard, B., Rudnick, K. V., & Fraser, W. (1982). A randomized trial of continuing medical education. New England Journal of Medicine, 306(9), 511-515. https://doi.org/10.1056/NEJM198203043060904

Sparkes, D., Leong, C., Sharrocks, K., Wilson, M., Moore, E., & Matheson, N. J. (2021). Rebooting medical education with virtual grand rounds during the COVID-19 pandemic. Future Healthcare Journal, 8(1), e11-e14. https://doi.org/10.7861/fhj.2020-0180

Wasfy, N. F., Abouzeid, E., Nasser, A. A., Ahmed, S. A., Youssry, I., Hegazy, N. N., Shehata, M. H. K., Kamal, D., & Atwa, H. (2021). A guide for evaluation of online learning in medical education: A qualitative reflective analysis. BMC Medical Education, 21(1), Article 339. https://doi.org/10.1186/s12909-021-02752-2

Willett, T. G. (2008). Current status of curriculum mapping in Canada and the UK. Medical Education, 42(8), 786-793. https://doi.org/10.1111/j.1365-2923.2008.03093.x

Wong, T. Y., & Bandello, F. (2020). Academic ophthalmology during and after the COVID-19 pandemic. Ophthalmology, 127(8), e51-e52. https://doi.org/10.1016/j.ophtha.2020.04.029

Xu, J., Zhou, Z., Chen, K., Ding, Y., Hua, Y., Ren, M., & Shen, Y. (2022). How to minimize the impact of COVID-19 on laparoendoscopic single-site surgery training? ANZ Journal of Surgery, 92, 2102-2108. https://doi.org/10.1111/ans.17819

*Abhiram Kanneganti

Department of Obstetrics and Gynaecology,

NUHS Tower Block, Level 12,

1E Kent Ridge Road,

Singapore 119228

Email: abhiramkanneganti@gmail.com
