Examiner training for the Malaysian anaesthesiology exit level assessment: Factors affecting the effectiveness of a faculty development intervention during the COVID-19 pandemic

Submitted: 30 June 2022

Accepted: 31 October 2022

Published online: 4 July, TAPS 2023, 8(3), 26-34

https://doi.org/10.29060/TAPS.2023-8-3/OA2834

Noorjahan Haneem Md Hashim1, Shairil Rahayu Ruslan1, Ina Ismiarti Shariffuddin1, Woon Lai Lim1, Christina Phoay Lay Tan2 & Vinod Pallath3

1Department of Anaesthesiology, Faculty of Medicine, Universiti Malaya, Malaysia; 2Department of Primary Care Medicine, Faculty of Medicine, Universiti Malaya, Malaysia; 3Medical Education Research & Development Unit, Dean’s Office, Faculty of Medicine, Universiti Malaya, Malaysia

Abstract

Introduction: Examiner training is essential to ensure the trustworthiness of the examination process and results. The Anaesthesiology examiners’ training programme to standardise examination techniques and standards across seniority, subspecialty, and institutions was developed using McLean’s adaptation of Kern’s framework.

Methods: The programme was delivered through an online platform due to pandemic constraints. Key focus areas were Performance Dimension Training (PDT), Frame-of-Reference Training (FORT) and factors affecting validity. Training methods included interactive lectures, facilitated discussions and experiential learning sessions using the rubrics created for the viva examination. The programme effectiveness was measured using the Kirkpatrick model for programme evaluation.

Results: Seven out of eleven participants rated the programme content as useful and relevant. Four participants showed improvement in the post-test, when compared to the pre-test. Five participants reported behavioural changes during the examination, either during the preparation or conduct of the examination. Factors that contributed to this intervention’s effectiveness were identified through the MOAC (motivation, opportunities, abilities, and communality) model.

Conclusion: Though not all examiners attended the training session, all were committed to a fairer and more transparent examination and motivated to ensure ease of the process. The success of any faculty development programme must be defined and the factors affecting it must be identified to ensure engagement and sustainability of the programme.

Keywords: Medical Education, Health Profession Education, Examiner Training, Faculty Development, Assessment, MOAC Model, Programme Evaluation

Practice Highlights

- A faculty development initiative must be tailored to faculty’s learning needs and context.

- A simple framework of planning, implementing, and evaluating can be used to design a programme.

- Target outcome measures and evaluation plans must be included in the planning process.

- The Kirkpatrick model is a useful tool for programme evaluation, answering whether the programme has met its objectives.

- The MOAC model is a useful tool to explain why a programme has met its objective.

I. INTRODUCTION

Anaesthesiology specialist training in Malaysia comprises a 4-year clinical master’s programme. At the time of our workshop, five local public universities offered the programme. The course content is similar in all universities, but the course delivery may differ to align with each university’s rules and regulations. The summative examinations are held as a Conjoint Examination. Examiners include lecturers from all five universities, specialists from the Ministry of Health and external examiners from international Anaesthesiology training programmes. The examination consists of a written and a viva voce examination. The areas examined are the knowledge and cognitive skills in patient management.

A speciality training programme’s exit level assessment is an essential milestone for licensing. In our programme, the exit examination occurs at the end of the training before trainees practise independently in the healthcare system and are eligible for national specialist registration. Therefore, aligning the curriculum and assessment to licensing requirements is necessary.

Examiners play an important role during this high-stakes summative examination, as they decide whether graduating trainees may work as specialists in the community. Therefore, examiners must understand their role. In recent years, the anaesthesiology training programme providers in Malaysia have been taking measures to improve the validity of the examination. These include a stringent vetting process to ensure examination content reflects the syllabus, questions are unambiguous, and the examiners agree on the criteria for passing. However, previous examinations revealed that although examiners were clear on the aim of the examination, some utilised different assessment approaches, which were possibly coloured by personal and professional experiences, and thus needed constant calibration on the passing criteria. In addition, during examiner discussions, different examiners were found to have different skill levels in constructing focused higher-order questions and were not fully aware of potential cognitive biases that may affect the examination results.

These insights from previous examinations warranted a specific skill training session to ensure the trustworthiness of the examination process and results (Blew et al., 2010; Iqbal et al., 2010; Juul et al., 2019, Chapter 8, pp. 127-140; McLean et al., 2008). The examiners and the Specialty committee were keen to ensure that these issues were addressed with a training programme that complements the current on-the-job examiner training.

II. METHODS

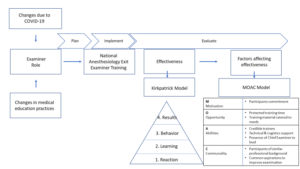

An examiner training module was developed using McLean’s adaptation of Kern’s framework for curriculum development: Planning, Implementation and Evaluation (McLean et al., 2008; Thomas et al., 2015). A conceptual framework for the examiner training programme was drawn up from the programme’s conception stage to the evaluation of its outcome, as illustrated in Figure 1 (Steinert et al., 2016).

Figure 1: The conceptual framework for the examiner training programme and evaluation of its effectiveness

A. Planning

Three key focus areas were identified for the training programme: (1) Performance Dimension Training (PDT); (2) examiner calibration with Frame-of-Reference Training (FORT); and (3) identifying factors affecting the validity of results and measures that can be taken to prevent them.

1) Performance dimension training (Feldman et al., 2012): The aim was to improve examination validity by reducing examiner errors or biases unrelated to the examinees’ targeted performance behaviours. Finalised marking schemes outlining competencies to be assessed required agreement by all the examiners ahead of time. These needed to be clearly defined and easily understood by all the examiners, and consistency was key to reducing examiner bias.

2) Examiner calibration with Frame-of-Reference Training (FORT) (Newman et al., 2016): Differing levels of experience among all the participants meant that there were differing expectations and performances among them. The examiner training programme needed to assist examiners in resetting expectations and criteria for assessing the candidates’ competencies. This examiner calibration was achieved using pre-recorded simulated viva sessions in which the participants rated candidates’ performances in each simulated viva session and received immediate feedback on their ability and criteria for scoring the candidates.

3) Identifying factors affecting the validity of results (Lineberry, 2019): Factors that may affect the validity of examination results may be related to construct underrepresentation (CU), where the results only reflect one part of an attribute being examined; or construct-irrelevant variance (CIV), where the results are being affected by areas or issues other than the attribute being examined.

An example of CU is sampling issues where only a limited area of the syllabus is examined, or an answer key is limited by the availability of evidence or content expertise.

Examples of CIV include the different ways a concept can be interpreted in different cultures or training centres, ambiguous questions, examiner cognitive biases, examiner fatigue, examinee language abilities, and examinees guessing or cheating. The examiner training programme was designed with the objectives listed in Table 1.

| Malaysian Anaesthesiology Exit Level Examiner Training Programme |
|---|
| 1. Participants should be able to define the purpose and competencies to be assessed in the viva examination. |
| 2. Participants should be able to construct high-order questions (elaborating, probing, and justifying). |
| 3. Participants should be able to agree on anchors on rating scales of examination and narrow the range of ratings for the same encounter everyone observes. |
| 4. Participants should be able to calibrate the scoring of different levels of responses. |

Table 1: Objectives of the Faculty Development Intervention
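Objective 3 refers to narrowing the range of ratings that different examiners give to the same observed encounter. As a minimal illustrative sketch only, the range per encounter could be computed as below. The function name `rating_spread`, the 1 to 5 scale and the ratings shown are all assumptions made for illustration; they are not part of the programme materials and are not data from this study.

```python
def rating_spread(ratings_by_encounter):
    """Map each encounter to (lowest, highest, range) of its examiner ratings."""
    spread = {}
    for encounter, ratings in ratings_by_encounter.items():
        lowest, highest = min(ratings), max(ratings)
        spread[encounter] = (lowest, highest, highest - lowest)
    return spread


# Hypothetical ratings on an assumed 1-5 scale, one list per simulated viva.
# These numbers are invented for illustration and are NOT study data.
example_ratings = {
    "simulated_viva_1": [2, 4, 5, 3],
    "simulated_viva_2": [3, 3, 4, 3],
}

for encounter, (lowest, highest, width) in rating_spread(example_ratings).items():
    print(f"{encounter}: lowest={lowest}, highest={highest}, range={width}")
```

A smaller range for the same encounter after discussion would suggest that examiners are converging on shared anchors, although the range alone does not show whether the agreed rating is the appropriate one.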

B. Implementation

The faculty intervention programme was designed as a one-day online programme to be attended by potential examiners for the Anaesthesiology Exit Examination. The programme objectives were prioritised from the needs assessment and designed based on Tekian & Norcini’s recommendations (Tekian & Norcini, 2016). Due to time constraints, training was performed using an online platform closer to the examination dates after obtaining university clearance on confidentiality regarding assessment issues.

The structure and contents of the examiner training programme are outlined in Table 2 and are further elaborated in Appendix A.

| General content | Specific content |
|---|---|
| Lectures | 1. Orientation to the examination regulations, objectives, structure and format of the final examination. |
| | 2. Ensuring validity of the viva examination: elaborating on the threats present to the process and how to mitigate these concerns. |
| | 3. Creating high-order questions based on competencies to be assessed and promoting appropriate examiner behaviours through consistency and increasing reliability. |
| | 4. Utilising marking schemes, anchors and making inferences with: |
| Experiential learning sessions | 1. Participants discuss and agree on the competencies to be assessed. 2. Participants work in groups to construct questions based on a given scenario and competencies to be assessed. 3. Participants finalise a rating scale to be used in the examination. 4. Participants observe videos of simulated examination candidates performing at various levels of competencies and rate their performance. The discussion here focused on the similarities and differences between examiners. |
| Participant feedback and evaluation | A question-and-answer session is held to iron out any doubts and queries from the participants. |

Table 2: Contents and structure of the examiner training programme

Based on the objectives, the organisers invited a multidisciplinary group of facilitators. The group consisted of anaesthesiologists, medical education experts in assessment and faculty development, and a technical and logistics support team to ensure efficient delivery of the online programme.

A multimodal approach to delivery was adopted to accommodate the diversity of the examiner group (gender, seniority, subspeciality, and examination experience). Explicit ground rules were agreed upon to underpin the safe and respectful learning environment. The educational strategy included interactive lectures, hands-on practice using rubrics created and calibration using video-assisted scenarios. The programme objectives were embedded and reinforced with each strategy. Pre- and post-tests were performed to help participants gauge their learning and assist the programme organisers in evaluating the participants’ learning.

This was the first time such a programme was held within the local setting. Participants were all anaesthesiologists by profession, were actively involved in clinical duties within a tertiary hospital setting and consented to participate in this programme. As potential examiners, they all had prior experience as observers of the examination process, with the majority having previous experience as examiners as well.

The programme was organised during the peak of the COVID-19 pandemic and was managed on a fully online platform to ensure safety and minimise the time taken away from clinical duties. In addition, participants received protected time for this programme, a necessary luxury as anaesthesiologists were at the forefront of managing the pandemic.

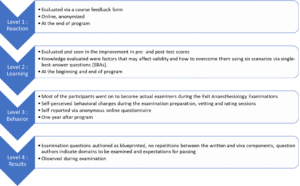

C. Evaluation

The Kirkpatrick model (McLean et al., 2008; Newstrom, 1995) was used to evaluate the programme’s effectiveness, as described and elaborated in Figure 2.

Figure 2: The Kirkpatrick model, elaborated for this programme

The MOAC model (Vollenbroek, 2019), expanded from the MOA model (Marin-Garcia & Martinez Tomas, 2016) of Blumberg and Pringle (1982), was used to examine factors that contributed to the effectiveness of the programme. Motivation, opportunity, ability, and communality are factors that drive action and performance.

III. RESULTS

Eleven participants attended the programme. These participants were examiners for the 2021 examinations from the university training centres and the Ministry of Health, Malaysia. Only one of the participants would be a first-time examiner in the Exit Examination. Four of the would-be examiners could not attend due to service priorities.

A. Level 1: Reaction

Seven of the eleven participants completed the programme evaluation form, which is openly available in Figshare at https://doi.org/10.6084/m9.figshare.20189309.v1 (Tan & Pallath, 2022). All of them rated the programme content as useful and relevant to their examination duties and stated that the content and presentations were pitched at the correct level, with appropriate visual aids and reference materials. The online learning opportunity was also rated as good.

All seven also intended to make behavioural changes after attending the programme. Excerpts from their responses include:

“I am more cognizant of the candidates’ understanding to questions and marking schemes”

“Yes. We definitely need the rubric/marking scheme for standardisation. Will also try to reduce all the possible biases as mentioned in the programme.”

“Yes, as I will be more agreeable to question standardisation in viva examination because it makes it fairer for the candidates.”

The participants also shared their understanding of the importance of standardisation and examiner training and would recommend this programme to be conducted annually. They agreed that the examiner training programme should be made mandatory for all new examiners, with the option of refresher courses for veteran examiners if appropriate.

B. Level 2: Learning

All 11 participants completed the pre- and post-tests. The data supporting these findings are openly available in Figshare at https://doi.org/10.6084/m9.figshare.20186582.v1 (Md Hashim, 2021). The participants’ marks in both tests are shown in Appendix B. The areas that showed improvement in scores were identifying why under-sampling is a problem and methods to prevent validity threats. Understanding the source of validity threat from cognitive biases showed a decline in scores (question 2, from 11 to 8, and question 3, from 10 to 8).

Comparing the post-test scores to pre-test scores, four participants showed improvement, four showed no change (one of the participants answered all questions correctly in both tests) and three participants showed a decline in test scores.
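The comparison above groups each participant by the direction of change between the two tests. A minimal sketch of that tally is given below, assuming each participant has one pre-test and one post-test score. The function names and the score pairs are hypothetical and chosen only to show the logic; the participants’ actual marks are those in Appendix B.

```python
from collections import Counter


def classify_change(pre_score, post_score):
    """Label one participant's change from pre-test to post-test."""
    if post_score > pre_score:
        return "improved"
    if post_score < pre_score:
        return "declined"
    return "no change"


def tally_changes(score_pairs):
    """Count participants per category from (pre, post) score pairs."""
    return Counter(classify_change(pre, post) for pre, post in score_pairs)


# Hypothetical (pre, post) pairs, invented for illustration.
# These are NOT the study data.
example_pairs = [(3, 4), (4, 4), (5, 3), (2, 5)]

for category, count in tally_changes(example_pairs).items():
    print(f"{category}: {count}")
```

A tally of this kind treats a participant who scored full marks in both tests as "no change", which is why the text notes that case separately.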

C. Level 3: Behavioural Change

Six participants responded to the follow-up questionnaire, which is openly available in Figshare at https://doi.org/10.6084/m9.figshare.20186591.v2 (Md Hashim, 2022). This questionnaire was administered about a year after the examiner training programme and after the completion of two examinations. Only one respondent did not make any self-perceived behavioural change while preparing the examination questions and conducting the viva examinations. Two respondents did not make any changes while marking or rating candidates.

The specific changes in the three areas of behavioural change that were consciously noted by the respondents were explored. Respondents reported increased awareness and being more systematic in question preparation, making questions more aligned to the curriculum, preparing better quality questions, and being more cognizant of candidates’ understanding of the questions.

They also reported being more objective and guided during marking and rating as the passing criteria were better defined and structured.

Regarding the conduct of the viva examination, respondents shared that they were better prepared during vetting and felt it was easier to rate candidates, as the marking schemes and questions were standardised and they could ensure that candidates had the opportunity to answer all the questions required to pass.

D. Level 4: Results

The examiners who attended the training programme were able to prepare questions as blueprinted, identify areas to be examined and provide recommended criteria for passing each question. This has led to a smooth vetting process and examination.

E. Factors Affecting Effectiveness

Even though the programme was not attended by all the potential examiners, those who did were committed to the idea of a fairer and more transparent examination process. This formed the motivation aspect of the model.

In terms of opportunity, protected training time is important, followed by prioritising the content of the training material according to the most pressing needs.

The ability aspect encompassed the abilities of the facilitators and participants. To emphasise the learning process, credible trainers were invited to this programme to facilitate the lectures and experiential learning sessions. In this aspect, the Faculty Development team comprised an experienced clinician, a basic medical scientist, and an anaesthesiologist, all of whom held medical education qualifications and were vital in ensuring the success of this programme. The whole team was led by the Chief Examiner, who focused on the dimensions to be tested and calibrated, while simultaneously managing the expectations of the examiners and their abilities to give and accept feedback. Communication and the skill to be receptive to the proposed changes were also crucial to make the intervention work.

In terms of communality, all the participants were of similar professional backgrounds and shared the common realisation that this training programme was essential and would only yield positive results. Hence this ensured the programme’s overall success.

IV. DISCUSSION

The progressive change seen in this attempt to improve the examination system is aligned with the general progress in medical education. Training of examiners is important (Holmboe et al., 2011), as it is not the tool used for assessment, but rather the person using the tool, that makes the difference. As it is difficult to design the ‘perfect tool’ for performance tests and redesigning a tool only changes 10% of the variance in rating (Holmboe et al., 2011; Williams et al., 2003), educators must now train the faculty in observation and assessment. It is not unreasonable to extrapolate this effect to written and oral examinations. Holmboe et al. (2011) also share the reasons for a training programme for assessors: changing curriculum structure, content and delivery, and emerging evidence regarding assessment; building a system reserve; utilising training programmes as opportunities to identify and engage change agents; and allowing the faculty to form a mental picture of how changes will affect them and improve practice. Enlisting the help and support of a respected faculty member during training will promote the depth and breadth of change.

Khera et al. (2005) described their paediatric examination experiences, in which the Royal College of Paediatrics and Child Health defined examiners’ competencies, selection process and training programme components. The training programme included principles of assessment, examination design, writing questions, interpersonal skills, professional attributes, managing diversity, and assessing the examiners’ skills. They believe these contents will ensure the assessment is valid, reliable, and fair. As Anaesthesiology examiners have different knowledge levels and experiences, it was crucial to assess their learning needs and provide them with appropriate learning opportunities.

In the emergency brought on by the COVID-19 pandemic, online training was the safest and most feasible platform for conducting this programme. Online faculty development activities have the perceived advantages of being convenient and flexible, allowing interdisciplinary interaction, and providing an experience of being an online student (Cook & Steinert, 2013). Forming the facilitation team together with the dedicated technical and logistics team and creating a chat group prior to conducting the programme were key in anticipating and handling communication and technical issues (Cook & Steinert, 2013).

Though participants were engaged and the results of the workshop were encouraging, the programme delivery and the content will be reviewed based on the feedback received. The convenience of an online activity must be balanced with the participant engagement and facilitator presence of a face-to-face activity. Since the results of the two methods of delivery differ (Arias et al., 2018; Daniel, 2014; Kemp & Grieve, 2014), the best solution may be to ask the participants what would work best for them, as they are adult learners and experienced examiners. The programme must be designed with participants’ involvement, with opportunities to participate, and with engaging facilitators and support teams that are able to support the participants’ learning needs (Singh et al., 2022).

At the end of the programme, the effectiveness of the programme was measured by referencing the Kirkpatrick model. The Kirkpatrick model (Newstrom, 1995; Steinert et al., 2006) was the most helpful in identifying the success of the intervention, which included behavioural change. Measuring behavioural change and impact on the examination results, organisational changes and changes in student learning may be difficult, and these may not be directly caused by a single intervention (McLean et al., 2008). The key is perhaps to involve examiners, students and other stakeholders in the evaluation process, using various validated tools, and to ensure that the effort is ongoing, with sustained support, guidance and feedback (McLean et al., 2008).

To explain the overall effectiveness of the programme (with regard to reaction, learning and behavioural change), the MOAC model (Vollenbroek, 2019), expanded from the original MOA model, was used. The MOAC model describes not only factors that affect an individual’s performance in a group, but also the group behaviour.

Motivation is an important driving force of action, and members are more motivated when a subject becomes relevant on a personal level, leading to action. The motivation to be informed and to improve has led to active participation in the knowledge sharing session, processing new information presented in the programme and adopting changes learnt during the programme. Having a group of motivated individuals with the same goals meant that participants supported each other’s learning.

Opportunity, especially time, space and resources, must be allocated to reflect the value and relevance of any activity. Work autonomy allows professionals to engage in what they consider relevant or important, and be accountable for their work outcomes. Facilitating conditions, for example, technology, facilitators, and a platform to practise what is being learnt, are also important aspects of opportunity. Allowing protected time with the appropriate facilitating conditions indicates institutional support and has enabled participants to fully optimise the learning experience.

Ability positively affects knowledge exchange and willingness to participate. Having prior knowledge improves a participant’s ability to absorb and utilise new knowledge. The programme participants, being experienced clinical teachers and examiners, are fully aware of their capabilities and are able to process and share important information. Experienced faculty development facilitators who are also clinical teachers and examiners were able to identify areas to focus on and provide relevant examples for application.

Communality is the added dimension to the original MOA model. Participants of this programme are members of a complex system who already know each other. Having a shared identity, language and challenges has allowed them to develop trust while pursuing the common goal of improving the system they were working in. This facilitated knowledge sharing and behavioural change.

A limitation of our programme is the small sample size. However, we believe that it is important to review the effectiveness of a programme, especially with regard to behavioural change, and to share how other programmes can benefit from using the frameworks we shared. The findings from this programme will also inform how we conduct future faculty development programmes. With pandemic restrictions lifted, we hope to conduct this programme face-to-face, to facilitate engagement and communication.

V. CONCLUSION

For this faculty development programme to succeed, targets for success must first be defined and factors that contribute to its success need to be identified. This will ensure active engagement from the participants and promote the sustainability of the programme.

Notes on Contributors

Noorjahan Haneem Md Hashim designed the programme, assisted in content creation, curation and matching learning activities, moderated the programme, and conceptualised and wrote this manuscript.

Shairil Rahayu Ruslan participated as a committee member of the programme, assisted as a simulated candidate during the training sessions, and contributed to the conceptualisation, writing, and formatting of this manuscript. She also compiled the bibliography and cross-checked the references for this manuscript.

Ina Ismiarti Shariffuddin created the opportunity for the programme (Specialty board and interdisciplinary buy-in, department funding), prioritised the programme learning outcomes, chaired the programme, and contributed to the writing and review of this manuscript.

Woon Lai Lim participated as a committee member of the programme and contributed to the writing of this manuscript.

Christina Phoay Lay Tan designed and conducted the faculty development training programme, and reviewed and contributed to the writing of this manuscript. She also cross-checked the references for this manuscript.

Vinod Pallath designed and conducted the faculty development training programme, and reviewed and contributed to the writing of this manuscript.

All authors verified and approved the final version of the manuscript.

Ethical Approval

Ethical approval was sought for the follow-up questionnaire distributed to the participants and was granted on 6 May 2022 (Reference number: UM.TNC2/UMREC_1879). The programme evaluation and pre- and post-tests are accepted as part of the programme evaluation procedures.

Data Availability

De-identified individual participant data collected are available in the Figshare repository immediately after publication without an end date, as below:

https://doi.org/10.6084/m9.figshare.20189309.v1

https://doi.org/10.6084/m9.figshare.20186582.v1

https://doi.org/10.6084/m9.figshare.20186591.v2

The authors confirm that all data underlying the findings are freely available for viewing from the Figshare data repository. However, although the programme evaluation form, pre- and post-test questions, and follow-up questionnaire are easily accessible from the data repository, their reuse and resharing should be preceded by a reasonable request to the corresponding author, as a courtesy.

Acknowledgement

The authors would like to acknowledge Dr Selvan Segaran and Dr Siti Nur Jawahir Rosli from the Medical Education, Research and Development Unit (MERDU) for their logistics and technical support in all stages of this programme; Professor Dr Jamuna Vadivelu, Head, MERDU for her insight and support; Dr Nur Azreen Hussain and Dr Wan Aizat Wan Zakaria from the Department of Anaesthesiology, UMMC and UM, for their acting skills in the training videos; and the Visibility and Communication Unit, Faculty of Medicine, Universiti Malaya for their video editing services.

Funding

There is no funding source for this manuscript.

Declaration of Interest

There are no conflicts of interest among the authors of this manuscript.

References

Arias, J. J., Swinton, J., & Anderson, K. (2018). Online vs. face-to-face: A comparison of student outcomes with random assignment. E-Journal of Business Education & Scholarship of Teaching, 12(2), 1–23. https://eric.ed.gov/?id=EJ1193426

Blew, P., Muir, J. G., & Naik, V. N. (2010). The evolving Royal College examination in anesthesiology. Canadian Journal of Anesthesia/Journal canadien d’anesthésie, 57(9), 804-810. https://doi.org/10.1007/s12630-010-9341-1

Blumberg, M., & Pringle, C. D. (1982). The missing opportunity in organizational research: Some implications for a theory of work performance. The Academy of Management Review, 7(4), 560–569. https://doi.org/10.2307/257222

Cook, D. A., & Steinert, Y. (2013). Online learning for faculty development: A review of the literature. Medical Teacher, 35(11), 930–937. https://doi.org/10.3109/0142159X.2013.827328

Daniel, C. M. (2014). Comparing online and face-to-face professional development [Doctoral dissertation, Nova Southeastern University]. https://doi.org/10.13140/2.1.3157.5042

Feldman, M., Lazzara, E. H., Vanderbilt, A. A., & DiazGranados, D. (2012). Rater training to support high-stakes simulation-based assessments. Journal of Continuing Education in the Health Professions, 32(4), 279–286. https://doi.org/10.1002/chp.21156

Holmboe, E. S., Ward, D. S., Reznick, R. K., Katsufrakis, P. J., Leslie, K. M., Patel, V. L., Ray, D. D., & Nelson, E. A. (2011). Faculty development in assessment: The missing link in competency-based medical education. Academic Medicine, 86(4), 460–467. https://doi.org/10.1097/ACM.0b013e31820cb2a7

Iqbal, I., Naqvi, S., Abeysundara, L., & Narula, A. (2010). The value of oral assessments: A review. The Bulletin of the Royal College of Surgeons of England, 92(7), 1–6. https://doi.org/10.1308/147363510X511030

Juul, D., Yudkowsky, R., & Tekian, A. (2019). Oral Examinations. In R. Yudkowsky, Y. S. Park, & S. M. Downing (Eds.), Assessment in Health Professions Education. Routledge. https://doi.org/10.4324/9781315166902-8

Kemp, N., & Grieve, R. (2014). Face-to-face or face-to-screen? Undergraduates’ opinions and test performance in classroom vs. online learning. Frontiers in Psychology, 5. https://doi.org/10.3389/fpsyg.2014.01278

Khera, N., Davies, H., Davies, H., Lissauer, T., Skuse, D., Wakeford, R., & Stroobant, J. (2005). How should paediatric examiners be trained? Archives of Disease in Childhood, 90(1), 43–47. https://doi.org/10.1136/adc.2004.055103

Lineberry, M. (2019). Validity and quality. Assessment in Health Professions Education, 17-32. https://doi.org/10.4324/9781315166902-2

Marin-Garcia, J. A., & Martinez Tomas, J. (2016). Deconstructing AMO framework: A systematic review. Intangible Capital, 12(4), 1040. https://doi.org/10.3926/ic.838

McLean, M., Cilliers, F., & Van Wyk, J. M. (2008). Faculty development: Yesterday, today and tomorrow. Medical Teacher, 30(6), 555–584. https://doi.org/10.1080/01421590802109834

Md Hashim, N. H. (2021). Pre- and Post-test [Dataset]. Figshare. https://doi.org/10.6084/m9.figshare.20186582.v1

Md Hashim, N. H. (2022). Followup Questionnaire [Dataset]. Figshare. https://doi.org/10.6084/m9.figshare.20186591.v2

Newman, L. R., Brodsky, D., Jones, R. N., Schwartzstein, R. M., Atkins, K. M., & Roberts, D. H. (2016). Frame-of-reference training: Establishing reliable assessment of teaching effectiveness. Journal of Continuing Education in the Health Professions, 36(3), 206–210. https://doi.org/10.1097/CEH.0000000000000086

Newstrom, J. W. (1995). Evaluating training programs: The four levels, by Donald L. Kirkpatrick. (1994). San Francisco: Berrett-Koehler. 229 pp., $32.95 cloth. Human Resource Development Quarterly, 6(3), 317–320. https://doi.org/10.1002/hrdq.3920060310

Singh, J., Evans, E., Reed, A., Karch, L., Qualey, K., Singh, L., & Wiersma, H. (2022). Online, hybrid, and face-to-face learning through the eyes of faculty, students, administrators, and instructional designers: Lessons learned and directions for the post-vaccine and post-pandemic/COVID-19 World. Journal of Educational Technology Systems, 50(3), 301–326. https://doi.org/10.1177/00472395211063754

Steinert, Y., Mann, K., Anderson, B., Barnett, B. M., Centeno, A., Naismith, L., Prideaux, D., Spencer, J., Tullo, E., Viggiano, T., Ward, H., & Dolmans, D. (2016). A systematic review of faculty development initiatives designed to enhance teaching effectiveness: A 10-year update: BEME Guide No. 40. Medical Teacher, 38(8), 769–786. https://doi.org/10.1080/0142159x.2016.1181851

Steinert, Y., Mann, K., Centeno, A., Dolmans, D., Spencer, J., Gelula, M., & Prideaux, D. (2006). A systematic review of faculty development initiatives designed to improve teaching effectiveness in medical education: BEME Guide No. 8. Medical Teacher, 28(6), 497–526. https://doi.org/10.1080/01421590600902976

Tan, C. P. L., & Pallath, V. (2022). Workshop Evaluation Form [Dataset]. Figshare. https://doi.org/10.6084/m9.figshare.20189309.v1

Tekian, A., & Norcini, J. J. (2016). Faculty development in assessment: What the faculty need to know and do. In M. Mentkowski & P. F. Wimmers (Eds.), Assessing Competence in Professional Performance across Disciplines and Professions (1st ed., pp. 355–374). Springer Cham. https://doi.org/10.1007/978-3-319-30064-1

Thomas, P. A., Kern, D. E., Hughes, M. T., & Chen, B. Y. (2015). Curriculum development for medical education: A six-step approach. Johns Hopkins University Press. https://jhu.pure.elsevier.com/en/publications/curriculum-development-for-medical-education-a-six-step-approach

Vollenbroek, W. B. (2019). Communities of Practice: Beyond the Hype – Analysing the Developments in Communities of Practice at Work [Doctoral dissertation, University of Twente]. https://doi.org/10.3990/1.9789036548205

Williams, R. G., Klamen, D. A., & McGaghie, W. C. (2003). Cognitive, social and environmental sources of bias in clinical performance ratings. Teaching and Learning in Medicine, 15(4), 270–292. https://doi.org/10.1207/S15328015TLM1504_11

*Shairil Rahayu Ruslan

50604, Kuala Lumpur,

Malaysia

03-79492052 / 012-3291074

Email: shairilrahayu@gmail.com, shairil@ummc.edu.my
