The predictive brain model in diagnostic reasoning

Submitted: 4 August 2020

Accepted: 14 October 2020

Published online: 4 May, TAPS 2021, 6(2), 1-8

https://doi.org/10.29060/TAPS.2021-6-2/RA2370

Tow Keang Lim

Department of Medicine, National University Hospital, Singapore

Abstract

Introduction: Clinical diagnosis is a pivotal and highly valued skill in medical practice. Most current interventions for teaching and improving diagnostic reasoning are based on the dual process model of cognition. Recent studies which have applied the popular dual process model to improve diagnostic performance by “Cognitive De-biasing” in clinicians have yielded disappointing results. Thus, it may be appropriate to also consider alternative models of cognitive processing in the teaching and practice of clinical reasoning.

Methods: This is a critical-narrative review of the predictive brain model.

Results: The theory of predictive brains is a general, unified and integrated model of cognitive processing based on recent advances in the neurosciences. The predictive brain is characterised as an adaptive, generative, energy-frugal, context-sensitive, action-orientated, probabilistic, predictive engine. It responds only to prediction errors and learns by iterative prediction error processing and hierarchical neural coding.

Conclusion: The default cognitive mode of predictive processing may account for the failure of de-biasing, since de-biasing is not thermodynamically frugal and thus may not be sustainable in routine practice. Exploiting predictive brains by employing language to optimise metacognition may be a way forward.

Keywords: Diagnosis, Bias, Dual Process Theory, Predictive Brains

Practice Highlights

- According to the dual process model of cognition, diagnostic errors are caused by biased reasoning.

- Interventions to improve diagnosis based on “Cognitive De-biasing” methods report disappointing results.

- The predictive brain is a unified model of cognition which accounts for diagnostic errors and the failure of “Cognitive De-biasing”, and may point to effective solutions.

- Using appropriate language as simple rules of thumb to fine-tune predictive processing metacognitively may be a practical strategy to improve diagnostic problem solving.

I. INTRODUCTION

Clinical diagnostic expertise is a critical, highly valued and admired skill (Montgomery, 2006). However, diagnostic errors are common and important adverse events which merit research and effective prevention (Gupta et al., 2017; Singh et al., 2014; Skinner et al., 2016). It is now widely recognised that concerted efforts are required to improve the research, training and practice of clinical reasoning in order to improve diagnosis (Simpkin et al., 2017; Singh & Graber, 2015; Zwaan et al., 2013). The consensus among practitioners, researchers and preceptors is that most preventable diagnostic errors are associated with biased reasoning during rapid, non-analytical, default cognitive processing of clinical information (Croskerry, 2013). The most widely held theory which accounts for this observation is the dual process model of cognition (B. Djulbegovic et al., 2012; Evans, 2008; Schuwirth, 2017). It posits that most diagnostic errors reside in intuitive, non-analytical or system 1 thinking (Croskerry, 2009). The logical, practical and common-sense implication of this assumption is that we should activate and apply analytical or system 2 thinking to counter-check or “de-bias” system 1 errors (Croskerry, 2009). This is a popular notion, and it has fostered many schools of clinical reasoning built on training methods designed to deliberately understand, recognise, categorise and avoid specific diagnostic errors arising from system 1 thinking or cognitive bias (Reilly et al., 2013; Rencic et al., 2017; Restrepo et al., 2020). However, careful research on the merits of these interventions under controlled conditions does not show consistent or clear benefits (G. Norman et al., 2014; G. R. Norman et al., 2017; O’Sullivan & Schofield, 2019; Sherbino et al., 2014; Sibbald et al., 2019; J. N. Walsh et al., 2017).
Moreover, even the recognition and categorisation of these cognitive error events are deeply confounded by hindsight bias (Zwaan et al., 2016). At this juncture, it might therefore be appropriate to consider alternative models of cognition based on advances in multidisciplinary neuroscience research, which has expanded greatly in recent years (Monteiro et al., 2020).

Over the past decade, the theory of predictive brains has emerged as an ambitious, unified, convergent and integrated model of cognitive processing, drawing on research in a wide variety of core domains which include philosophy, metaphysics, cellular physics, thermodynamics, associative learning theory, Bayesian probability theory, information theory, machine learning, artificial intelligence, behavioural science, neuro-cognition, neuro-imaging, constructed emotions and psychiatry (Bar, 2011; Barrett, 2017a; Barrett, 2017b; Clark, 2016; Friston, 2010; Hohwy, 2013; Seligman, 2016; Teufel & Fletcher, 2020). It may have profound and practical implications for how we live, work and learn. However, to my knowledge, there is almost no discussion of this novel proposition in either medical education pedagogy or research. Thus, in this review I will examine recent developments in the predictive brain model of cognition, map those of its key elements which impact on pedagogy and research in medical education, and propose an application to the training of diagnostic reasoning.

An early version of this work was presented as an abstract (Lim & Teoh, 2018).

II. METHODS

This is a critical-narrative review of the predictive brain model, from Friston’s free-energy principle, proposed a decade ago, to more recent critical examinations of the supporting evidence from neurophysiological studies over the past 5 years (Friston, 2010; K. S. Walsh et al., 2020).

III. RESULTS

A. The Brain is a Frugal Predictive Engine

General references: (Bar, 2011; Barrett, 2017a; Barrett, 2017b; Clark, 2013; Clark, 2016; Friston, 2010; Gilbert & Wilson, 2007; Hohwy, 2013; Seligman, 2016; Seth et al., 2011; Sterling, 2012).

In contrast with traditional bottom-up, feed-forward models of cognition, the predictive brain model inverts this process. Perception is characterised as an entirely inferential, rapidly adaptive, generative, energy-frugal, context-sensitive, action-orientated, probabilistic, predictive process (Tschantz et al., 2020). This system is governed by the need to respond rapidly to ever-changing demands from the external environment and from our body’s internal physiological signals (interoception), while minimising free energy expenditure (or waste) (Friston, 2010; Kleckner et al., 2017; Sterling, 2012). Thus, it is not passive and reactive to new information but predictive and continuously proactive. From very early, elemental and sparse cues, it continuously generates predictive representations based on remembered similar experiences, which may include simulations. It performs iterative matching of top-down prior representations with bottom-up signals and cues in a hierarchy of categories of abstraction and content specificity over scales of space and time (Clark, 2013; Friston & Kiebel, 2009; Spratling, 2017a). This matching process is also sensitive to variations in context and thus enables us to make sense of rapidly changing and complex situations (Clark, 2016).

Cognitive resources, in terms of allocated attention, are focused only on managing errors in prediction, that is, the mismatch between prior representations and newly emerging information. The system seeks to minimise prediction errors (PEs), and when this is achieved there is repetitive, recognition-expectation-based signal suppression. Thus, this is a system which responds only to unfamiliar situations, or to what it considers newsworthy. This is analogous to Claude Shannon’s classic analysis of “surprisal” in information theory (Shannon et al., 1993). Learning is based on generating new predictive representations and coding them neurally in memory. The most direct and powerful evidence for this process comes from optogenetic experiments which, with their exquisitely high spatiotemporal resolution in monitoring and manipulating neuronal signalling and behaviour in freely foraging rats, show causal links between PE, dopamine neurons and learning (Nasser et al., 2017; Steinberg et al., 2013).
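Shannon’s surprisal makes the notion of a “newsworthy” signal concrete: an event of probability p carries -log2(p) bits of information, so an observation the current model expects carries little information while an improbable one carries a lot. The minimal Python sketch below only illustrates that formula; the two probabilities are arbitrary assumptions standing in for how strongly a current illness script predicts a finding, not empirical values.

```python
import math


def surprisal_bits(p: float) -> float:
    """Shannon surprisal (information content) of an event with probability p, in bits."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(p)


# Illustrative assumptions only: how strongly a current model predicts two findings.
expected_finding = 0.9      # a feature the model strongly predicts
unexpected_finding = 0.05   # a feature the model barely allows

print(f"expected:   {surprisal_bits(expected_finding):.2f} bits")    # 0.15 bits
print(f"unexpected: {surprisal_bits(unexpected_finding):.2f} bits")  # 4.32 bits
```

The expected finding carries roughly a thirtieth of the information of the unexpected one, which is the sense in which only surprises are “worth” attending to.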

The brain intrinsically generates representations of the world in which it finds itself from past experience, and these are refined by sensory data. New sensory information is represented and inferred in terms of these known causes. Determining which combination of the many possible causes best fits the current sensory data is achieved by minimising the error between the sensory data and the sensory inputs predicted by the expected causes, i.e. the PE. In the service of PE reduction, the brain will also generate motor actions such as saccadic eye movements and foraging behaviour. The prediction arises from a process of “backwards thinking”, or inferential Bayesian best guess or approximation, based simultaneously on sensory data and prior experience (Chater & Oaksford, 2008; Kersten et al., 2004; Kwisthout et al., 2017a; Kwisthout et al., 2017b; Ting et al., 2015). It is a hierarchical predictive coding process reflecting the serial organisation of the neuronal architecture of the cerebral cortex: higher levels are abstract, whereas the lowest level amounts to a prediction of the incoming sensory data (Kolossa et al., 2015; Shipp, 2016; Ting et al., 2015). The actual sensory data are compared with the predicted sensory data, and it is the discrepancies, or ‘errors’, that ascend the hierarchy to refine all higher levels of abstraction in the model. Thus, this is a learning process whereby, with each iteration, the model representations are optimised and encoded in long-term memory as PEs are minimised (Friston et al., 2017; Spratling, 2017b).
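The iterative inference described above can be illustrated with a deliberately simplified, single-level Python sketch, assuming Gaussian beliefs and an identity mapping from the hidden cause to the predicted sensory input. A belief `mu` starts at the prior (top-down) expectation and is nudged by gradient descent on the sum of two squared prediction errors, each weighted by its inverse variance: one between the data and the predicted data, and one between `mu` and the prior. This is a generic toy in the spirit of the predictive coding schemes cited above, not the author’s model or any specific published implementation; the function name, learning rate and values are hypothetical.

```python
def infer_by_prediction_error(x, prior_mean, var_sensory, var_prior, lr=0.1, steps=200):
    """Refine a belief mu by descending
    F(mu) = (x - mu)**2 / (2 * var_sensory) + (mu - prior_mean)**2 / (2 * var_prior).
    Returns the final belief and the size of the sensory prediction error at each step.
    """
    mu = prior_mean  # start from the top-down prediction
    history = []
    for _ in range(steps):
        sensory_pe = x - mu         # bottom-up error: data minus predicted data
        prior_pe = mu - prior_mean  # deviation from the higher-level expectation
        history.append(abs(sensory_pe))
        # Gradient step on F: each error is weighted by its inverse variance (precision).
        mu += lr * (sensory_pe / var_sensory - prior_pe / var_prior)
    return mu, history


mu, history = infer_by_prediction_error(x=2.0, prior_mean=0.0, var_sensory=1.0, var_prior=1.0)
print(round(mu, 3), history[0], round(history[-1], 3))  # 1.0 2.0 1.0
```

With equally reliable data and prior, the belief settles halfway between them, which is the Bayesian posterior mean for this case. The sensory prediction error shrinks from 2.0 and settles where it is balanced against the prior error rather than vanishing.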

This system of neural responses is regulated and fine-tuned by varying the gain, or weighting, given to the reliability (or precision) of the PE estimate itself. In other words, it is the level of confidence (versus uncertainty) in the PE which determines the intensity of attention allocated to it and the strength of its coding in memory after it is resolved (Clark, 2013; Clark, 2016; Feldman & Friston, 2010; Hohwy, 2013). This regulatory, neuromodulatory process is shaped by the continuous cascade of action-relevant information, which is sensitive to external context, to internal interoceptive signals (i.e. the perception of our own physiological responses) and to affective signals (Clark, 2016). This metacognitive capacity to manipulate and recalibrate the precision of PE may be a critical aspect of decision making, problem solving and learning (Hohwy, 2013; Picard & Friston, 2014).
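Precision weighting can be written out explicitly for the simplest case, a single Gaussian belief updated by one Gaussian observation. The prediction error is scaled by a gain equal to the observation’s share of the total precision, so the same error moves the belief a great deal when the signal is trusted and little when it is not. This is a standard Bayesian (Kalman-style) update offered as an analogy under that Gaussian assumption, not a model of neural circuitry; the function name and numbers are hypothetical.

```python
def precision_weighted_update(prior_mean, prior_precision, observation, sensory_precision):
    """Gaussian belief update written as: posterior = prior + gain * prediction error."""
    prediction_error = observation - prior_mean
    # The gain is the share of total precision contributed by the observation.
    gain = sensory_precision / (sensory_precision + prior_precision)
    posterior_mean = prior_mean + gain * prediction_error
    # Precisions add, so confidence always increases after an update.
    posterior_precision = prior_precision + sensory_precision
    return posterior_mean, posterior_precision, gain


# Same prediction error (observation 10 vs prior 0), different reliability of the signal.
for label, pi_s in (("reliable signal", 4.0), ("unreliable signal", 0.25)):
    mean, precision, gain = precision_weighted_update(0.0, 1.0, 10.0, pi_s)
    print(f"{label}: gain={gain:.1f}, posterior mean={mean:.1f}")
```

Here the same prediction error of 10 units yields a gain of 0.8 (posterior mean 8.0) when the observation is four times as precise as the prior, but only 0.2 (posterior mean 2.0) when it is four times less precise.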

B. Clinical Reasoning is Predictive Error Processing and Learning is Predictive Coding

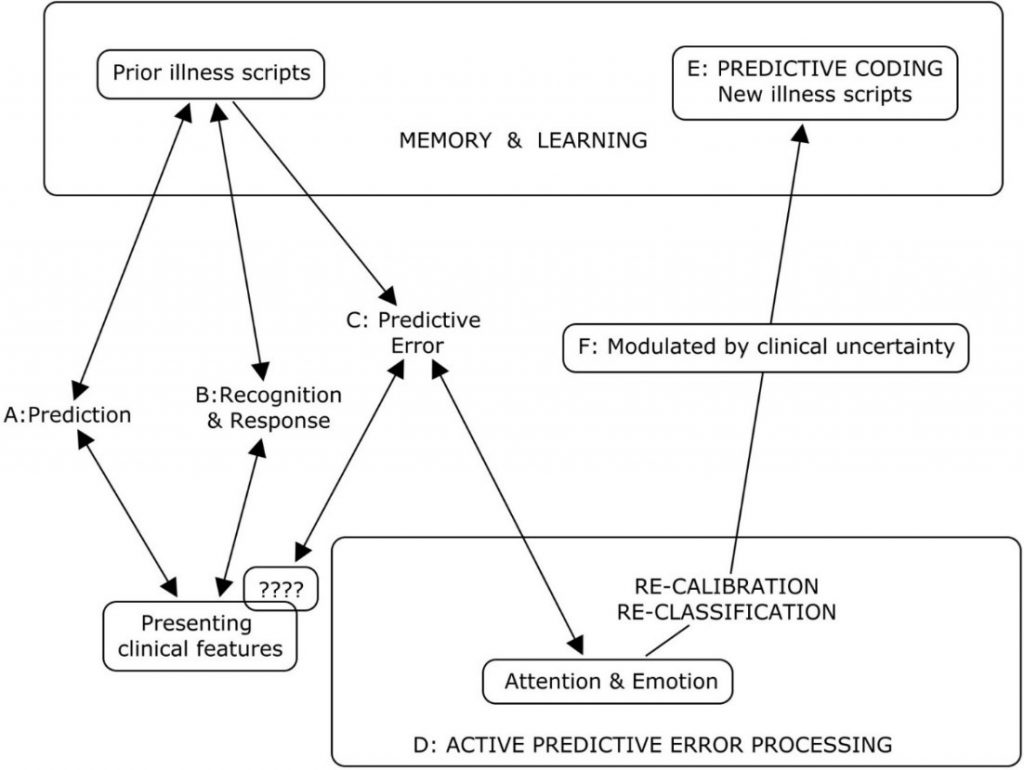

The core processes of the predictive brain which are engaged during diagnostic reasoning are summarised in Table 1 and Figure 1.

| Core features of the predictive brain model | Clinical reasoning features and processes |
|---|---|
| The frugal brain and free energy principle (Friston, 2010) | Cognitive load in problem solving (Young et al., 2014) |
| Iterative matching of top-down priors vs bottom-up signals | Inductive foraging (Donner-Banzhoff & Hertwig, 2014; Donner-Banzhoff et al., 2017) |
| Prediction error processing | Pattern recognition in diagnosis |
| Recognition-expectation-based signal suppression | Premature closure (Blissett & Sibbald, 2017; Melo et al., 2017) |
| Hierarchical prediction error coding as learning | Development of illness scripts (Custers, 2014) |
| Probabilistic-Bayesian inferential approximations | Bayesian inference in clinical reasoning |
| Context sensitivity | Contextual factors in diagnostic errors (Durning et al., 2010) |
| Action orientation | Foraging behaviour in clinical diagnosis (Donner-Banzhoff & Hertwig, 2014; Donner-Banzhoff et al., 2017) |
| Interoception and affect in prediction error management | Gut feel and regret (metacognition) |
| The precision (reliability/uncertainty) of prediction errors | Clinical uncertainty (metacognition) (Bhise et al., 2017; Simpkin & Schwartzstein, 2016) |

Table 1: Core features of the predictive brain model of cognition manifested as clinical reasoning processes

Legend to Figure 1

A summary of the cognitive processes engaged by the predictive brain model during clinical diagnosis

A: Active search for diagnostic clues based on prior experience of similar patients in similar situations.

B: Recognition of key features activates a series of familiar illness scripts from long-term memory to match with the new case. If this is successful, a diagnosis is made and any prediction error signals are rapidly silenced.

C & D: When the illness scripts do not match the presenting features, cognition slows down, attention is heightened and further searches are made for additional matching clues and illness scripts. This is iterated until a satisfactory match is found or a new illness script is generated to account for the mismatch.

E: The new variation in the presenting features for that disease is then encoded in memory as a new illness script, and thus constitutes a valuable learning moment.

F: The degree of uncertainty, or level of confidence, in matching key presenting features to a diagnosis is a metacognitive skill and a critical aspect of expertise in clinical diagnosis. This corresponds to the precision, or gain/weighting, of prediction errors (metacognition) in the predictive brain model.

Figure 1: A summary of the cognitive processes engaged by the predictive brain model during clinical diagnosis

Thermodynamic frugality is a central feature of the predictive brain model, and in this system the primacy of attending only to surprises or PEs is pivotal (Friston, 2010). This might be regarded as an energy-efficient strategy for coping with cognitive load, which has long been recognised as an important consideration in clinical problem solving and learning (Van Merrienboer & Sweller, 2010; Young et al., 2014).

From the first moments of a diagnostic encounter, the clinician is alert to clues which might point to the diagnosis and begins to generate possible diagnostic scenarios and simulations based on her prior experience of similar patients and situations (Donner-Banzhoff & Hertwig, 2014). This process is iterative and, from a scanty set of presenting features, a plausible diagnosis may be considered within seconds to minutes (Donner-Banzhoff & Hertwig, 2014; Donner-Banzhoff et al., 2017). A familiar illness script is thus activated from long-term memory to match with the new case (Custers, 2014). If this is successful, a particular diagnosis is recognised and any PE signal is rapidly silenced. Functional MRI studies of clinicians during this process showed that highly salient diagnostic information, which reduces uncertainty about the diagnosis, rapidly decreased monitoring activity in the frontoparietal attentional network; this may contribute to premature diagnostic closure, an important cause of diagnostic errors (Melo et al., 2017). This may be considered a form of diagnosis- or recognition-related PE signal suppression, analogous to the well-known phenomenon of repetition suppression (Blissett & Sibbald, 2017; Bunzeck & Thiel, 2016; Krupat et al., 2017).

In cases where the illness scripts do not match the presenting features, a PE event is encountered: cognition slows down, attention is heightened and further searches are made for additional matching clues and illness scripts (Custers, 2014). This is iterated until a satisfactory match is found or a new illness script is generated to account for the mismatch. This is then encoded in memory as a new variation in the presenting features for that disease and thus constitutes a valuable learning moment. Bayesian inference is a fundamental feature of both clinical diagnostic reasoning and the predictive brain model (Chater & Oaksford, 2008).
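Bayesian inference in diagnosis is often taught in its odds form: post-test odds equal pre-test odds multiplied by the likelihood ratio of a finding. The short Python sketch below applies that rule to one supportive and one counter-diagnostic finding. The pre-test probability and likelihood ratios are invented for illustration and do not describe any real disease or test.

```python
def post_test_probability(pre_test_prob: float, likelihood_ratio: float) -> float:
    """Update a diagnostic probability with Bayes' theorem in odds form."""
    pre_odds = pre_test_prob / (1.0 - pre_test_prob)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1.0 + post_odds)


# Hypothetical numbers for illustration only.
p = 0.20                            # pre-test probability of the leading diagnosis
p = post_test_probability(p, 4.0)   # a supportive finding (likelihood ratio 4)
print(f"after supportive finding: {p:.2f}")
p = post_test_probability(p, 0.25)  # a counter-diagnostic finding (likelihood ratio 0.25)
print(f"after counter-diagnostic finding: {p:.2f}")
```

The probability rises from 0.20 to 0.50 and then falls back to 0.20: a single counter-diagnostic finding of matching strength can fully offset a supportive one, which is easy to overlook once a diagnosis feels settled.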

As in the predictive brain model, external contextual factors and internal emotional and physiological responses, such as gut feeling and regret, exert profound effects on clinical decision making (M. Djulbegovic et al., 2015; Durning et al., 2010; Stolper & van de Wiel, 2014; Stolper et al., 2014). Likewise, the active inductive foraging for diagnostic clues described in experienced primary care physicians is analogous to behaviour directed at reducing PEs (Donner-Banzhoff & Hertwig, 2014; Donner-Banzhoff et al., 2017). The precision, or gain/weighting, of PEs is manifested metacognitively as uncertainty or levels of confidence in clinical reasoning (Sandved-Smith et al., 2020). Metacognition is a critical capacity and area of expertise in effective decision making (Bhise et al., 2017; Fleming & Frith, 2014; Simpkin & Schwartzstein, 2016).

C. Why Applying the Dual Process Model May Not Improve Clinical Reasoning

Recent studies which have applied the popular dual process model to improve diagnostic performance by “cognitive de-biasing” in clinicians have yielded disappointing results (G. R. Norman et al., 2017). Predictive processing, as the brain’s dominant, default mode of operation, may account for this setback, since de-biasing is not naturalistic and requires retrospective “off-line” processing after the monitoring salience network has already shut off (Krupat et al., 2017; Melo et al., 2017). De-biasing is not thermodynamically frugal and thus may not be sustainable in routine practice (Friston, 2010; Young et al., 2014). Even Daniel Kahneman admits that, despite decades of research on cognitive bias, he is unable to exert agency in the moment and de-bias himself (Kahneman, 2013). This is likely to be even more so for novice diagnosticians in training, who have scanty illness scripts and limited tolerance of further cognitive load (Young et al., 2014). The failure of clinicians even to identify cognitive biases reliably, owing to hindsight bias itself, suggests that this intervention is likely to be the least effective one in improving diagnostic reasoning (Zwaan et al., 2016).

D. Using Words to Fine Tune the Precision of Diagnostic Prediction Error

Daniel Kahneman, the foremost expert on cognitive bias, cautions that, contrary to what some experts in medical education advise (Restrepo et al., 2020), avoiding bias is ineffective in improving decision making under uncertainty. Instead, he suggests that we apply simple, common-sense rules of thumb (Kahneman et al., 2016). I hypothesise that instructing clinical trainees to use appropriate words-to-self in the diagnostic setting, during active, naturalistic PE processing before the diagnosis is made rather than as a retrospective counter-check afterwards, may be a way forward (Betz et al., 2019; Clark, 2016; Lupyan, 2017). In a multi-centre, iterative thematic content analysis of over 2,000 cases of diagnostic error using a structured taxonomy, Schiff and colleagues identified a limited number of pitfall themes which were overlooked and which predisposed physicians to reasoning errors (Reyes Nieva et al., 2017). These included three themes of particular interest in relation to naturalistic PE processing, namely: (1) counter-diagnostic cues, (2) things that do not fit and (3) red flags (Reyes Nieva et al., 2017). We therefore instructed our student interns and internal medicine residents to pay particular attention to these three diagnostic pitfalls when reviewing new patients and clinical problems (Lim & Teoh, 2018). They were required to append the following sub-headings to their clerking impression in the patient’s electronic health record (eHR): (a) Counter diagnostic features; (b) Things that do not fit; (c) Red flags. This template was added after the resident had entered his or her numbered list of diagnoses or issues. “Counter diagnostic features” was defined as symptoms, signs or investigations which were inconsistent with the proposed primary diagnosis. “Things that do not fit” was defined as any finding that could not reasonably be accounted for by the main and differential diagnoses.
“Red flags” were defined as findings which raised the possibility of a more serious underlying illness requiring early diagnosis or intervention. During bedside rounds, the attending physicians were required to give feedback on these points and to amend the eHR as appropriate. This exercise may give us an opportunity to see whether diagnostic accuracy can be improved by using pivotal words-to-self in the appropriate setting to maintain cognitive openness and flexibility, and thus avoid premature closure (Krupat et al., 2017). It is also a valuable critical, metacognitive thinking habit to inculcate in tyro diagnosticians (Carpenter et al., 2019).
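The clerking template described above can be sketched as a small plain-text helper that appends the three sub-headings after the list of diagnoses and flags any that are missing. This is a hypothetical Python illustration only: the function names, the plain-text note format and the placeholder diagnoses are assumptions, not the eHR system used in the study.

```python
# Hypothetical helpers: names and plain-text layout are illustrative assumptions,
# not the eHR system described in the text.
PITFALL_HEADINGS = (
    "Counter diagnostic features",
    "Things that do not fit",
    "Red flags",
)


def append_pitfall_template(impression: str) -> str:
    """Append the three pitfall sub-headings, labelled (a)-(c), after a clerking impression."""
    lines = [impression.rstrip(), ""]
    for letter, heading in zip("abc", PITFALL_HEADINGS):
        lines.append(f"({letter}) {heading}:")
    return "\n".join(lines)


def missing_pitfall_headings(note: str) -> list:
    """Return the sub-headings absent from a note, e.g. to prompt feedback on rounds."""
    return [heading for heading in PITFALL_HEADINGS if heading not in note]


impression = "Impression:\n1. <leading diagnosis>\n2. <differential diagnosis>"
note = append_pitfall_template(impression)
print(note)
print(missing_pitfall_headings(note))  # []
```

Keeping the headings in one tuple means the template and the completeness check cannot drift apart if the wording of a heading is changed.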

IV. CONCLUSION

The theory of predictive brains has emerged as a major narrative in the understanding of how our mind works. It may account for the limitations of interventions designed to improve diagnostic problem solving which are based on the dual process theory of cognition. Exploiting predictive brains by employing language to optimise metacognition may be a way forward.

Note on Contributor

Lim designed the paper, reviewed the literature, drafted and revised it.

Ethical Approval

There is no ethical approval associated with this paper.

Funding

No funding sources are associated with this paper.

Declaration of Interest

No conflicts of interest are associated with this paper.

References

Bar, M. (Ed.). (2011). Predictions in the brain: Using our past to generate a future. Oxford University Press.

Barrett, L. F. (2017a). How emotions are made: the secret life of the brain. Houghton Mifflin Harcourt.

Barrett, L. F. (2017b). The theory of constructed emotion: An active inference account of interoception and categorization. Social Cognitive and Affective Neuroscience, 12(1), 1-23. https://doi.org/10.1093/scan/nsw154

Betz, N., Hoemann, K., & Barrett, L. F. (2019). Words are a context for mental inference. Emotion, 19(8), 1463-1477. https://doi.org/10.1037/emo0000510

Bhise, V., Rajan, S. S., Sittig, D. F., Morgan, R. O., Chaudhary, P., & Singh, H. (2017). Defining and measuring diagnostic uncertainty in medicine: A systematic review. Journal of General Internal Medicine, 33, 103–115. https://doi.org/10.1007/s11606-017-4164-1

Blissett, S., & Sibbald, M. (2017). Closing in on premature closure bias. Medical Education, 51(11), 1095-1096. https://doi.org/10.1111/medu.13452

Bunzeck, N., & Thiel, C. (2016). Neurochemical modulation of repetition suppression and novelty signals in the human brain. Cortex, 80, 161-173. https://doi.org/10.1016/j.cortex.2015.10.013

Carpenter, J., Sherman, M. T., Kievit, R. A., Seth, A. K., Lau, H., & Fleming, S. M. (2019). Domain-general enhancements of metacognitive ability through adaptive training. Journal of Experimental Psychology. General, 148(1), 51-64. https://doi.org/10.1037/xge0000505

Chater, N., & Oaksford, M. (2008). The probabilistic mind: Prospects for Bayesian cognitive science. Oxford University Press.

Clark, A. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. The Behavioral and Brain Sciences, 36(3), 181–204. https://doi.org/10.1017/S0140525X12000477

Clark, A. (2016). Surfing uncertainty: Prediction, action, and the embodied mind. Oxford University Press.

Croskerry, P. (2009). Clinical cognition and diagnostic error: Applications of a dual process model of reasoning. Advances in Health Sciences Education : Theory and Practice, 14 Suppl 1, 27–35. https://doi.org/10.1007/s10459-009-9182-2

Croskerry, P. (2013). From mindless to mindful practice–cognitive bias and clinical decision making. The New England Journal of Medicine, 368(26), 2445–2448. https://doi.org/10.1056/NEJMp1303712

Custers, E. J. (2014). Thirty years of illness scripts: Theoretical origins and practical applications. Medical Teacher, 1-6. https://doi.org/10.3109/0142159X.2014.956052

Djulbegovic, B., Hozo, I., Beckstead, J., Tsalatsanis, A., & Pauker, S. G. (2012). Dual processing model of medical decision-making. BMC Medical Informatics and Decision Making, 12, 94. https://doi.org/10.1186/1472-6947-12-94

Djulbegovic, M., Beckstead, J., Elqayam, S., Reljic, T., Kumar, A., Paidas, C., & Djulbegovic, B. (2015). Thinking styles and regret in physicians. Public Library of Science One, 10(8), e0134038. https://doi.org/10.1371/journal.pone.0134038

Donner-Banzhoff, N., & Hertwig, R. (2014). Inductive foraging: Improving the diagnostic yield of primary care consultations. European Journal of General Practice, 20(1), 69–73. https://doi.org/10.3109/13814788.2013.805197

Donner-Banzhoff, N., Seidel, J., Sikeler, A. M., Bosner, S., Vogelmeier, M., Westram, A., & Gigerenzer, G. (2017). The phenomenology of the diagnostic process: A primary care-based survey. Medical Decision Making, 37(1), 27-34. https://doi.org/10.1177/0272989X16653401

Durning, S. J., Artino, A. R., Jr., Pangaro, L. N., van der Vleuten, C., & Schuwirth, L. (2010). Perspective: redefining context in the clinical encounter: Implications for research and training in medical education. Academic Medicine: Journal of the Association of American Medical Colleges, 85(5), 894–901. https://doi.org/10.1097/ACM.0b013e3181d7427c

Evans, J. S. (2008). Dual-processing accounts of reasoning, judgment, and social cognition. Annual Review of Psychology, 59, 255–278. https://doi.org/10.1146/annurev.psych.59.103006.093629

Feldman, H., & Friston, K. J. (2010). Attention, uncertainty, and free-energy. Frontiers in Human Neuroscience, 4, 215. https://doi.org/10.3389/fnhum.2010.00215

Fleming, S. M., & Frith, C. D. (2014). The cognitive neuroscience of metacognition. Springer.

Friston, K. (2010). The free-energy principle: A unified brain theory? Nature Reviews. Neuroscience, 11(2), 127–138. https://doi.org/10.1038/nrn2787

Friston, K., FitzGerald, T., Rigoli, F., Schwartenbeck, P., & Pezzulo, G. (2017). Active inference: A process theory. Neural Computation, 29(1), 1–49. https://doi.org/10.1162/NECO_a_00912

Friston, K., & Kiebel, S. (2009). Predictive coding under the free-energy principle. Philosophical Transactions of the Royal Society of London. Series B, Biological sciences, 364(1521), 1211–1221. https://doi.org/10.1098/rstb.2008.0300

Gilbert, D. T., & Wilson, T. D. (2007). Prospection: Experiencing the future. Science, 317(5843), 1351-1354. https://doi.org/10.1126/science.1144161

Gupta, A., Snyder, A., Kachalia, A., Flanders, S., Saint, S., & Chopra, V. (2017). Malpractice claims related to diagnostic errors in the hospital. BMJ Quality and Safety, 27(1), 53-60. https://doi.org/10.1136/bmjqs-2017-006774

Hohwy, J. (2013). The predictive mind. Oxford University Press.

Kahneman, D. (2013). Thinking, fast and slow (1st pbk. ed.). Farrar, Straus and Giroux.

Kahneman, D., Rosenfield, A. M., Gandhi, L., & Blaser, T. (2016). Noise: How to overcome the high, hidden cost of inconsistent decision making. Harvard Business Review, 94(10), 38-46.

Kersten, D., Mamassian, P., & Yuille, A. (2004). Object perception as Bayesian inference. Annual Review of Psychology, 55, 271–304. https://doi.org/10.1146/annurev.psych.55.090902.142005

Kleckner, I. R., Zhang, J., Touroutoglou, A., Chanes, L., Xia, C., Simmons, W. K., & Feldman Barrett, L. (2017). Evidence for a large-scale brain system supporting allostasis and interoception in humans. Nature Human Behaviour, 1, 0069. https://doi.org/10.1038/s41562-017-0069

Kolossa, A., Kopp, B., & Fingscheidt, T. (2015). A computational analysis of the neural bases of Bayesian inference. Neuroimage, 106, 222-237. https://doi.org/10.1016/j.neuroimage.2014.11.007

Krupat, E., Wormwood, J., Schwartzstein, R. M., & Richards, J. B. (2017). Avoiding premature closure and reaching diagnostic accuracy: Some key predictive factors. Medical Education, 51(11), 1127-1137. https://doi.org/10.1111/medu.13382

Kwisthout, J., Bekkering, H., & van Rooij, I. (2017a). To be precise, the details don’t matter: On predictive processing, precision, and level of detail of predictions. Brain and Cognition, 112, 84–91. https://doi.org/10.1016/j.bandc.2016.02.008

Kwisthout, J., Phillips, W. A., Seth, A. K., van Rooij, I., & Clark, A. (2017b). Editorial to the special issue on perspectives on human probabilistic inference and the ‘Bayesian brain’. Brain and Cognition, 112, 1-2. https://doi.org/10.1016/j.bandc.2016.12.002

Lim, T. K., & Teoh, C. M. (2018). Exploiting predictive brains for better diagnosis. Diagnosis (Berl), 5(3), eA40. Retrieved from https://www.degruyter.com/view/journals/dx/5/3/article-peA1.xml

Lupyan, G. (2017). Changing what you see by changing what you know: The role of attention. Frontiers in Psychology, 8, 553. https://doi.org/10.3389/fpsyg.2017.00553

Melo, M., Gusso, G. D. F., Levites, M., Amaro, E., Jr., Massad, E., Lotufo, P. A., & Friston, K. J. (2017). How doctors diagnose diseases and prescribe treatments: An fMRI study of diagnostic salience. Scientific Reports, 7(1), 1304. http://observatorio.fm.usp.br/handle/OPI/19951

Monteiro, S., Sherbino, J., Sibbald, M., & Norman, G. (2020). Critical thinking, biases and dual processing: The enduring myth of generalisable skills. Medical Education, 54(1), 66-73. https://doi.org/10.1111/medu.13872

Montgomery, K. (2006). How doctors think: Clinical judgement and the practice of medicine. Oxford University Press.

Nasser, H. M., Calu, D. J., Schoenbaum, G., & Sharpe, M. J. (2017). The dopamine prediction error: Contributions to associative models of reward learning. Frontiers in Psychology, 8, 244. https://doi.org/10.3389/fpsyg.2017.00244

Norman, G., Sherbino, J., Dore, K., Wood, T., Young, M., Gaissmaier, W., & Monteiro, S. (2014). The etiology of diagnostic errors: A controlled trial of system 1 versus system 2 reasoning. Academic Medicine: Journal of the Association of American Medical Colleges, 89(2), 277–284. https://doi.org/10.1097/ACM.0000000000000105

Norman, G. R., Monteiro, S. D., Sherbino, J., Ilgen, J. S., Schmidt, H. G., & Mamede, S. (2017). The Causes of Errors in Clinical Reasoning: Cognitive Biases, Knowledge Deficits, and Dual Process Thinking. Academic Medicine: Journal of the Association of American Medical Colleges, 92(1), 23–30. https://doi.org/10.1097/ACM.0000000000001421

O’Sullivan, E. D., & Schofield, S. J. (2019). A cognitive forcing tool to mitigate cognitive bias – A randomised control trial. BMC Medical Education, 19(1), 12. https://doi.org/10.1186/s12909-018-1444-3

Picard, F., & Friston, K. (2014). Predictions, perception, and a sense of self. Neurology, 83(12), 1112-1118. https://doi.org/10.1212/WNL.0000000000000798

Reilly, J. B., Ogdie, A. R., Von Feldt, J. M., & Myers, J. S. (2013). Teaching about how doctors think: A longitudinal curriculum in cognitive bias and diagnostic error for residents. BMJ Quality & Safety, 22(12), 1044–1050. https://doi.org/10.1136/bmjqs-2013-001987

Rencic, J., Trowbridge, R. L., Jr., Fagan, M., Szauter, K., & Durning, S. (2017). Clinical reasoning education at us medical schools: Results from a national survey of internal medicine clerkship directors. Journal of General Internal Medicine, 32(11), 1242–1246. https://doi.org/10.1007/s11606-017-4159-y

Restrepo, D., Armstrong, K. A., & Metlay, J. P. (2020). Annals Clinical Decision Making: Avoiding Cognitive Errors in Clinical Decision Making. Annals of Internal Medicine, 172(11), 747–751. https://doi.org/10.7326/M19-3692

Reyes Nieva, H., V. M., Wright, A., Singh, H., Ruan, E., & Schiff, G. (2017). Diagnostic pitfalls: A new approach to understand and prevent diagnostic error. In Diagnosis (Vol. 4, pp. eA1). https://www.degruyter.com/view/journals/dx/5/4/article-peA59.xml

Sandved-Smith, L., Hesp, C., Lutz, A., Mattout, J., Friston, K., & Ramstead, M. (2020, June 10). Towards a formal neurophenomenology of metacognition: Modelling meta-awareness, mental action, and attentional control with deep active inference. https://doi.org/10.31234/osf.io/5jh3c

Schuwirth, L. (2017). When I say … dual-processing theory. Medical Education, 51(9), 888–889. https://doi.org/10.1111/medu.13249

Seligman, M. E. P. (2016). Homo prospectus. Oxford University Press.

Seth, A. K., Suzuki, K., & Critchley, H. D. (2011). An interoceptive predictive coding model of conscious presence. Frontiers in Psychology, 2, 395. https://doi.org/10.3389/fpsyg.2011.00395

Shannon, C. E., Sloane, N. J. A., Wyner, A. D., & IEEE Information Theory Society. (1993). Claude Elwood Shannon: Collected papers. IEEE Press.

Sherbino, J., Kulasegaram, K., Howey, E., & Norman, G. (2014). Ineffectiveness of cognitive forcing strategies to reduce biases in diagnostic reasoning: A controlled trial. Canadian Journal of Emergency Medicine, 16(1), 34–40. https://doi.org/10.2310/8000.2013.130860

Shipp, S. (2016). Neural elements for predictive coding. Frontiers in Psychology, 7, 1792. https://doi.org/10.3389/fpsyg.2016.01792

Sibbald, M., Sherbino, J., Ilgen, J. S., Zwaan, L., Blissett, S., Monteiro, S., & Norman, G. (2019). Debiasing versus knowledge retrieval checklists to reduce diagnostic error in ECG interpretation. Advances in Health Sciences Education: Theory and Practice, 24(3), 427–440. https://doi.org/10.1007/s10459-019-09875-8

Simpkin, A. L., & Schwartzstein, R. M. (2016). Tolerating uncertainty – The next medical revolution? The New England Journal of Medicine, 375(18), 1713–1715. https://doi.org/10.1056/NEJMp1606402

Simpkin, A. L., Vyas, J. M., & Armstrong, K. A. (2017). Diagnostic reasoning: An endangered competency in internal medicine training. Annals of Internal Medicine, 167(7), 507–508. https://doi.org/10.7326/M17-0163

Singh, H., & Graber, M. L. (2015). Improving diagnosis in health care – The next imperative for patient safety. The New England Journal of Medicine, 373(26), 2493–2495. https://doi.org/10.1056/NEJMp1512241

Singh, H., Meyer, A. N., & Thomas, E. J. (2014). The frequency of diagnostic errors in outpatient care: Estimations from three large observational studies involving US adult populations. BMJ Quality & Safety, 23(9), 727–731. https://doi.org/10.1136/bmjqs-2013-002627

Skinner, T. R., Scott, I. A., & Martin, J. H. (2016). Diagnostic errors in older patients: A systematic review of incidence and potential causes in seven prevalent diseases. International Journal of General Medicine, 9, 137–146. https://doi.org/10.2147/IJGM.S96741

Spratling, M. W. (2017a). A hierarchical predictive coding model of object recognition in natural images. Cognitive Computation, 9(2), 151–167. https://doi.org/10.1007/s12559-016-9445-1

Spratling, M. W. (2017b). A review of predictive coding algorithms. Brain and Cognition, 112, 92–97. https://doi.org/10.1016/j.bandc.2015.11.003

Steinberg, E. E., Keiflin, R., Boivin, J. R., Witten, I. B., Deisseroth, K., & Janak, P. H. (2013). A causal link between prediction errors, dopamine neurons and learning. Nature Neuroscience, 16(7), 966–973. https://doi.org/10.1038/nn.3413

Sterling, P. (2012). Allostasis: A model of predictive regulation. Physiology & Behavior, 106(1), 5–15. https://doi.org/10.1016/j.physbeh.2011.06.004

Stolper, C. F., & van de Wiel, M. W. (2014). EBM and gut feelings. Medical Teacher, 36(1), 87–88. https://doi.org/10.3109/0142159X.2013.835390

Stolper, C. F., Van de Wiel, M. W., Hendriks, R. H., Van Royen, P., Van Bokhoven, M. A., Van der Weijden, T., & Dinant, G. J. (2014). How do gut feelings feature in tutorial dialogues on diagnostic reasoning in GP traineeship? Advances in Health Sciences Education: Theory and Practice, 20(2), 499–513. https://doi.org/10.1007/s10459-014-9543-3

Teufel, C., & Fletcher, P. C. (2020). Forms of prediction in the nervous system. Nature Reviews Neuroscience, 21(4), 231–242. https://doi.org/10.1038/s41583-020-0275-5

Ting, C. C., Yu, C. C., Maloney, L. T., & Wu, S. W. (2015). Neural mechanisms for integrating prior knowledge and likelihood in value-based probabilistic inference. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience, 35(4), 1792–1805. https://doi.org/10.1523/JNEUROSCI.3161-14.2015

Tschantz, A., Seth, A. K., & Buckley, C. L. (2020). Learning action-oriented models through active inference. PLoS Computational Biology, 16(4), e1007805. https://doi.org/10.1371/journal.pcbi.1007805

Van Merrienboer, J. J., & Sweller, J. (2010). Cognitive load theory in health professional education: Design principles and strategies. Medical Education, 44(1), 85–93. https://doi.org/10.1111/j.1365-2923.2009.03498.x

Walsh, J. N., Knight, M., & Lee, A. J. (2017). Diagnostic errors: Impact of an educational intervention on pediatric primary care. Journal of Pediatric Health Care: Official Publication of National Association of Pediatric Nurse Associates & Practitioners, 32(1), 53–62. https://doi.org/10.1016/j.pedhc.2017.07.004

Walsh, K. S., McGovern, D. P., Clark, A., & O’Connell, R. G. (2020). Evaluating the neurophysiological evidence for predictive processing as a model of perception. Annals of the New York Academy of Sciences, 1464(1), 242–268. https://doi.org/10.1111/nyas.14321

Young, J. Q., Van Merrienboer, J., Durning, S., & Ten Cate, O. (2014). Cognitive load theory: Implications for medical education: AMEE Guide No. 86. Medical Teacher, 36(5), 371–384. https://doi.org/10.3109/0142159X.2014.889290

Zwaan, L., Monteiro, S., Sherbino, J., Ilgen, J., Howey, B., & Norman, G. (2016). Is bias in the eye of the beholder? A vignette study to assess recognition of cognitive biases in clinical case workups. BMJ Quality & Safety, 26(2), 104–110. https://doi.org/10.1136/bmjqs-2015-005014

Zwaan, L., Schiff, G. D., & Singh, H. (2013). Advancing the research agenda for diagnostic error reduction. BMJ Quality & Safety, 22(Suppl 2), ii52–ii57. https://doi.org/10.1136/bmjqs-2012-001624

*Lim Tow Keang

Department of Medicine

National University Hospital

5 Lower Kent Ridge Rd

Singapore 119074

Email: mdclimtk@nus.edu.sg
