Clickers: Enhancing the residency selection process

Published online: 2 January, TAPS 2019, 4(1), 51-54

DOI: https://doi.org/10.29060/TAPS.2019-4-1/SC1052

Jill Cheng Sim Lee1, Muhammad Fairuz Abdul Rahman1, Weng Yan Ho1, Mor Jack Ng2, Kok Hian Tan1,2 & Bernard Su Min Chern1,2

1Division of Obstetrics and Gynaecology, KK Women’s and Children’s Hospital, Singapore; 2SingHealth Duke-NUS Obstetrics and Gynaecology (OBGYN) Academic Clinical Program, SingHealth Duke-NUS Academic Medical Centre, Singapore

Abstract

Background: Residency selection panels commonly select successful candidates through time-consuming manual voting processes that are easily subject to bias and the influence of others. We explored the use of an electronic audience response system (ARS), or ‘clickers’, in obstetrics and gynaecology resident selection, studying the voting process and interviewer feedback on confidentiality and efficiency.

Methods: All 10 interviewers were provided with clickers to vote for each of the 25 candidates at the end of the residency selection interview. Votes were cast using a 5-point Likert scale. The number of clickers provided to each interviewer was weighted according to the rank of the interviewer. Voting scores and time for each candidate were recorded by the ARS, and interviewers completed a questionnaire evaluating their experience of using clickers for resident selection.

Results: The 10 successful candidates scored a mean of 4.28 (SD 0.27, range 3.86–4.73), compared to 2.99 (SD 0.71, range 1.50–3.79) for the 15 unsuccessful candidates (p<0.001). Average voting time was 26 seconds per candidate. Total voting time for all candidates was 650 seconds. All interviewers favoured the use of clickers for their confidentiality, instantaneous results, and more discerning graduated response.

Conclusion: Clickers provide a rapid and anonymous method of collating interviewer decisions following a rigorous selection process. They were well received by interviewers and are highly recommended for use by other residencies in their selection processes.

Keywords: Resident Selection, Clickers, Electronic Audience Response System

PRACTICE HIGHLIGHTS

- Clickers provide a time-efficient way of collating resident selection interview outcomes following a rigorous structured selection process.

- Individual interviewers should be able to select successful candidates anonymously to reduce the risk of bias from external influences, as the use of multiple observers rather than single interviewers improves the reliability of the resident selection interview.

- Numerical ratings using clickers provide objective and transparent data easily available should an inquiry arise about the resident selection process.

- Clickers are increasingly becoming standard educational tools within the medical classroom. Faculty and resident familiarity with audience response systems allows development of creative extensions of clickers beyond the classroom context.

I. INTRODUCTION

There is increasing evidence that radiofrequency electronic Audience Response Systems (ARS), or “clickers”, are useful in undergraduate and postgraduate medical education (Caldwell, 2007). An ARS instantly collects real-time data through hand-held keypads and graphs participant responses. Each clicker unit has a unique signal, allowing answers from each assessor to be identified and recorded.

Clickers bridge the communication gap between speaker and audience, and are used to assess understanding, engage attention, enhance learner enjoyment and interaction, improve knowledge retention and encourage clinical reasoning and problem solving. The positive uptake, feedback and experience of clickers within the medical education community have led to their introduction in innovative new areas, providing solutions to many problems within medical education. Outside medicine, clickers have been used for research data collection, enhanced social norms marketing campaigns and surveying vulnerable, low-literacy groups.

The SingHealth Obstetrics and Gynaecology (OBGYN) Residency Program recently explored clickers as a way to improve efficiency and efficacy in the residency selection process. Traditional selection interviews typically concluded with a voting process where members of the selection panel raised hands to decide on the best candidates. This was time-consuming and individuals’ voting decisions could be swayed by openly visible votes of other panel members. Furthermore, hand-raising only allowed binary responses. This paper describes the OBGYN resident selection process using clickers and studies interviewer feedback on its confidentiality and efficiency. To our knowledge, no literature exists on the use of ARS during recruitment interviews.

II. METHODS

The SingHealth OBGYN residency selection process was conducted over two interview sessions assessing 25 candidates for selection for the academic year of 2015. Prior to this, candidates were shortlisted from an annual national specialty training application process open to final year medical students, house officers and medical officers. Following review of applications, portfolios, medical school grades and letters of support, candidates participated in a national level multiple mini interview prior to undergoing selection at individual sponsoring institutions.

The SingHealth selection panel comprised ten interviewers: the Program Director (PD), two Associate Program Directors (APDs), the Academic Chair, four core faculty and two chief residents. The selection format comprised a round-robin three-station interview: a large panel interview with the PD, the Academic Chair, an APD, a core faculty member and a chief resident; a small panel interview with an APD and one core faculty member; and a less formal ‘bull pen’ interview with two core faculty and a chief resident. Candidates were interviewed alone in the large panel, where they were asked about self-appraisal and reactions to residency and the healthcare industry. In the small panel, they were interviewed about goals, ambitions and work experience. Candidates awaiting panel interviews were interviewed as a group in the ‘bull pen’ about personal information and life questions. Interviewers convened at the end to discuss candidate performances and review the multisource feedback obtained from within SingHealth OBGYN. Interviewers then scored each candidate using clickers.

All interviewers were given clickers to vote for each candidate. The ARS in this study was the Classroom Performance Systems Pulse, utilising the INTERWRITERESPONSE® 6.0 software. The number of clickers given to each interviewer was weighted, with the PD receiving three and APDs and Academic Chair receiving two each. Other interviewers each received one. This weightage policy was decided by the program in recognition of leadership and experience. Program administrators allocated numbered clickers to each interviewer. Interviewers were blinded regarding which clickers were allocated to other interviewers.
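The weighting scheme amounts to a weighted mean in which each interviewer's vote counts once per clicker held. The following is a minimal Python sketch of that idea, not the ARS software's own calculation. It assumes that an interviewer enters the same score on every clicker they hold, which the text does not state; the role labels, the function name `weighted_mean_score` and the example votes are hypothetical.

```python
# Clickers per interviewer, as described in the text: PD 3, each APD 2,
# Academic Chair 2, all other interviewers 1. Role labels are hypothetical.
CLICKERS = {
    "PD": 3,
    "APD_1": 2, "APD_2": 2,
    "Academic_Chair": 2,
    "Faculty_1": 1, "Faculty_2": 1, "Faculty_3": 1, "Faculty_4": 1,
    "Chief_Resident_1": 1, "Chief_Resident_2": 1,
}


def weighted_mean_score(votes, clickers=CLICKERS):
    """Mean Likert score across all clickers for one candidate.

    Assumption (not stated in the source): an interviewer enters the same
    1-5 score on every clicker they hold.
    """
    if set(votes) != set(clickers):
        raise ValueError("every interviewer must vote; abstention is not allowed")
    for score in votes.values():
        if score not in (1, 2, 3, 4, 5):
            raise ValueError("votes must be on the 5-point Likert scale")
    total = sum(votes[name] * clickers[name] for name in clickers)
    return total / sum(clickers.values())


# Illustrative votes only; these are not data from the study.
example_votes = {name: 4 for name in CLICKERS}
example_votes["PD"] = 5

print(sum(CLICKERS.values()))                        # 15 clickers in total
print(round(weighted_mean_score(example_votes), 2))  # 4.2
```

With equal weighting, the same function applies unchanged if every entry in the allocation is set to 1.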

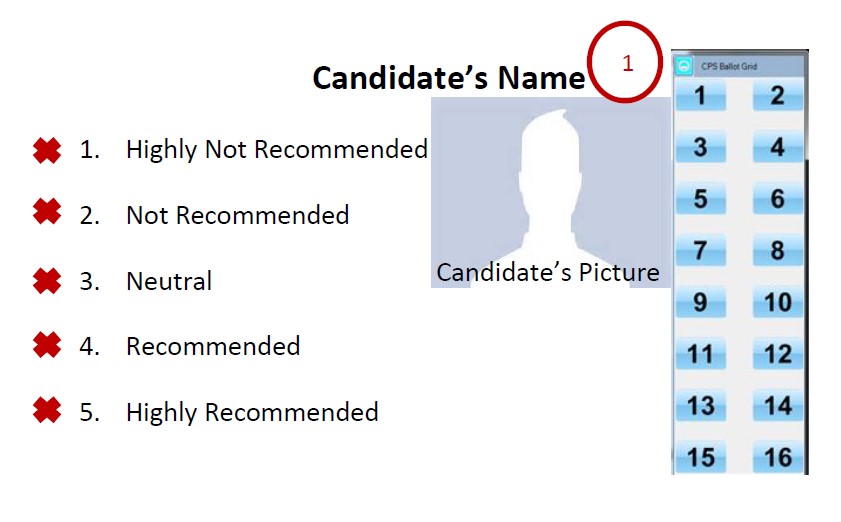

Candidates’ names and photographs were shown on screen (Figure 1) and interviewers were asked to vote using a 5-point Likert scale: 1, “Highly Not Recommended”; 2, “Not Recommended”; 3, “Neutral”; 4, “Recommended”; and 5, “Highly Recommended”. The ARS instantly processed and displayed results to interviewers, enabling immediate visualisation of scores and ranking of candidates. The ARS also recorded the time taken for interviewers to vote for each candidate. No limit was imposed on the time interviewers took to decide on each candidate. The voting time for each candidate was measured from the moment ARS voting was activated for that candidate until all clicker responses were recorded. Interviewers were not allowed to abstain from voting. The 10 candidates with the highest mean scores were admitted into the SingHealth OBGYN Residency Program.
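The final selection step, ranking candidates by mean score and admitting the top 10, can be sketched as follows. This is an illustration only: the function name `select_top`, the candidate identifiers and the scores are hypothetical, and the handling of tied scores at the cut-off is an assumption because the text does not describe it.

```python
def select_top(mean_scores, places=10):
    """Return candidate IDs ranked by mean score, highest first, cut at `places`.

    Ties at the cut-off are not handled specially here; the source does not
    say how ties were resolved.
    """
    ranked = sorted(mean_scores, key=mean_scores.get, reverse=True)
    return ranked[:places]


# Hypothetical mean scores for five candidates; not data from the study.
scores = {"C01": 4.73, "C02": 3.79, "C03": 1.50, "C04": 4.10, "C05": 3.86}

print(select_top(scores, places=2))  # ['C01', 'C04']
```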

All interviewers were asked the following questions via email a week later:

- Did you like the anonymity of the response system?

- Did you like the graduated response allowed by the clickers instead of binary “Yes” or “No” responses?

- Did you like the instantaneous results generated by the clicker software?

Microsoft Excel 2010 was used to analyse differences in scores between successful and unsuccessful candidates using a t-test. Data on time and questionnaire responses were analysed using descriptive statistics.

This study was undertaken as part of a larger study to understand residency selection interviews in OBGYN. SingHealth Centralised Institutional Review Board exempted this study from further review.

Figure 1: Example of voting display screen

III. RESULTS

Ten interviewers participated in the selection process, using a total of 15 clickers in accordance with the weightage described above.

The mean score for all 25 candidates was 3.51 (SD 0.86, range 1.50–4.73), with a combined score of 87.75. The mean score for the 10 successful candidates was 4.28 (SD 0.27, range 3.86–4.73), compared to 2.99 (SD 0.71, range 1.50–3.79) for the 15 unsuccessful candidates (p<0.001). The total time taken to vote for 25 candidates was 650 seconds, with a mean of 26 seconds per candidate. All interviewers recorded decisions within two minutes.
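The reported figures are internally consistent, as the short arithmetic check below shows. It uses only the rounded values given in the text, so small discrepancies are expected; for example, the combined score of 87.75 matches 25 × 3.51, whereas the rounded subgroup means give 87.65.

```python
# Rounded values reported in the text.
n_success, mean_success = 10, 4.28
n_fail, mean_fail = 15, 2.99
total_seconds, n_candidates = 650, 25

# Overall mean as the size-weighted average of the two subgroup means.
overall = (n_success * mean_success + n_fail * mean_fail) / (n_success + n_fail)
print(round(overall, 2))             # 3.51

# Mean voting time per candidate, and total time as (minutes, seconds).
print(total_seconds / n_candidates)  # 26.0
print(divmod(total_seconds, 60))     # (10, 50)
```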

All ten interviewers (100%) returned the questionnaire and answered “Yes” to all three questions, favouring the confidentiality, instantaneous results, and graduated response provided by the ARS.

IV. DISCUSSION

Clickers are increasingly used in medical education and are familiar to most college faculty members (Lewin, Vinson, Stetzer, & Smith, 2016). The ARS in this study is a shared system used by all SingHealth OBGYN faculty and residents. The interviewers’ familiarity with clickers made them a rapid and accurate way for administrators to process votes following the interview. The total time taken for all interviewers to record votes for all 25 candidates was under 11 minutes. In a national survey of internal medicine program directors, the average cost of recruiting for one postgraduate year 1 position was US$9899, of which 96% was attributed to effort, and therefore time, contributed by faculty, chief residents, and administrative staff (Brummond et al., 2013). Time-saving strategies are crucial to reduce the growing costs of resident recruitment. We found no studies of time efficiency following the implementation of clickers, apart from one that reported no difference in time spent lecturing between clicker and non-clicker classes in the university-level science, technology, engineering and mathematics setting (Lewin et al., 2016). However, our use of clickers did not involve lecturing, and further studies are needed to compare the time taken by traditional and clicker voting methods in resident selection.

The interviewers liked the anonymity provided by clickers. The value of the anonymity that clickers offer to vulnerable groups has also been reported in the non-medical literature (Keifer, Reyes, Liebman, & Juarez-Carrillo, 2014). In the selection interview context, anonymity reduces the uncontrolled swaying of votes by dominant individuals, which can severely bias voting results. We believe clickers allow programs to control the influence of each assessor through predetermined, agreed weighting of votes, achieved here by allocating more clickers to residency leaders in recognition of their greater experience. In other situations, equal weightage for each voter may be more appropriate, and the allocation can be adjusted to suit needs. Immediate tabulation of results further adds transparency. Should an inquiry arise about the resident selection process, the decisions recorded by each interviewer can easily be reviewed through the program administrator.

Interviewers liked the instant response provided by clickers. This mirrors feedback from other ARS users, such as a study of graduate students which reported that the primary benefit of clickers was immediate feedback (Benson, Szucs, & Taylor, 2016).

This study has limitations. This single-cohort study utilised a single ARS, which may not reflect the practices of other programs or ARS platforms. However, many currently available ARS platforms share common functions. We did not compare our results with the time taken to record interviewers’ decisions in interview sessions that did not utilise clickers, nor did we evaluate reasons for decision-making times longer than the mean. Further study of these factors may identify individual and system-based problems such as variation in interviewers’ familiarity with clickers and difficulty coping with multiple keypads.

V. CONCLUSION

In summary, interviewer feedback suggests that clickers enhance residency selection by providing a rapid and anonymous method of collating interviewer decisions following a rigorous selection process. The introduction of clickers to the selection process was well received by all interviewers in this study and is highly recommended for similar use by other residencies.

This simple, novel extension to the use of clickers beyond the classroom illustrates how we can extend the use of facilities already available within our medical institutions to improve existing systems. Such attitudes need to continue to be encouraged within healthcare services.

Research is currently in progress to study the multi-station interview process and its correlation with success during residency.

Notes on Contributors

Dr. Jill Cheng Sim Lee is a senior resident in OBGYN at SingHealth and former Chief Resident for Education within her department. She has a Master of Science in Clinical Education and is involved in undergraduate medical education at Lee Kong Chian School of Medicine, Nanyang Technological University.

Dr. Muhammad Fairuz Abdul Rahman is a 4th year resident in OBGYN at SingHealth.

Dr. Weng Yan Ho is a senior resident in OBGYN at SingHealth and former Chief Resident for Administration within her department.

Mor Jack Ng is the Manager of the SingHealth-Duke-National University of Singapore (NUS) OBGYN Academic Clinical Program (ACP).

Prof. Kok Hian Tan is Senior Associate Dean of Academic Medicine at Duke-NUS, Group Director of Academic Medicine at SingHealth and Head of Perinatal Audit and Epidemiology at KK Women’s and Children’s Hospital (KKH). He is also editor-in-chief of the Singapore Journal of Obstetrics and Gynaecology.

A/Prof. Bernard Su Min Chern is Chairman of Division of OBGYN and Head and Senior Consultant of both the Department of OBGYN and Minimally Invasive Surgery Unit at KKH. He is also Chairman of the SingHealth-Duke-NUS OBGYN ACP and was formerly Program Director of the SingHealth OBGYN Residency Program.

Ethical Approval

This study was undertaken as part of a larger study to understand residency selection interviews in OBGYN. SingHealth Centralised Institutional Review Board approved this study with an exempt status.

Acknowledgements

The authors would like to acknowledge the support and cooperation provided by the faculty and staff at the SingHealth OBGYN Residency Program during the period of this study.

Funding

Funding for this study was borne internally by SingHealth OBGYN Residency Program. No external funding sources were required.

Declaration of Interest

All authors have no potential conflicts of interest.

References

Benson, J. D., Szucs, K. A., & Taylor, M. (2016). Student response systems and learning: Perceptions of the student. Occupational Therapy In Health Care, 30(4), 406–414. https://doi.org/10.1080/07380577.2016.1222644

Brummond, A., Sefcik, S., Halvorsen, A. J., Chaudhry, S., Arora, V., Adams, M., … Reed, D. A. (2013). Resident recruitment costs: A national survey of internal medicine program directors. The American Journal of Medicine, 126(7), 646–653. https://doi.org/10.1016/j.amjmed.2013.03.018

Caldwell, J. E. (2007). Clickers in the large classroom: Current research and best-practice tips. CBE-Life Sciences Education, 6(1), 9–20.

Keifer, M. C., Reyes, I., Liebman, A. K., & Juarez-Carrillo, P. (2014). The use of audience response system technology with limited-English-proficiency, low-literacy, and vulnerable populations. Journal of Agromedicine, 19(1), 44–52.

Lewin, J. D., Vinson, E. L., Stetzer, M. R., & Smith, M. K. (2016). A campus-wide investigation of clicker implementation: The status of peer discussion in STEM classes. CBE-Life Sciences Education, 15(1), ar6,1-ar6,12. https://doi.org/10.1187/cbe.15-10-0224

*Dr Jill C. S. Lee

Email: jill.lee.c.s.@singhealth.com.sg

Division of Obstetrics and Gynaecology,

KK Women’s and Children’s Hospital,

100 Bukit Timah Road, Singapore 229899

Tel: +65 6225 5554
