Predictors of clinical reasoning in neurological localisation: A study in internal medicine residents

Submitted: 7 July 2019

Accepted: 30 January 2020

Published online: 1 September, TAPS 2020, 5(3), 54-61

https://doi.org/10.29060/TAPS.2020-5-3/OA2170

Kieng Wee Loh¹, Jerome Ingmar Rotgans², Kevin Tan³, Nigel Choon Kiat Tan³

¹National Healthcare Group, Ministry of Health Holdings, Singapore; ²Office of Medical Education, Lee Kong Chian School of Medicine, Nanyang Technological University, Singapore; ³Office of Neurological Education, Department of Neurology, National Neuroscience Institute, Singapore

Abstract

Introduction: Clinical reasoning is the cognitive process of weighing clinical information together with past experience to evaluate diagnostic and management dilemmas. There is a paucity of literature regarding predictors of clinical reasoning at the postgraduate level. We performed a retrospective study on internal medicine residents to determine the sociodemographic and experiential correlates of clinical reasoning in neurological localisation, measured using validated tests.

Methods: We recruited 162 internal medicine residents undergoing a three-month attachment in neurology at the National Neuroscience Institute, Singapore, over a 2.5-year period. Clinical reasoning was assessed in the second month of their attachment via two validated tests of neurological localisation: Extended Matching Questions (EMQ) and the Script Concordance Test (SCT). Data on gender, undergraduate medical education (local vs overseas graduates), graduate medical education, and amount of clinical experience were collected, and their association with EMQ and SCT scores was evaluated via multivariate analysis.

Results: Multivariate analysis indicated that local graduates scored higher than overseas graduates in the SCT (adjusted R2 = 0.101, f2 = 0.112). Being a local graduate and having more local experience positively predicted EMQ scores (adjusted R2 = 0.049, f2 = 0.052).

Conclusion: Clinical reasoning in neurological localisation can be predicted via a two-factor model–undergraduate medical education and the amount of local experience. Context specificity likely underpins the process.

Keywords: Clinical Reasoning, Context Specificity, Extended Matching Questions, Neurological Localization, Script Concordance Test

Practice Highlights

- Clinical reasoning is a combination of two concurrent processes–pattern recognition in familiar circumstances (illness scripts); and deliberate analysis in unfamiliar scenarios (hypothetico-deductive approach).

- Validated tools exist to assess aspects of clinical reasoning–Script Concordance Tests (SCTs) for illness scripts; and Extended Matching Questions (EMQs) for hypothetico-deductive reasoning.

- Doctors who (a) were educated locally; and (b) worked locally for a longer period, tend to reason more similarly to local expert clinicians in the area of neurological localization.

- Development of clinical reasoning in neurology appears to be specific to a given clinical context.

- To optimise the development of clinical reasoning in neurology, internal medicine residency programmes could consider maximising trainees’ exposure to the local medical context before rotating them to a neurology posting.

I. INTRODUCTION

Clinical reasoning is the cognitive process of integrating and weighing clinical information together with past experiences to evaluate diagnostic and management dilemmas (Monteiro & Norman, 2013). Together with an appropriate knowledge base, it is central to clinical competence (Elstein, Shulman, & Sprafka, 1990; Groen & Patel, 1985). Clinical reasoning is especially important for the skill of neurological localisation (Gelb, Gunderson, Henry, Kirshner, & Jozefowicz, 2002; Nicholl & Appleton, 2015), which involves interpreting clinical signs and symptoms to identify the site of a neuroanatomical abnormality, a crucial first step in making a neurological diagnosis. Accurate clinical reasoning is a core competency (Connor, Durning, & Rencic, 2019) and is essential in minimising diagnostic errors (Durning, Trowbridge, & Schuwirth, 2019).

The ‘dual process’ paradigm of clinical reasoning proposes that a combination of rapid intuition and deliberate analysis is employed in clinical decision making (Elstein, 2009; Eva, 2005; Monteiro & Norman, 2013). In familiar circumstances, relevant clinical information is compared with past experiences to arrive at a diagnosis (Elstein, 2009), akin to pattern recognition. This content-specific knowledge is organised into mental networks (‘illness scripts’) for easy retrieval (Boushehri, Arabshahi, & Monajemi, 2015; Norman, Young, & Brooks, 2007). In unfamiliar situations, however, a ‘hypothetico-deductive’ approach is utilised instead, where hypotheses are formulated through conscious deliberations and later tested (Boushehri et al., 2015; Elstein, 2009; Monteiro & Norman, 2013). These reasoning processes work in parallel, but experts are more adept at switching between both approaches whilst maintaining a higher performance level in each (Boushehri et al., 2015; Eva, 2005; Monteiro & Norman, 2013).

Several studies have examined predictors of academic performance in medical undergraduates (Ferguson, James, & Madeley, 2002; Hamdy et al., 2006; Kanna, Gu, Akhuetie, & Dimitrov, 2009). Most have focused on sociodemographic characteristics and educational background, as these bear directly on admission criteria and teaching methods for undergraduate programmes. Female gender (Adams et al., 2008; Ferguson et al., 2002; Guerrasio, Garrity, & Aagaard, 2014; Stegers-Jager, Themmen, Cohen-Schotanus, & Steyerberg, 2015; Woolf, Haq, McManus, Higham, & Dacre, 2008), ethnic majority status (Stegers-Jager et al., 2015; Vaughan, Sanders, Crossley, O’Neill, & Wass, 2015; Woolf, Cave, Greenhalgh, & Dacre, 2008; Woolf, Haq, et al., 2008; Woolf, Potts, & McManus, 2011) and older age (Kusurkar, Kruitwagen, Ten Cate, & Croiset, 2010) were found to be significant predictors; educational background (Kusurkar et al., 2010) and past academic performance (Ferguson et al., 2002; Hamdy et al., 2006; Kanna et al., 2009; Stegers-Jager et al., 2015; Woloschuk, McLaughlin, & Wright, 2010) also showed positive associations. However, academic performance does not solely reflect reasoning skill, especially in postgraduates (Woloschuk et al., 2010; Woloschuk, McLaughlin, & Wright, 2013).

The ‘dual process’ theory also identifies clinical experience as important for clinical reasoning, especially in the formation of illness scripts (Elstein, 2009; Eva, 2005; Monteiro & Norman, 2013). Yet clinical experience has seldom been explored as a predictor, and few studies have examined postgraduates, a group in whom clinical experience might be more relevant.

The current Singapore postgraduate training system is based on the United States’ residency system (Huggan et al., 2012). Medical graduates, whether local or overseas-trained, must first register with the Singapore Medical Council (SMC) to start practising medicine locally. They can then apply for graduate medical education programmes (‘residency’) in various sponsoring institutions to train in a speciality; residency entry can occur immediately after or several years after graduation.

In Singapore, internal medicine residents rotate between subspecialty departments (such as cardiology or neurology) in no fixed order, so two residents rotating through neurology may differ in the amount of experience they have as residents, as clinicians practising locally, and as doctors in general. Experiences may also differ between sponsoring institutions and between undergraduate medical programmes. These differences might influence clinical reasoning.

Additionally, most Singaporean male graduates defer their medical careers to complete a two-year stint with the Singapore Armed Forces (SAF), as part of their compulsory National Service. Within the SAF, medicine is rarely practised in conventional clinical settings, and the quality of clinical experience may be affected. Clinical experience may hence differ between genders.

Several instruments have been designed to assess clinical reasoning (Amini et al., 2011; Boushehri et al., 2015), but they have seldom been used in studies of its predictors (Groves, O’Rourke, & Alexander, 2003; Postma & White, 2015): some studies utilised unvalidated questionnaires (Groves et al., 2003), while others did not specifically assess clinical reasoning (Postma & White, 2015). Moreover, each instrument has a different focus: Extended Matching Questions (EMQ; Beullens, Struyf, & Van Damme, 2005) target ‘hypothetico-deductive’ reasoning, whereas the Script Concordance Test (SCT; Lubarsky, Charlin, Cook, Chalk, & van der Vleuten, 2011) targets illness scripts (Amini et al., 2011; Boushehri et al., 2015). As the two approaches are complementary, it may be prudent to employ multiple instruments when using clinical reasoning as an outcome measure, especially for the important skill of neurological localisation (Gelb et al., 2002; Nicholl & Appleton, 2015).

Given the gaps in the extant literature, we thus aimed to determine predictors of postgraduate performance in clinical reasoning tests, within the context of neurological localisation.

II. METHODS

A. Subjects

Subjects comprised 162 internal medicine residents from two sponsoring institutions (National Healthcare Group and Singapore Health Services). Each resident completed a three-month neurology rotation at the National Neuroscience Institute (NNI), Singapore, between January 2014 and June 2016. Waiver of further ethical deliberation was granted by the SingHealth Centralised Institutional Review Board (CIRB) for this education programme improvement project; subjects were anonymised, and implied informed consent was obtained from all participants. We excluded 17 subjects who did not complete the required assessments, leaving 145 (90%) subjects for analysis.

B. Predictor Variables

We investigated three sociodemographic characteristics: gender, undergraduate medical education (UME) and graduate medical education (GME). UME denotes the location of the undergraduate training institution and was classified as local (Singapore) or overseas. GME refers to the residency programmes of the two sponsoring institutions, anonymised as ‘A’ and ‘B’.

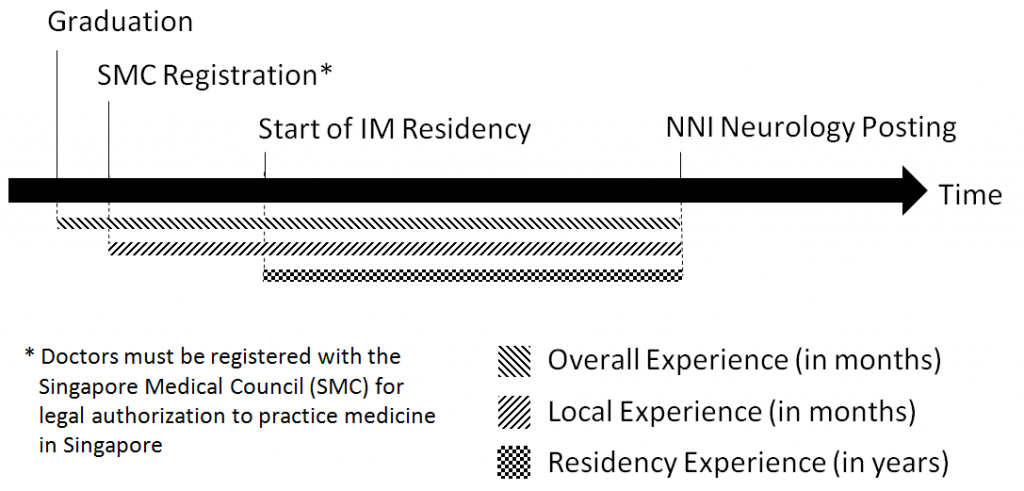

Clinical experience was measured by three metrics: overall experience (OE), local experience (LE) and residency experience (RE; Figure 1). OE and LE were calculated as the number of months from graduation and from SMC registration, respectively, to the month of the test attempt. RE, defined as the residency training year, was categorised as ‘Year 1’, ‘Year 2’ or ‘Year 3’.

Figure 1. Measures of clinical experience
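Both OE and LE are month counts ending at the test month, so a single helper can compute them. The following Python sketch assumes month-level dates and simply differences calendar months, ignoring the day of the month; the article does not say how partial months were handled, so that convention, the function names and the example dates are all hypothetical.

```python
from datetime import date


def months_between(start: date, end: date) -> int:
    """Whole calendar months from the month of `start` to the month of `end`.

    The day of the month is ignored, mirroring a month-level record
    (an assumption; the source does not state its rounding rule).
    """
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if months < 0:
        raise ValueError("end month precedes start month")
    return months


def experience_months(graduation: date, smc_registration: date, test_month: date) -> dict:
    """Overall (OE) and local (LE) experience, in months, at the time of the test."""
    return {
        "OE": months_between(graduation, test_month),
        "LE": months_between(smc_registration, test_month),
    }


# Hypothetical overseas graduate: graduated June 2012, registered with the SMC
# in January 2013, attempted the tests in March 2015.
print(experience_months(date(2012, 6, 1), date(2013, 1, 1), date(2015, 3, 1)))
# {'OE': 33, 'LE': 26}
```

For a graduate who registers with the SMC some time after graduating, LE is smaller than OE by the gap between the two dates; for one who registers promptly, the two measures nearly coincide.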

We obtained data on gender, GME and RE from our institution records; UME and month of SMC registration were obtained from the SMC Registry of Doctors. Graduation month was derived from our institution records for local graduates and estimated for overseas graduates from the dates of their alma mater’s most recent graduation ceremony, available online.

C. Outcome Measures

We used two validated methods of assessment, Script Concordance Test (SCT; Lubarsky et al., 2011) and Extended Matching Questions (EMQ; Beullens et al., 2005), to evaluate clinical reasoning in neurological localisation. We specifically selected the SCT and EMQ tests that had previously demonstrated construct validity and reliability in our Singapore context (Tan, Tan, Kandiah, Samarasekera, & Ponnamperuma, 2014; Tan et al., 2017).

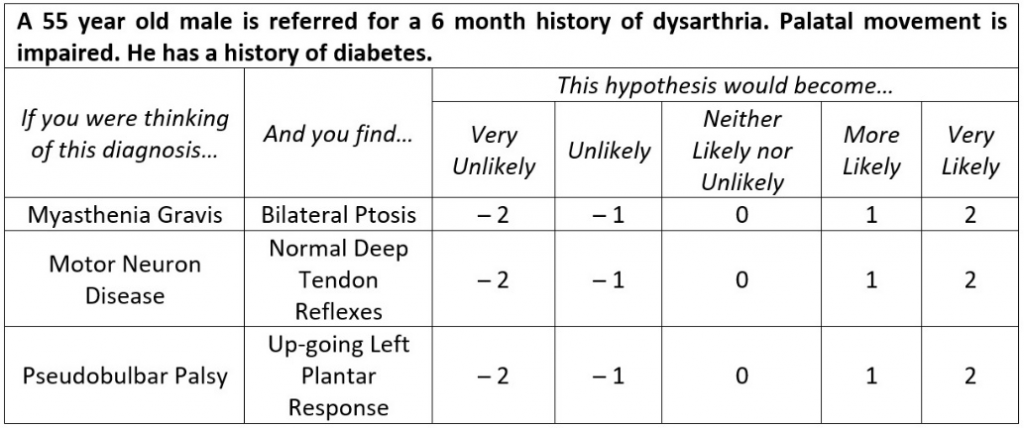

An SCT consists of short case scenarios, each followed by 3–5 question items (Fournier, Demeester, & Charlin, 2008; Figure 2). Each item has three parts: a relevant diagnostic or management option; a new clinical finding; and a five-point Likert scale on which the candidate rates the new finding’s effect on the initial option. A scoring key is derived from the responses of an expert panel, and subsequent test-takers are scored by their degree of concordance with the experts (Fournier et al., 2008; Wan, 2015).

Our locally-validated SCT (Tan et al., 2014) contained 14 scenarios, each with 3–5 question items, totalling 53 items; reliability and generalisability were acceptable (Cronbach α 0.75, G-coefficient 0.74). Questions and scoring keys were derived from local experts. We analysed only the neurological localisation component (7 scenarios).

Figure 2. Script Concordance Test (SCT)–Sample questions (Tan et al., 2014)
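The idea of scoring by "degree of concordance" can be made concrete with the aggregate scoring method commonly described in the SCT literature: each item's credit for a given response is the number of panellists who chose that response divided by the number who chose the modal response. The article does not state which scoring variant was used for the local SCT, so this is an illustrative sketch only; the -2 to +2 coding of the Likert scale, the function names and the panel data are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence

# Five-point Likert scale, coded -2..+2 (an assumed coding).
LIKERT = (-2, -1, 0, 1, 2)


def build_item_key(panel_responses: Sequence[int]) -> Dict[int, float]:
    """Credit per option = panellists choosing it / panellists choosing the modal option."""
    counts = Counter(panel_responses)
    modal_count = max(counts.values())
    return {option: counts.get(option, 0) / modal_count for option in LIKERT}


def sct_percentage(answers: Sequence[int], panel: Sequence[Sequence[int]]) -> float:
    """Sum of item credits, expressed as a percentage of the maximum possible score."""
    if len(answers) != len(panel):
        raise ValueError("one answer is needed per item")
    keys = [build_item_key(item_panel) for item_panel in panel]
    total = sum(key[answer] for key, answer in zip(keys, answers))
    return 100.0 * total / len(panel)


# Two hypothetical items, each answered by a five-member expert panel.
panel = [
    [1, 1, 1, 2, 0],       # modal response +1 (chosen by 3 panellists)
    [-1, -1, -2, -2, -2],  # modal response -2 (chosen by 3 panellists)
]
print(sct_percentage([1, -2], panel))  # modal answer on both items: 100.0
print(sct_percentage([2, -1], panel))  # partial credit of 1/3 and 2/3
```

Under this variant, a candidate who always picks the panel's modal response scores 100%, while a response that no panellist chose earns nothing for that item.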

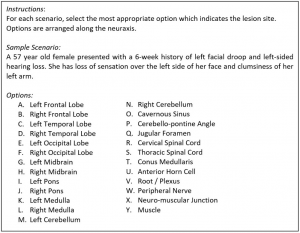

EMQs are multiple-choice questions consisting of case scenarios, each with a single answer drawn from a shared list of at least 7 options (Case & Swanson, 1993; Fenderson, Damjanov, Robeson, Veloski, & Rubin, 1997). Our locally-validated EMQ (Tan et al., 2017) contained 45 scenarios with a shared answer list of 25 options (Figure 3); reliability and generalisability were excellent (Cronbach α 0.85, G-coefficient 0.85).

Figure 3. Extended Matching Questions (EMQ)–Sample questions (Tan et al., 2017)
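The reliability figures quoted for both tests include Cronbach's α. As a reminder of what that statistic captures, the sketch below computes α from an examinee-by-item score matrix with the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores). It assumes NumPy is available and uses hypothetical score matrices; the G-coefficients come from generalisability theory and are not reproduced here.

```python
import numpy as np


def cronbach_alpha(scores) -> float:
    """Cronbach's alpha for a score matrix (rows = examinees, columns = items)."""
    x = np.asarray(scores, dtype=float)
    if x.ndim != 2 or x.shape[1] < 2:
        raise ValueError("expected a 2-D matrix with at least two items")
    k = x.shape[1]
    # Sample variances (ddof=1) are used for both terms so the ratio is consistent.
    item_variances = x.var(axis=0, ddof=1).sum()
    total_variance = x.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1 - item_variances / total_variance))


# Hypothetical matrix in which both items rank examinees identically.
print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))  # 1.0
```

Items that covary strongly push α towards 1, whereas uncorrelated items give an α near 0, which is why α is read as a measure of internal consistency.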

Subjects completed both timed, closed-book tests via an online portal during the second month of their three-month neurology rotation, as a formative assessment. Scores were expressed as percentages. Subjects had no prior exposure to the SCT or EMQ, either during their neurology rotation or as practising doctors, and were introduced to the test formats on the day of assessment, when one worked example of each format was provided before the test.

D. Statistical Analysis

Descriptive statistics were calculated to check the assumption of normality before proceeding with multivariate analysis. We used SPSS Statistics version 20 and considered p-values < 0.05 statistically significant; all tests were two-tailed.

Multivariate stepwise linear regression models were used to assess the relationship between the predictor variables (gender, UME, GME, OE, LE and RE) and the outcome measures (SCT and EMQ scores). Tolerance values were computed to assess multicollinearity, with values below 0.60 considered problematic (Chan, 2004). Overall model performance was assessed using the adjusted R2, and effect sizes were measured with Cohen’s f2. Effect sizes of 0.02, 0.15 and 0.35 were considered small, medium and large, respectively (Cohen, 1988).
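Stepwise selection can be sketched as alternating forward-entry and backward-removal steps, each driven by a partial F-test. This is not SPSS's implementation: the article does not report its entry and removal criteria, so the thresholds below (p < 0.05 to enter, p > 0.10 to remove) are assumptions, as are the helper names. The sketch assumes NumPy and SciPy are available, fits an intercept in every model, and expects predictors already numerically coded (for example UME as 0 = overseas, 1 = local).

```python
import numpy as np
from scipy import stats


def _rss(X, y, cols):
    """Residual sum of squares and parameter count for an OLS fit with intercept."""
    design = np.column_stack([np.ones(len(y))] + [X[:, c] for c in cols])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return float(resid @ resid), design.shape[1]


def _partial_p(X, y, base, extra):
    """p-value of the partial F-test for adding column `extra` to model `base`."""
    rss_small, _ = _rss(X, y, base)
    rss_big, n_params = _rss(X, y, base + [extra])
    df_resid = len(y) - n_params
    f_stat = (rss_small - rss_big) / (rss_big / df_resid)
    return float(stats.f.sf(f_stat, 1, df_resid))


def stepwise_select(X, y, p_enter=0.05, p_remove=0.10, max_steps=50):
    """Forward entry / backward removal until no predictor changes status."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    selected = []
    for _ in range(max_steps):
        changed = False
        # Forward step: add the candidate with the smallest entry p-value.
        candidates = [c for c in range(X.shape[1]) if c not in selected]
        if candidates:
            p_in = {c: _partial_p(X, y, selected, c) for c in candidates}
            best = min(p_in, key=p_in.get)
            if p_in[best] < p_enter:
                selected.append(best)
                changed = True
        # Backward step: drop the selected predictor with the largest p-value.
        if selected:
            p_out = {c: _partial_p(X, y, [s for s in selected if s != c], c) for c in selected}
            worst = max(p_out, key=p_out.get)
            if p_out[worst] > p_remove:
                selected.remove(worst)
                changed = True
        if not changed:
            break
    return selected


# Hypothetical data in which only column 0 drives the outcome.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(150, 3))
y_demo = 3.0 * X_demo[:, 0] + rng.normal(size=150)
print(stepwise_select(X_demo, y_demo))
```

Setting the removal threshold above the entry threshold keeps a predictor from entering and leaving on alternate steps; the `max_steps` cap is an extra guard against cycling.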

III. RESULTS

Most of the 145 subjects were female (57.93%), local graduates (60.00%) and in residency ‘B’ (68.28%; Table 1). Mean (± standard deviation) SCT and EMQ scores were 68.03 ± 8.24% and 81.84 ± 12.17%, respectively. Descriptive statistics did not indicate a need for non-parametric tests.

| Characteristic | Category | n or mean ± SD | % |
|---|---|---|---|
| Sociodemographic characteristics | | | |
| Gender | Male | 61 | 42.07 |
| | Female | 84 | 57.93 |
| Undergraduate Medical Education (UME) | Local | 87 | 60.00 |
| | Overseas | 58 | 40.00 |
| Graduate Medical Education (GME) | Residency ‘A’ | 46 | 31.72 |
| | Residency ‘B’ | 99 | 68.28 |
| Clinical experience (months)* | | | |
| Overall Experience (OE) | | 38.34 ± 21.32 | |
| Local Experience (LE) | | 33.43 ± 16.50 | |
| Residency Experience (RE) | Year 1 | 55 | 37.93 |
| | Year 2 | 55 | 37.93 |
| | Year 3 | 35 | 24.14 |
| Test scores (%)* | | | |
| Script Concordance Test (SCT) | | 68.03 ± 8.24 | |
| Extended Matching Questions (EMQ) | | 81.84 ± 12.17 | |

*Values expressed as mean ± standard deviation.

Table 1. Characteristics of subject population (n = 145)

Because the EMQ and SCT both assess clinical reasoning, albeit different aspects of it, including each test score as a predictor of the other in the multivariate analysis (Table 2, Models 1A and 2A) was potentially contentious. We therefore created additional models that excluded the other test score (Models 1B and 2B).

| Model | Outcome | Co-variable | B | 95% CI‡ | SE | Sig. | Adjusted R2 | f2 |
|---|---|---|---|---|---|---|---|---|
| 1A | SCT score | UME* | 4.5 | 2.0–7.0 | 1.3 | 0.001 | 0.204 | 0.256 |
| | | EMQ score | 0.2 | 0.1–0.3 | 0.1 | < 0.001 | | |
| 1B | SCT score | UME | 5.5 | 2.9–8.1 | 1.3 | < 0.001 | 0.101 | 0.112 |
| 2A | EMQ score | LE† | 0.1 | 0.0–0.2 | 0.1 | 0.025 | 0.164 | 0.196 |
| | | SCT score | 0.5 | 0.3–0.8 | 0.1 | < 0.001 | | |
| 2B | EMQ score | UME | 4.7 | 0.7–8.7 | 2.0 | 0.021 | 0.049 | 0.052 |
| | | LE | 0.1 | 0.0–0.2 | 0.1 | 0.041 | | |

*Undergraduate Medical Education, Overseas (reference) vs Local (comparator). †Local Experience. ‡All tolerance values > 0.89.

Table 2. Multivariate correlation of outcome measures with predictor variables
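Table 2's footnote reports that all tolerance values exceeded 0.89, well above the 0.60 cut-off adopted in the Methods. A predictor's tolerance is 1 − R2 from regressing that predictor on all the other predictors; its reciprocal is the variance inflation factor. The minimal NumPy sketch below illustrates the statistic on hypothetical data rather than reproducing the SPSS output; the function name is hypothetical.

```python
import numpy as np


def tolerances(X) -> np.ndarray:
    """Tolerance of each predictor: 1 - R^2 from regressing it on the others (with intercept)."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    result = np.empty(p)
    for j in range(p):
        target = X[:, j]
        design = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(design, target, rcond=None)
        ss_res = float(np.sum((target - design @ beta) ** 2))
        ss_tot = float(np.sum((target - target.mean()) ** 2))
        result[j] = ss_res / ss_tot  # equals 1 - R^2_j
    return result


# Hypothetical predictors: x2 is nearly a copy of x0, x1 is unrelated to both.
rng = np.random.default_rng(42)
x0 = rng.normal(size=200)
x1 = rng.normal(size=200)
x2 = x0 + 0.05 * rng.normal(size=200)
tol = tolerances(np.column_stack([x0, x1, x2]))
print(tol.round(3))
print("problematic (< 0.60):", np.where(tol < 0.60)[0].tolist())
```

Near-duplicate predictors such as x0 and x2 receive tolerances close to 0, while an independent predictor stays close to 1; values above 0.89, as reported in Table 2, indicate little overlap among the retained predictors.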

Local graduates and better EMQ performers tended to have higher SCT scores (adjusted R2 = 0.204, f2 = 0.256; Model 1A). Residents with more local experience and higher SCT scores also had higher EMQ scores (adjusted R2 = 0.164, f2 = 0.196; Model 2A).

In the additional models, UME remained the sole predictor of SCT scores (adjusted R2 = 0.101, f2 = 0.112; Model 1B). For EMQ scores, UME became a significant predictor alongside LE, with local graduates scoring higher (adjusted R2 = 0.049, f2 = 0.052; Model 2B).
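The f2 values in Table 2 can be reproduced from its adjusted R2 column using f2 = R2 / (1 − R2); the arithmetic below confirms that each reported f2 matches this conversion to three decimal places. The benchmark labels follow the Cohen (1988) thresholds of 0.02, 0.15 and 0.35 quoted in the Methods; the function names are hypothetical.

```python
def cohens_f2(r2: float) -> float:
    """Cohen's f^2 for a whole regression model: R^2 / (1 - R^2)."""
    if not 0.0 <= r2 < 1.0:
        raise ValueError("R^2 must lie in [0, 1)")
    return r2 / (1.0 - r2)


def cohen_label(f2: float) -> str:
    """Cohen's (1988) conventional benchmarks: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "below small"


# Adjusted R^2 values reported in Table 2.
adjusted_r2 = {"1A": 0.204, "1B": 0.101, "2A": 0.164, "2B": 0.049}
for model, r2 in adjusted_r2.items():
    f2 = cohens_f2(r2)
    print(f"Model {model}: f2 = {f2:.3f} ({cohen_label(f2)})")
```

Running it prints f2 values of 0.256 (medium), 0.112 (small), 0.196 (medium) and 0.052 (small) for Models 1A, 1B, 2A and 2B, matching Table 2.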

IV. DISCUSSION

As there is a paucity of literature about postgraduate performance in clinical reasoning, this study provides a unique opportunity to evaluate its predictors, especially clinical experience. We used validated instruments to measure clinical reasoning skills in neurological localisation, and elucidated multivariate associations between clinical reasoning, clinical experience, and sociodemographic characteristics of our subjects.

Our results suggest that local graduates tend to score better in both clinical reasoning tests. Consistent with the existing literature (Postma & White, 2015), this suggests that educational background plays an important role in the development of clinical reasoning skills. Since SCT performance reflects the degree of concordance with judgements made by local experts (Tan et al., 2014; Tan et al., 2017), being educated locally may foster reasoning that converges with that of local experts. It is also possible that local undergraduate programmes provide better training in neurological localisation, as local graduates also performed better in the EMQ, an instrument whose scoring is independent of local experts’ views.

Interestingly, we found no significant associations between clinical experience and SCT performance. The accumulation of context-specific experiential knowledge is crucial for developing effective illness scripts (Elstein, 2009), hence SCT scores were expected to rise with increasing clinical experience (Boushehri et al., 2015; Kazour, Richa, Zoghbi, El-Hage, & Haddad, 2017; Lubarsky, Chalk, Kazitani, Gagnon, & Charlin, 2009; Norman et al., 2007). However, heuristics also play an important role (Boushehri et al., 2015; Elstein, 2009; Norman et al., 2007), suggesting that efficiency of knowledge organisation may be independent of clinical exposure. Alternatively, the study period may be insufficient for subjects to fully develop their illness scripts.

In contrast, more experienced doctors performed better at the EMQ, supporting the premise that expertise is at least partially linked to experience and to acquiring a strong knowledge base (Elstein et al., 1990; Monteiro & Norman, 2013; Neufeld, Norman, Feightner, & Barrows, 1981). Interestingly, only local experience, and not overall experience, was a significant predictor. This suggests that overseas experience may not significantly improve clinical reasoning skills in neurological localisation, and that the acquisition of such skills is a context-specific process (Durning, Artino, Pangaro, van der Vleuten, & Schuwirth, 2011; Durning et al., 2012; McBee et al., 2015).

Our findings have two potential implications for graduate medical education in Singapore. The first concerns the design of internal medicine residency programmes. To optimise the development of clinical reasoning in neurology, programmes could maximise local experience by assigning residents with less local experience to neurology only in the final year of the three-year residency. However, as local experience may also influence clinical reasoning in other subspecialties, further research is necessary to ascertain the posting configuration that best supports clinical reasoning development across all disciplines.

Secondly, context appears to influence clinical reasoning in neurological localisation. Our results suggest that training location plays a role at both undergraduate and postgraduate levels. This might be due to context specificity, which attributes performance variations to situational factors (Durning et al., 2011; Durning et al., 2012; McBee et al., 2015). Since local exposure appears to be beneficial, it implies that overseas graduates and clinicians may require more time to acclimatise or familiarise themselves with the Singapore clinical context.

Our study has several strengths. We used validated, reliable tests to specifically assess clinical reasoning skills for neurological localisation. Homogeneity of the subject cohort, along with the consistent time-frame of test attempts, also allowed us to minimise confounders such as intrinsic motivation (Ferguson et al., 2002; Kusurkar et al., 2010; Vaughan et al., 2015), instructional design (Postma & White, 2015), and duration of neurology exposure.

There were also limitations. This is a single-centre, single-subspecialty study with a moderate sample size, and our results may not apply to aspects of neurology other than neurological localisation. Further studies are needed to determine whether the results generalise beyond the neurology SCT and EMQ, and to other postgraduate populations. The study design was also limited by its reliance on secondary data, so information on other potentially important variables, such as ethnicity (Adams et al., 2008; Guerrasio et al., 2014; Stegers-Jager et al., 2015; Woolf, Cave, et al., 2008; Woolf, Haq, et al., 2008), age (Woolf et al., 2011) and previous academic performance (Ferguson et al., 2002; Hamdy et al., 2006; Kanna et al., 2009; Stegers-Jager et al., 2015; Woloschuk et al., 2010), could not be fully obtained for evaluation. Other confounding variables may therefore have influenced our findings. Test questions may also intrinsically favour local graduates, as they were formulated by local experts, but this is less likely as globally relevant clinical scenarios were tested.

V. CONCLUSION

In conclusion, our study suggests that local clinical experience and the site of undergraduate education predict postgraduate clinical reasoning skill in validated tests of neurological localisation. Context specificity likely underpins a significant part of clinical reasoning. Our findings have practical implications for residency programme design and highlight the need to give overseas graduates and clinicians time to adapt to the local clinical context.

Notes on Contributors

Dr Loh Kieng Wee is a Medical Officer under the Ministry of Health Holdings, Singapore.

Jerome Ingmar Rotgans is an Assistant Professor at the Lee Kong Chian School of Medicine, Nanyang Technological University, Singapore.

Dr Kevin Tan is Education Director, Vice-Chair (Education) and a Senior Consultant at the Department of Neurology, National Neuroscience Institute, Singapore.

Dr Nigel Choon Kiat Tan is Deputy Group Director Education (Undergraduate), Singapore Health Services, and a Senior Consultant at the Department of Neurology, National Neuroscience Institute, Singapore.

Ethical Approval

Ethical exemption has been granted from the SingHealth Centralised Institutional Review Board A, CIRB Ref: 2020/2228.

Acknowledgements

The authors would like to acknowledge the technical assistance & support provided by the Office of Neurological Education, National Neuroscience Institute, Singapore.

Funding

The research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Declaration of Interest

The authors have no conflicts of interest, including financial, institutional and other relationships that might lead to bias.

References

Adams, A., Buckingham, C. D., Lindenmeyer, A., McKinlay, J. B., Link, C., Marceau, L., & Arber, S. (2008). The influence of patient and doctor gender on diagnosing coronary heart disease. Sociology of Health and Illness, 30(1), 1–18.

Amini, M., Moghadami, M., Kojuri, J., Abbasi, H., Abadi, A. A., Molaee, N., & Charlin, B. (2011). An innovative method to assess clinical reasoning skills: Clinical reasoning tests in the second national medical science Olympiad in Iran. BMC Research Notes, 4(1), 418.

Beullens, J., Struyf, E., & Van Damme, B. (2005). Do extended matching multiple-choice questions measure clinical reasoning? Medical Education, 39(4), 410–417.

Boushehri, E., Arabshahi, K. S., & Monajemi, A. (2015). Clinical reasoning assessment through medical expertise theories: Past, present and future directions. Medical Journal of the Islamic Republic of Iran, 29(1), 222.

Case, S. M., & Swanson, D. B. (1993). Extended‐matching items: A practical alternative to free‐response questions. Teaching and Learning in Medicine, 5(2), 107–115.

Chan, Y. H. (2004). Biostatistics 201: Linear regression analysis. Singapore Medical Journal, 45(2), 55–61.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Lawrence Erlbaum Associates.

Connor, D. M., Durning, S. J., & Rencic, J. J. (2019). Clinical reasoning as a core competency. Academic Medicine. http://dx.doi.org/10.1097/ACM.0000000000003027

Durning, S., Artino, A. R., Pangaro, L., van der Vleuten, C. P., & Schuwirth, L. (2011). Context and clinical reasoning: Understanding the perspective of the expert’s voice. Medical Education, 45(9), 927–938.

Durning, S. J., Artino, A. R., Boulet, J. R., Dorrance, K., van der Vleuten, C., & Schuwirth, L. (2012). The impact of selected contextual factors on experts’ clinical reasoning performance (Does context impact clinical reasoning performance in experts?). Advances in Health Sciences Education, 17(1), 65–79.

Durning, S. J., Trowbridge, R. L., & Schuwirth, L. (2019). Clinical reasoning and diagnostic error: A call to merge two worlds to improve patient care. Academic Medicine. http://dx.doi.org/10.1097/ACM.0000000000003041

Elstein, A. S. (2009). Thinking about diagnostic thinking: A 30-year perspective. Advances in Health Sciences Education, 14(sup1), 7–18.

Elstein, A., Shulman, L., & Sprafka, S. (1990). Medical problem solving: A ten-year retrospective. Evaluation and the Health Professions, 13, 5–36.

Eva, K. W. (2005). What every teacher needs to know about clinical reasoning. Medical Education, 39(1), 98–106.

Fenderson, B. A., Damjanov, I., Robeson, M. R., Veloski, J. J., & Rubin, E. (1997). The virtues of extended matching and uncued tests as alternatives to multiple choice questions. Human Pathology, 28(5), 526–532.

Ferguson, E., James, D., & Madeley, L. (2002). Factors associated with success in medical school: Systematic review of the literature. British Medical Journal, 324(7343), 952–957.

Fournier, J. P., Demeester, A., & Charlin, B. (2008). Script concordance tests: Guidelines for construction. BMC Medical Informatics and Decision Making, 8, 18.

Gelb, D. J., Gunderson, C. H., Henry, K. A., Kirshner, H. S., & Jozefowicz, R. F. (2002). The neurology clerkship core curriculum. Neurology, 58(6), 849–852.

Groen, G. J., & Patel, V. L. (1985). Medical problem solving: Some questionable assumptions. Medical Education, 19(2), 95–100.

Groves, M., O’Rourke, P., & Alexander, H. (2003). The association between student characteristics and the development of clinical reasoning in a graduate-entry, PBL medical programme. Medical Teacher, 25(6), 626–631.

Guerrasio, J., Garrity, M. J., & Aagaard, E. M. (2014). Learner deficits and academic outcomes of medical students, residents, fellows, and attending physicians referred to a remediation program, 2006-2012. Academic Medicine, 89(2), 352–358.

Hamdy, H., Prasad, K., Anderson, M. B., Scherpbier, A., Williams, R., Zwierstra, R., & Cuddihy, H. (2006). BEME systematic review: Predictive values of measurements obtained in medical schools and future performance in medical practice. Medical Teacher, 28(2), 103–116.

Huggan, P. J., Samarasekera, D. D., Archuleta, S., Khoo, S. M., Sim, J. H., Sin, C. S., & Ooi, S. B. (2012). The successful, rapid transition to a new model of graduate medical education in Singapore. Academic Medicine, 87(9), 1268–1273.

Kanna, B., Gu, Y., Akhuetie, J., & Dimitrov, V. (2009). Predicting performance using background characteristics of international medical graduates in an inner-city university-affiliated Internal Medicine residency training program. BMC Medical Education, 9, 42.

Kazour, F., Richa, S., Zoghbi, M., El-Hage, W., & Haddad, F. G. (2017). Using the script concordance test to evaluate clinical reasoning skills in psychiatry. Academic Psychiatry, 41(1), 86–90.

Kusurkar, R., Kruitwagen, C., Ten Cate, O., & Croiset, G. (2010). Effects of age, gender and educational background on strength of motivation for medical school. Advances in Health Sciences Education, 15(3), 303–313.

Lubarsky, S., Chalk, C., Kazitani, D., Gagnon, R., & Charlin, B. (2009). The script concordance test: A new tool assessing clinical judgement in neurology. Canadian Journal of Neurological Sciences, 36, 326–331.

Lubarsky, S., Charlin, B., Cook, D. A., Chalk, C., & van der Vleuten, C. P. M. (2011). Script concordance testing: A review of published validity evidence. Medical Education, 45(4), 329–338.

McBee, E., Ratcliffe, T., Picho, K., Artino, A. R., Schuwirth, L., Kelly, W., … Durning, S. J. (2015). Consequences of contextual factors on clinical reasoning in resident physicians. Advances in Health Sciences Education, 20(5), 1225–1236.

Monteiro, S. M., & Norman, G. (2013). Diagnostic reasoning: Where we’ve been, where we’re going. Teaching and Learning in Medicine, 25(sup1), S26–S32.

Neufeld, V. R., Norman, G. R., Feightner, J. W., & Barrows, H. S. (1981). Clinical problem-solving by medical students: A cross-sectional and longitudinal analysis. Medical Education, 15, 315–322.

Nicholl, D. J., & Appleton, J. P. (2015). Clinical neurology: Why this still matters in the 21st century. Journal of Neurology, Neurosurgery and Psychiatry, 86(2), 229–233.

Norman, G., Young, M., & Brooks, L. (2007). Non-analytical models of clinical reasoning: The role of experience. Medical Education, 41(12), 1140–1145.

Postma, T. C., & White, J. G. (2015). Socio-demographic and academic correlates of clinical reasoning in a dental school in South Africa. European Journal of Dental Education, 10, 1–8.

Stegers-Jager, K. M., Themmen, A. P. N., Cohen-Schotanus, J., & Steyerberg, E. W. (2015). Predicting performance: Relative importance of students’ background and past performance. Medical Education, 49(9), 933–945.

Tan, K., Chin, H. X., Yau, C. W. L., Lim, E. C. H., Samarasekera, D., Ponnamperuma, G., & Tan, N. C. K. (2017). Evaluating a bedside tool for neuroanatomical localization with extended-matching questions. Anatomical Sciences Education, 11(3), 262–269.

Tan, K., Tan, N. C. K., Kandiah, N., Samarasekera, D., & Ponnamperuma, G. (2014). Validating a script concordance test for assessing neurological localization and emergencies. European Journal of Neurology, 21(11), 1419–1422.

Vaughan, S., Sanders, T., Crossley, N., O’Neill, P., & Wass, V. (2015). Bridging the gap: The roles of social capital and ethnicity in medical student achievement. Medical Education, 49(1), 114–123.

Wan, S. H. (2015). Using the script concordance test to assess clinical reasoning skills in undergraduate and postgraduate medicine. Hong Kong Medical Journal, 21(5), 455–461.

Woloschuk, W., McLaughlin, K., & Wright, B. (2010). Is undergraduate performance predictive of postgraduate performance? Teaching and Learning in Medicine, 22(3), 202–204.

Woloschuk, W., McLaughlin, K., & Wright, B. (2013). Predicting performance on the Medical Council of Canada Qualifying Exam Part II. Teaching and Learning in Medicine, 25(3), 237–241.

Woolf, K., Cave, J., Greenhalgh, T., & Dacre, J. (2008). Ethnic stereotypes and the underachievement of UK medical students from ethnic minorities: Qualitative study. British Medical Journal, 337, a1220. https://doi.org/10.1136/bmj.a1220

Woolf, K., Haq, I., McManus, I. C., Higham, J., & Dacre, J. (2008). Exploring the underperformance of male and minority ethnic medical students in first year clinical examinations. Advances in Health Sciences Education, 13(5), 607–616.

Woolf, K., Potts, H. W. W., & McManus, I. C. (2011). Ethnicity and academic performance in UK trained doctors and medical students: Systematic review and meta-analysis. British Medical Journal, 342, d901. https://doi.org/10.1136/bmj.d901

*Nigel Choon Kiat Tan

Office of Neurological Education,

Department of Neurology,

National Neuroscience Institute

11 Jalan Tan Tock Seng,

Singapore 308433

Email: nigel.tan@alumni.nus.edu.sg
